Global catastrophic risk

A global catastrophic risk is a hypothetical future event that could damage human well-being on a global scale,[2] even endangering or destroying modern civilization.[3] An event that could cause human extinction or permanently and drastically curtail humanity's potential is known as an existential risk.[4]

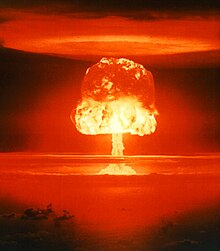

Potential global catastrophic risks include anthropogenic risks, caused by humans (technology, governance, climate change), and non-anthropogenic or natural risks.[3] Technological risks include the creation of destructive artificial intelligence, biotechnology or nanotechnology. Insufficient or malign global governance creates risks in the social and political domain, such as a global war (including nuclear holocaust), bioterrorism using genetically modified organisms, cyberterrorism destroying critical infrastructure like the electrical grid, or the failure to manage a natural pandemic. Problems and risks in the domain of earth system governance include global warming, environmental degradation (including extinction of species), famine as a result of non-equitable resource distribution, human overpopulation, crop failures and non-sustainable agriculture.

Examples of non-anthropogenic risks are an asteroid impact event, a supervolcanic eruption, a lethal gamma-ray burst, a geomagnetic storm destroying electronic equipment, natural long-term climate change, hostile extraterrestrial life, or the predictable transformation of the Sun into a red giant star engulfing the Earth.

Over the last two decades, a number of academic and non-profit organizations have been established to research global catastrophic and existential risks and formulate potential mitigation measures.[5][6][7][8]

Definition and classification

Defining global catastrophic risks

The term global catastrophic risk "lacks a sharp definition", and generally refers (loosely) to a risk that could inflict "serious damage to human well-being on a global scale".[10]

Humanity has suffered large catastrophes before. Some of these caused serious damage but were only local in scope: for example, the Black Death may have killed a third of Europe's population,[11] 10% of the global population at the time.[12] Others were global but less severe: for example, the 1918 influenza pandemic killed an estimated 3–6% of the world's population.[13] Most global catastrophic risks would not be so intense as to kill the majority of life on Earth, but even if one did, the ecosystem and humanity would eventually recover (in contrast to existential risks).

Similarly, in Catastrophe: Risk and Response, Richard Posner singles out and groups together events that bring about "utter overthrow or ruin" on a global, rather than a "local or regional", scale. Posner highlights such events as worthy of special attention on cost–benefit grounds because they could directly or indirectly jeopardize the survival of the human race as a whole.[14]

Defining existential risks

Existential risks are defined as "risks that threaten the destruction of humanity's long-term potential."[15] The instantiation of an existential risk (an existential catastrophe[16]) would either cause outright human extinction or irreversibly lock in a drastically inferior state of affairs.[9][17] Existential risks are a sub-class of global catastrophic risks, where the damage is not only global, but also terminal and permanent (preventing recovery and thus impacting both the current and all subsequent generations).[9]

Non-extinction risks

While extinction is the most obvious way in which humanity's long-term potential could be destroyed, there are others, including unrecoverable collapse and unrecoverable dystopia.[18] A disaster severe enough to cause the permanent, irreversible collapse of human civilisation would constitute an existential catastrophe, even if it fell short of extinction.[18] Similarly, if humanity fell under a totalitarian regime, and there were no chance of recovery—as imagined by George Orwell in his 1949 novel Nineteen Eighty-Four[19]—such a dystopian future would also be an existential catastrophe.[20] Bryan Caplan writes that "perhaps an eternity of totalitarianism would be worse than extinction".[20] A dystopian scenario shares the key features of extinction and unrecoverable collapse of civilisation—before the catastrophe, humanity faced a vast range of bright futures to choose from; after the catastrophe, humanity is locked forever in a terrible state.[18]

Likelihood

Natural vs. anthropogenic

Experts generally agree that anthropogenic existential risks are (much) more likely than natural risks.[18][21][22][23][24] A key difference between these risk types is that empirical evidence can place an upper bound on the level of natural risk.[23] Humanity has existed for at least 200,000 years, over which it has been subject to a roughly constant level of natural risk. If the natural risk were high, then it would be highly unlikely that humanity would have survived as long as it has. Based on a formalization of this argument, researchers have concluded that we can be confident that natural risk is lower than 1 in 14,000 (and likely "less than one in 87,000") per year.[23]
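A minimal numerical sketch of the survival argument follows. It assumes, as the argument does, a constant annual probability of extinction from natural causes (called `mu` below), so that the chance of surviving T years is (1 − mu)^T. It then solves for the rate at which surviving 200,000 years would have had a probability of only one in a million, or one in ten. These two likelihood thresholds are illustrative assumptions, not necessarily the ones used in the cited study, but they give rates of roughly 1 in 14,500 and 1 in 87,000 per year, close to the figures quoted above.

```python
YEARS_SURVIVED = 200_000  # lower bound on the age of Homo sapiens, from the text


def max_annual_rate(years: int, likelihood: float) -> float:
    """Constant annual extinction probability mu at which surviving `years`
    consecutive years has probability `likelihood`, i.e. (1 - mu) ** years == likelihood."""
    return 1.0 - likelihood ** (1.0 / years)


# Illustrative likelihood thresholds (assumptions, not taken from the cited study)
for threshold in (1e-6, 1e-1):
    mu = max_annual_rate(YEARS_SURVIVED, threshold)
    print(f"survival likelihood {threshold:g}: mu = {mu:.3g} per year, about 1 in {1 / mu:,.0f}")
```

The stricter the threshold, the higher the rate that can be ruled out; the long survival record is what makes either bound small.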

Another empirical method for studying the likelihood of certain natural risks is to investigate the geological record.[18] For example, the probability of a comet or asteroid impact large enough to cause an impact winter leading to human extinction before the year 2100 has been estimated at one in a million.[25][26] Moreover, large supervolcano eruptions may cause a volcanic winter that could endanger the survival of humanity.[27] The geological record suggests that supervolcanic eruptions occur on average about every 50,000 years, though most such eruptions would not reach the scale required to cause human extinction.[27] Famously, the supervolcano Mt. Toba may have almost wiped out humanity at the time of its last eruption (though this is contentious).[27][28]
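To turn a mean recurrence interval into a probability over a given window, one common simplifying assumption is that eruptions occur independently at a constant average rate (a Poisson process). Under that assumption, which is introduced here and not taken from the cited sources, an average interval of 50,000 years implies roughly a 0.2% chance of at least one supervolcanic eruption in any given century. As noted above, most such eruptions would not be large enough to threaten extinction.

```python
import math


def prob_at_least_one(mean_interval_years: float, window_years: float) -> float:
    """P(at least one event in the window), assuming a Poisson process with the given mean interval."""
    rate = 1.0 / mean_interval_years  # expected events per year
    return 1.0 - math.exp(-rate * window_years)


# Mean interval of about 50,000 years, from the geological record (see text)
print(f"{prob_at_least_one(50_000, 100):.4%}")  # prints 0.1998%
```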

Since anthropogenic risk is a relatively recent phenomenon, humanity's track record of survival cannot provide similar assurances.[23] Humanity has survived only 75 years since the creation of nuclear weapons, and for future technologies there is no track record at all. This has led thinkers like Carl Sagan to conclude that humanity is currently in a ‘time of perils’:[29] a uniquely dangerous period in human history, beginning when humanity first started posing risks to itself through its own actions, in which it is subject to unprecedented levels of risk.[18][30]

Risk estimates

Given the limitations of ordinary observation and modeling, expert elicitation is frequently used instead to obtain probability estimates.[31] In 2008, an informal survey of experts at a conference hosted by the Future of Humanity Institute estimated a 19% risk of human extinction by the year 2100, though given the survey's limitations these results should be taken "with a grain of salt".[22]

| Risk | Estimated probability for human extinction before 2100 |
|---|---|
| Overall probability | 19% |
| Molecular nanotechnology weapons | 5% |
| Superintelligent AI | 5% |
| All wars (including civil wars) | 4% |
| Engineered pandemic | 2% |
| Nuclear war | 1% |
| Nanotechnology accident | 0.5% |
| Natural pandemic | 0.05% |
| Nuclear terrorism | 0.03% |

Table source: Future of Humanity Institute, 2008.[22]

There have been a number of other estimates of existential risk, extinction risk, or a global collapse of civilisation:

- In 1996, John Leslie estimated a 30% risk over the next five centuries (equivalent to around 7% per century, assuming a constant per-century risk).[32]

- In 2002, Nick Bostrom gave the following estimate of existential risk over the long term: ‘My subjective opinion is that setting this probability lower than 25% would be misguided, and the best estimate may be considerably higher.’[33]

- In 2003, Martin Rees estimated a 50% chance of collapse of civilisation in the twenty-first century.[34]

- The Global Challenges Foundation's 2016 annual report estimates the probability of human extinction at no less than 0.05% per year.[35]

- A 2016 survey of AI experts found a median estimate of 5% that human-level AI would cause an outcome that was "extremely bad (e.g. human extinction)".[36]

- In 2020, Toby Ord estimated existential risk over the next century at ‘1 in 6’ in his book The Precipice: Existential Risk and the Future of Humanity.[18][37]

- Metaculus users currently estimate a 3% probability of humanity going extinct before 2100.[38]
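The estimates above are quoted over different spans (per year, per century, over several centuries). A minimal sketch for putting them on a common footing follows; it assumes the risk is constant and independent from one period to the next, a simplification most of these authors do not themselves claim. It converts a per-period probability into a cumulative one and back.

```python
def cumulative(per_period: float, periods: float) -> float:
    """Probability of at least one catastrophe over `periods` periods,
    assuming a constant, independent per-period probability."""
    return 1.0 - (1.0 - per_period) ** periods


def per_period(cumulative_prob: float, periods: float) -> float:
    """Inverse of `cumulative`: the constant per-period probability
    that yields `cumulative_prob` over `periods` periods."""
    return 1.0 - (1.0 - cumulative_prob) ** (1.0 / periods)


# Global Challenges Foundation: at least 0.05% per year -> at least about 4.9% per century
print(f"{cumulative(0.0005, 100):.1%}")
# Toby Ord: 1 in 6 per century -> roughly 0.18% per year
print(f"{per_period(1 / 6, 100):.2%}")
```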

Methodological challenges

Research into the nature and mitigation of global catastrophic risks and existential risks faces a unique set of challenges and is consequently not easily held to the usual standards of scientific rigour.[18] For instance, it is neither feasible nor ethical to study these risks experimentally. Carl Sagan expressed this with regard to nuclear war: “Understanding the long-term consequences of nuclear war is not a problem amenable to experimental verification”.[39] Moreover, many catastrophic risks change rapidly as technology advances and background conditions (such as international relations) change. Another challenge is the general difficulty of accurately predicting the future over long timescales, especially for anthropogenic risks, which depend on complex human political, economic and social systems.[18] In addition to known and tangible risks, unforeseeable black swan extinction events may occur, presenting an additional methodological problem.[18][40]

Lack of historical precedent

Humanity has never suffered an existential catastrophe, and if one were to occur, it would necessarily be unprecedented.[18] Therefore, existential risks pose unique challenges to prediction, even more than other long-term events, because of observation selection effects.[41] Unlike with most events, the failure of a complete extinction event to occur in the past is not evidence against its likelihood in the future: every world that has experienced such an event has no observers left, so regardless of how frequent such events are, no civilization observes an existential catastrophe in its own history.[41] These anthropic issues may partly be avoided by looking at evidence that does not have such selection effects, such as asteroid impact craters on the Moon, or by directly evaluating the likely impact of new technology.[9]
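The selection effect can be illustrated with a toy simulation. The sketch below simulates many independent worlds under two hypothetical per-century extinction probabilities (chosen for illustration only). The fraction of worlds that survive differs sharply between the two cases, but every surviving world's recorded history contains zero extinction events, so observers in either case see the same clean record.

```python
import random


def simulate(p_extinction: float, centuries: int, worlds: int, seed: int = 0) -> tuple[float, int]:
    """Simulate independent worlds facing a constant per-century extinction risk.

    Returns the fraction of worlds that survive and the total number of extinction
    events recorded in the histories that surviving observers can look back on."""
    rng = random.Random(seed)
    observed_histories = []
    for _ in range(worlds):
        history = []
        for _ in range(centuries):
            if rng.random() < p_extinction:
                history.append("extinction")
                break  # the world ends, leaving no observers to study this history
            history.append("survived")
        if "extinction" not in history:
            observed_histories.append(history)
    survival_fraction = len(observed_histories) / worlds
    extinctions_on_record = sum(h.count("extinction") for h in observed_histories)
    return survival_fraction, extinctions_on_record


# Hypothetical low and high per-century risks over 50 centuries
for p in (0.001, 0.05):
    fraction, on_record = simulate(p, centuries=50, worlds=10_000)
    print(f"risk {p}: {fraction:.1%} of worlds survive, extinctions on record: {on_record}")
```

The count on record is zero by construction, which is the point: a surviving observer's own history cannot distinguish the low-risk case from the high-risk one.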

To understand the dynamics of an unprecedented, unrecoverable global civilisational collapse (a type of existential risk), it may be instructive to study the various local civilizational collapses that have occurred throughout human history.[42] For instance, civilizations such as the Roman Empire have ended in a loss of centralized governance and a major civilization-wide loss of infrastructure and advanced technology. However, these examples suggest that societies are fairly resilient to catastrophe; for example, medieval Europe survived the Black Death without suffering anything resembling a civilizational collapse despite losing 25 to 50 percent of its population.[43]

Incentives and coordination

There are economic reasons that can explain why so little effort is going into existential risk reduction. It is a global public good, so we should expect it to be undersupplied by markets.[9] Even if a large nation invests in risk mitigation measures, that nation will enjoy only a small fraction of the benefit of doing so. Furthermore, existential risk reduction is an intergenerational global public good, since most of the benefits of existential risk reduction would be enjoyed by future generations, and though these future people would in theory perhaps be willing to pay substantial sums for existential risk reduction, no mechanism for such a transaction exists.[9]
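The free-rider logic can be made concrete with a toy model. Assume, purely for illustration, a mitigation project with a cost and a total global benefit larger than that cost, and suppose a single nation captures only a share of the benefit. A self-interested nation acting alone invests only if its share of the benefit covers the cost, so a project worth doing for the world as a whole can go unfunded when each nation's share is small. All names and numbers below are hypothetical, and the model ignores the further intergenerational problem described above.

```python
from dataclasses import dataclass


@dataclass
class Project:
    cost: float            # cost borne by whoever funds the project
    global_benefit: float  # total benefit summed over everyone who gains


def worth_it_globally(p: Project) -> bool:
    """The project is worthwhile for the world if total benefit exceeds cost."""
    return p.global_benefit > p.cost


def nation_invests_alone(p: Project, benefit_share: float) -> bool:
    """A self-interested nation counts only the share of the benefit it captures."""
    return benefit_share * p.global_benefit >= p.cost


# Hypothetical numbers: benefits ten times the cost, but the funding nation captures only 5%
project = Project(cost=1.0, global_benefit=10.0)
print(worth_it_globally(project))           # True
print(nation_invests_alone(project, 0.05))  # False: 0.05 * 10 = 0.5 < 1
```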

Cognitive biases

Numerous cognitive biases can influence people's judgment of the importance of existential risks, including scope insensitivity, hyperbolic discounting, the availability heuristic, the conjunction fallacy, the affect heuristic, and the overconfidence effect.[44]

Scope insensitivity influences how bad people consider the extinction of the human race to be. For example, when people are motivated to donate money to altruistic causes, the quantity they are willing to give does not increase linearly with the magnitude of the issue: people are roughly as willing to prevent the deaths of 200,000 or 2,000 birds.[45] Similarly, people are often more concerned about threats to individuals than to larger groups.[44]

Moral importance of existential risk

In one of the earliest discussions of ethics of human extinction, Derek Parfit offers the following thought experiment:[46]

I believe that if we destroy mankind, as we now can, this outcome will be much worse than most people think. Compare three outcomes:

(1) Peace.

(2) A nuclear war that kills 99% of the world's existing population.

(3) A nuclear war that kills 100%.

(2) would be worse than (1), and (3) would be worse than (2). Which is the greater of these two differences? Most people believe that the greater difference is between (1) and (2). I believe that the difference between (2) and (3) is very much greater.

— Derek Parfit

The scale of what is lost in an existential catastrophe is determined by humanity's long-term potential: what humanity could expect to achieve if it survived.[18] From a utilitarian perspective, the value of protecting humanity is the product of its duration (how long humanity survives), its size (how many humans there are over time), and its quality (on average, how good life is for future people).[18]: 273 [47] On average, species survive for around a million years before going extinct. Parfit points out that the Earth will remain habitable for around a billion years.[46] And these might be lower bounds on our potential: if humanity is able to expand beyond Earth, it could greatly increase the human population and survive for trillions of years.[17][48]: 21 The potential that would be lost were humanity to go extinct is thus very large, so reducing existential risk by even a small amount would have very significant moral value.[9][49]
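The product form described above can be written compactly. In the sketch below, D (duration), S (average size) and Q (average quality of life) are symbols introduced here for illustration, p is the probability of an existential catastrophe, and it is assumed for simplicity that such a catastrophe forfeits all of V while survival realizes it. Because the gain from a small reduction Δp in that probability is Δp multiplied by V, a very large V makes even a tiny Δp valuable, which is the step behind the conclusion above.

```latex
\begin{aligned}
V &= D \times S \times Q \\
\mathbb{E}[\text{value}] &= (1 - p)\, V \\
\Delta\mathbb{E}[\text{value}] &= \Delta p \cdot V
\end{aligned}
```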

Some economists and philosophers have defended views, including exponential discounting and person-affecting views of population ethics, on which future people do not matter (or matter much less), morally speaking.[50] While these views are controversial,[25][51][52] even their proponents would agree that an existential catastrophe would be among the worst things imaginable. It would cut short the lives of eight billion presently existing people, destroying all of what makes their lives valuable, and most likely subjecting many of them to profound suffering. So even setting aside the value of future generations, there may be strong reasons to reduce existential risk, grounded in concern for presently existing people.[53]
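To see why exponential discounting makes future people matter much less, note that a constant annual discount rate r weights a benefit t years away by 1/(1 + r)^t. The sketch below uses a hypothetical rate of 3% per year, chosen for illustration only; at that rate, a benefit 500 years away receives less than one millionth of the weight of the same benefit today.

```python
def discount_factor(annual_rate: float, years: float) -> float:
    """Weight given today to a benefit `years` in the future under exponential discounting."""
    return (1.0 + annual_rate) ** -years


RATE = 0.03  # hypothetical discount rate, for illustration only
for years in (0, 100, 500, 1000):
    print(f"{years:>5} years: weight {discount_factor(RATE, years):.2e}")
```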

Beyond utilitarianism, other moral perspectives lend support to the importance of reducing existential risk. An existential catastrophe would destroy more than just humanity—it would destroy all cultural artefacts, languages, and traditions, and many of the things we value.[18][54] So moral viewpoints on which we have duties to protect and cherish things of value would see this as a huge loss that should be avoided.[18] One can also consider reasons grounded in duties to past generations. For instance, Edmund Burke writes of a "partnership ... between those who are living, those who are dead, and those who are to be born".[55] If one takes seriously the debt humanity owes to past generations, Ord argues the best way of repaying it might be to 'pay it forward', and ensure that humanity's inheritance is passed down to future generations.[18]: 49–51

There are several economists who have discussed the importance of global catastrophic risks. For example, Martin Weitzman argues that most of the expected economic damage from climate change may come from the small chance that warming greatly exceeds the mid-range expectations, resulting in catastrophic damage.[56] Richard Posner has argued that humanity is doing far too little, in general, about small, hard-to-estimate risks of large-scale catastrophes.[57]

Potential sources of risk

Some sources of catastrophic risk are anthropogenic (man-made), such as global warming,[58] environmental degradation, engineered pandemics and nuclear war.[59] On the other hand, some risks are non-anthropogenic or natural, such as meteor impacts or supervolcanoes.

Anthropogenic

Many experts—including those at the Future of Humanity Institute at the University of Oxford and the Centre for the Study of Existential Risk at the University of Cambridge—prioritize anthropogenic over natural risks due to their much greater estimated likelihood.[5][6][9][24] They are especially concerned by, and consequently focus on, risks posed by advanced technology, such as artificial intelligence and biotechnology.[60][61]

Artificial intelligence

It has been suggested that if AI systems rapidly become super-intelligent, they may take unforeseen actions or out-compete humanity.[62] According to philosopher Nick Bostrom, it is possible that the first super-intelligence to emerge would be able to bring about almost any possible outcome it valued, as well as to foil virtually any attempt to prevent it from achieving its objectives.[63] Thus, even a super-intelligence indifferent to humanity could be dangerous if it perceived humans as an obstacle to unrelated goals. In Bostrom's book Superintelligence, he defines this as the control problem.[64] Physicist Stephen Hawking, Microsoft founder Bill Gates, and SpaceX founder Elon Musk have echoed these concerns, with Hawking theorizing that such an AI could "spell the end of the human race".[65]

In 2009, the Association for the Advancement of Artificial Intelligence (AAAI) hosted a conference to discuss whether computers and robots might be able to acquire any sort of autonomy, and how much these abilities might pose a threat or hazard. They noted that some robots have acquired various forms of semi-autonomy, including being able to find power sources on their own and being able to independently choose targets to attack with weapons. They also noted that some computer viruses can evade elimination and have achieved "cockroach intelligence". They noted that self-awareness as depicted in science fiction is probably unlikely, but there are other potential hazards and pitfalls.[66] Various media sources and scientific groups have noted separate trends in differing areas which might together result in greater robotic functionalities and autonomy, and which pose some inherent concerns.[67][68]

A survey of AI experts estimated that the chance of human-level machine intelligence having an "extremely bad (e.g., human extinction)" long-term effect on humanity is 5%.[69] A 2008 survey by the Future of Humanity Institute estimated a 5% probability of extinction by super-intelligence by 2100.[22] Eliezer Yudkowsky believes risks from artificial intelligence are harder to predict than any other known risks due to bias from anthropomorphism. Since people base their judgments of artificial intelligence on their own experience, he claims they underestimate the potential power of AI.[70]

Biotechnology

Biotechnology can pose a global catastrophic risk in the form of bioengineered organisms (viruses, bacteria, fungi, plants or animals). In many cases the organism will be a pathogen of humans, livestock, crops or other organisms we depend upon (e.g. pollinators or gut bacteria). However, any organism able to catastrophically disrupt ecosystem functions, e.g. highly competitive weeds, outcompeting essential crops, poses a biotechnology risk.

A biotechnology catastrophe may be caused by accidentally releasing a genetically engineered organism from controlled environments, by the planned release of such an organism which then turns out to have unforeseen and catastrophic interactions with essential natural or agro-ecosystems, or by intentional usage of biological agents in biological warfare or bioterrorism attacks.[71] Pathogens may be intentionally or unintentionally genetically modified to change virulence and other characteristics.[71] For example, a group of Australian researchers unintentionally changed characteristics of the mousepox virus while trying to develop a virus to sterilize rodents.[71] The modified virus became highly lethal even in vaccinated and naturally resistant mice.[72][73] The technological means to genetically modify virus characteristics are likely to become more widely available in the future if not properly regulated.[71]

Terrorist applications of biotechnology have historically been infrequent. To what extent this is due to a lack of capabilities or motivation is not resolved.[71] However, given current development, more risk from novel, engineered pathogens is to be expected in the future.[71] Exponential growth has been observed in the biotechnology sector, and Nouri and Chyba predict that this will lead to major increases in biotechnological capabilities in the coming decades.[71] They argue that risks from biological warfare and bioterrorism are distinct from nuclear and chemical threats because biological pathogens are easier to mass-produce and their production is hard to control (especially as the technological capabilities are becoming available even to individual users).[71] In 2008, a survey by the Future of Humanity Institute estimated a 2% probability of extinction from engineered pandemics by 2100.[22]

Nouri and Chyba propose three categories of measures to reduce risks from biotechnology and natural pandemics: regulation or prevention of potentially dangerous research, improved recognition of outbreaks, and development of facilities to mitigate disease outbreaks (e.g. better and/or more widely distributed vaccines).[71]

Cyberattack

Cyberattacks have the potential to destroy everything from personal data to electric grids. Christine Peterson, co-founder and past president of the Foresight Institute, believes a cyberattack on electric grids has the potential to be a catastrophic risk. She notes that little has been done to mitigate such risks, and that mitigation could take several decades of readjustment.[74]

Environmental disaster

An environmental or ecological disaster, such as world crop failure and collapse of ecosystem services, could be induced by the present trends of overpopulation, economic development, and non-sustainable agriculture. Most environmental scenarios involve one or more of the following: Holocene extinction event,[75] scarcity of water that could lead to approximately half the Earth's population being without safe drinking water, pollinator decline, overfishing, massive deforestation, desertification, climate change, or massive water pollution episodes. One such threat, detected in the early 21st century, is colony collapse disorder,[76] a phenomenon that might foreshadow the imminent extinction[77] of the Western honeybee. As the bee plays a vital role in pollination, its extinction would severely disrupt the food chain.

An October 2017 report published in The Lancet stated that toxic air, water, soils, and workplaces were collectively responsible for nine million deaths worldwide in 2015, particularly from air pollution which was linked to deaths by increasing susceptibility to non-infectious diseases, such as heart disease, stroke, and lung cancer.[78] The report warned that the pollution crisis was exceeding "the envelope on the amount of pollution the Earth can carry" and "threatens the continuing survival of human societies".[78]

A May 2020 analysis published in Scientific Reports found that if deforestation and resource consumption continue at current rates they could culminate in a "catastrophic collapse in human population" and possibly "an irreversible collapse of our civilization" within the next several decades. The study says humanity should pass from a civilization dominated by the economy to a "cultural society" that "privileges the interest of the ecosystem above the individual interest of its components, but eventually in accordance with the overall communal interest." The authors also note that "while violent events, such as global war or natural catastrophic events, are of immediate concern to everyone, a relatively slow consumption of the planetary resources may be not perceived as strongly as a mortal danger for the human civilization."[79][80]

Experimental technology accident

Nick Bostrom suggested that in the pursuit of knowledge, humanity might inadvertently create a device that could destroy Earth and the Solar System.[81] Investigations in nuclear and high-energy physics could create unusual conditions with catastrophic consequences. For example, scientists worried that the first nuclear test might ignite the atmosphere.[82][83] Others worried that the Relativistic Heavy Ion Collider (RHIC)[84] or the Large Hadron Collider might start a chain-reaction global disaster involving black holes, strangelets, or false vacuum states. These particular concerns have been challenged,[85][86][87][88] but the general concern remains.

Biotechnology could lead to the creation of a pandemic; chemical warfare could be taken to an extreme; and nanotechnology could lead to grey goo, in which out-of-control self-replicating robots consume all living matter on Earth while building more of themselves. In each case, this could happen either deliberately or by accident.[89]

Global warming

Global warming refers to the warming caused by human technology since the 19th century or earlier. Projections of future climate change suggest further global warming, sea level rise, and an increase in the frequency and severity of some extreme weather events and weather-related disasters. Effects of global warming include loss of biodiversity, stresses to existing food-producing systems, increased spread of known infectious diseases such as malaria, and rapid mutation of microorganisms. In November 2017, a statement by 15,364 scientists from 184 countries indicated that increasing levels of greenhouse gases from use of fossil fuels, human population growth, deforestation, and overuse of land for agricultural production, particularly by farming ruminants for meat consumption, are trending in ways that forecast an increase in human misery over coming decades.[3]

Mineral resource exhaustion

Romanian American economist Nicholas Georgescu-Roegen, a progenitor in economics and the paradigm founder of ecological economics, has argued that the carrying capacity of Earth—that is, Earth's capacity to sustain human populations and consumption levels—is bound to decrease sometime in the future as Earth's finite stock of mineral resources is presently being extracted and put to use; and consequently, that the world economy as a whole is heading towards an inevitable future collapse, leading to the demise of human civilization itself.[91]: 303f Ecological economist and steady-state theorist Herman Daly, a student of Georgescu-Roegen, has propounded the same argument by asserting that "... all we can do is to avoid wasting the limited capacity of creation to support present and future life [on Earth]."[92]: 370

Ever since Georgescu-Roegen and Daly published these views, various scholars in the field have been discussing the existential impossibility of allocating earth's finite stock of mineral resources evenly among an unknown number of present and future generations. This number of generations is likely to remain unknown to us, as there is no way—or only little way—of knowing in advance if or when mankind will ultimately face extinction. In effect, any conceivable intertemporal allocation of the stock will inevitably end up with universal economic decline at some future point.[93]: 253–256 [94]: 165 [95]: 168–171 [96]: 150–153 [97]: 106–109 [98]: 546–549 [99]: 142–145 [100]

Nanotechnology

Many nanoscale technologies are in development or currently in use.[101] The only one that appears to pose a significant global catastrophic risk is molecular manufacturing, a technique that would make it possible to build complex structures at atomic precision.[102] Molecular manufacturing requires significant advances in nanotechnology, but once achieved could produce highly advanced products at low costs and in large quantities in nanofactories of desktop proportions.[101][102] When nanofactories gain the ability to produce other nanofactories, production may only be limited by relatively abundant factors such as input materials, energy and software.[101]
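The claim that self-replicating nanofactories would be limited mainly by inputs rests on exponential growth. The toy sketch below assumes, hypothetically, that every factory builds one copy of itself per replication cycle and that each factory consumes a fixed amount of feedstock; the budget and per-factory figures are illustrative only. The factory count doubles each cycle until the available material, rather than the number of factories, becomes the binding constraint.

```python
def factory_count(generations: int, material_budget: float, material_per_factory: float) -> list[int]:
    """Number of nanofactories after each replication cycle, if every factory builds one copy
    of itself per cycle (hypothetical) until the material budget runs out."""
    max_factories = int(material_budget // material_per_factory)
    counts = [1]
    for _ in range(generations):
        counts.append(min(counts[-1] * 2, max_factories))
    return counts


print(factory_count(generations=12, material_budget=1000.0, material_per_factory=1.0))
# prints [1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1000, 1000, 1000]
```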

Molecular manufacturing could be used to cheaply produce, among many other products, highly advanced, durable weapons.[101] Being equipped with compact computers and motors, these could be increasingly autonomous and have a large range of capabilities.[101]

Chris Phoenix and Mike Treder classify catastrophic risks posed by nanotechnology into three categories:

- From augmenting the development of other technologies such as AI and biotechnology.

- By enabling mass-production of potentially dangerous products that cause risk dynamics (such as arms races) depending on how they are used.

- From uncontrolled self-perpetuating processes with destructive effects.

Several researchers say the bulk of risk from nanotechnology comes from its potential to lead to war, arms races and destructive global government.[72][101][103] Several reasons have been suggested why the availability of nanotech weaponry may, with significant likelihood, lead to unstable arms races (compared to, e.g., nuclear arms races):

- A large number of players may be tempted to enter the race since the threshold for doing so is low;[101]

- The ability to make weapons with molecular manufacturing will be cheap and easy to hide;[101]

- Therefore, lack of insight into the other parties' capabilities can tempt players to arm out of caution or to launch preemptive strikes;[101][104]

- Molecular manufacturing may reduce dependency on international trade,[101] a potential peace-promoting factor;

- Wars of aggression may pose a smaller economic threat to the aggressor since manufacturing is cheap and humans may not be needed on the battlefield.[101]

Since self-regulation by all state and non-state actors seems hard to achieve,[105] measures to mitigate war-related risks have mainly been proposed in the area of international cooperation.[101][106] International infrastructure may be expanded, giving more sovereignty to the international level. This could help coordinate efforts for arms control. International institutions dedicated specifically to nanotechnology (perhaps modelled on the International Atomic Energy Agency, or IAEA) or to general arms control may also be designed.[106] Players may also cooperate on differential technological progress, advancing defensive technologies ahead of offensive ones, a policy that they should usually favour.[101] The Center for Responsible Nanotechnology also suggests some technical restrictions.[107] Improved transparency regarding technological capabilities may be another important facilitator for arms control.

Grey goo is another catastrophic scenario, which was proposed by Eric Drexler in his 1986 book Engines of Creation[108] and has been a theme in mainstream media and fiction.[109][110] This scenario involves tiny self-replicating robots that consume the entire biosphere using it as a source of energy and building blocks. Nowadays, however, nanotech experts—including Drexler—discredit the scenario. According to Phoenix, a "so-called grey goo could only be the product of a deliberate and difficult engineering process, not an accident".[111]

Warfare and mass destruction

The scenarios that have been explored most frequently are nuclear warfare and doomsday devices. Mistakenly launching a nuclear attack in response to a false alarm is one possible scenario; this nearly happened during the 1983 Soviet nuclear false alarm incident. Although the probability of a nuclear war per year is slim, Professor Martin Hellman has described it as inevitable in the long run; unless the probability approaches zero, inevitably there will come a day when civilization's luck runs out.[112] During the Cuban Missile Crisis, U.S. president John F. Kennedy estimated the odds of nuclear war at "somewhere between one out of three and even".[113] The United States and Russia have a combined arsenal of 14,700 nuclear weapons,[114] and there is an estimated total of 15,700 nuclear weapons in existence worldwide.[114] Beyond nuclear weapons, other military threats to humanity include biological warfare (BW). By contrast, chemical warfare, while able to create multiple local catastrophes, is unlikely to create a global one.
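Hellman's argument rests on the compounding of a small annual risk. The sketch below assumes, for illustration, a constant and independent annual probability of nuclear war; the 1% figure is hypothetical and not taken from the text. The chance of avoiding war for n years is then (1 − p)^n, which approaches zero as n grows unless p itself approaches zero.

```python
def prob_war_within(annual_prob: float, years: int) -> float:
    """Chance of at least one nuclear war within `years`, for a constant, independent annual risk."""
    return 1.0 - (1.0 - annual_prob) ** years


ANNUAL_PROB = 0.01  # hypothetical annual probability, for illustration only
for years in (10, 100, 1000):
    print(f"{years:>4} years: {prob_war_within(ANNUAL_PROB, years):.1%}")
```

This is why the argument turns on driving p toward zero rather than merely lowering it: halving p roughly doubles the expected waiting time (about 1/p years) but leaves the long-run limit unchanged.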

Nuclear war could yield unprecedented human death tolls and habitat destruction. Detonating large numbers of nuclear weapons would have immediate, short-term and long-term effects on the climate, causing cold weather and reduced sunlight and photosynthesis[115] that may generate significant upheaval in advanced civilizations.[116] However, while popular perception sometimes takes nuclear war as "the end of the world", experts assign low probability to human extinction from nuclear war.[117][118] In 1982, Brian Martin estimated that a US–Soviet nuclear exchange might kill 400–450 million directly, mostly in the United States, Europe and Russia, and maybe several hundred million more through follow-up consequences in those same areas.[117] In 2008, a survey by the Future of Humanity Institute estimated a 4% probability of extinction from warfare by 2100, with a 1% chance of extinction from nuclear warfare.[22]

World population and agricultural crisis

The 20th century saw a rapid increase in human population due to medical developments and massive increases in agricultural productivity[119] such as the Green Revolution.[120] Between 1950 and 1984, as the Green Revolution transformed agriculture around the globe, world grain production increased by 250%. The Green Revolution helped food production keep pace with, or actually enabled, worldwide population growth. The energy for the Green Revolution was provided by fossil fuels in the form of fertilizers (natural gas), pesticides (oil), and hydrocarbon-fueled irrigation.[121] David Pimentel, professor of ecology and agriculture at Cornell University, and Mario Giampietro, senior researcher at the National Research Institute on Food and Nutrition (INRAN), in their 1994 study Food, Land, Population and the U.S. Economy, place the maximum U.S. population for a sustainable economy at 200 million. According to the study, to achieve a sustainable economy and avert disaster, the United States must reduce its population by at least one-third, and world population will have to be reduced by two-thirds.[122]

The authors of this study believe that this agricultural crisis will begin to affect the world after 2020 and will become critical after 2050. Geologist Dale Allen Pfeiffer claims that the coming decades could see spiraling food prices without relief and global mass starvation on an unprecedented scale.[123][124]

Since supplies of petroleum and natural gas are essential to modern agricultural techniques, a fall in global oil supplies (see peak oil for global concerns) could cause spiking food prices and unprecedented famine in the coming decades.[125][126]

Wheat is humanity's third-most-produced cereal. Extant fungal infections such as Ug99[127] (a kind of stem rust) can cause 100% crop losses in most modern varieties. Little or no treatment is possible and infection spreads on the wind. Should the world's large grain-producing areas become infected, the ensuing crisis in wheat availability would lead to price spikes and shortages in other food products.[128]

Non-anthropogenic

Of all species that have ever lived, 99% have gone extinct.[129] Earth has experienced numerous mass extinction events, in which up to 96% of all species present at the time were eliminated.[129] A notable example is the K-T extinction event, which killed the dinosaurs. The types of threats posed by nature have been argued to be relatively constant, though this has been disputed.[130]

Asteroid impact

Several asteroids have collided with Earth in recent geological history. The Chicxulub asteroid, for example, was about six miles in diameter and is theorized to have caused the extinction of non-avian dinosaurs at the end of the Cretaceous. No sufficiently large asteroid is currently known to be in an Earth-crossing orbit; however, a comet large enough to cause human extinction could impact the Earth, though the annual probability may be less than 10⁻⁸.[131] Geoscientist Brian Toon estimates that while a few people, such as "some fishermen in Costa Rica", could plausibly survive a six-mile meteorite, a sixty-mile meteorite would be large enough to "incinerate everybody".[132] Asteroids with a diameter of around 1 km have impacted the Earth on average once every 500,000 years; these are probably too small to pose an extinction risk, but might kill billions of people.[131][133] Larger asteroids are less common. Small near-Earth asteroids are regularly observed and can impact anywhere on the Earth, injuring local populations.[134] As of 2013, Spaceguard estimated that it had identified 95% of all NEOs over 1 km in size.[135]
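The average recurrence interval quoted above can be turned into a probability over a chosen time window. This is a minimal sketch, assuming impacts follow a Poisson process with a constant average rate (a common simplification, not a claim made by the cited sources); the function name is illustrative.

```python
import math

def impact_probability(mean_interval_years: float, window_years: float) -> float:
    """Probability of at least one impact within `window_years`, modelling
    impacts as a Poisson process with the given mean recurrence interval."""
    rate = 1.0 / mean_interval_years  # expected impacts per year
    # 1 - exp(-rate * t), computed with expm1 for accuracy at small values
    return -math.expm1(-rate * window_years)

# ~1 km impactors: on average once every 500,000 years (figure from the text).
for window in (100, 1_000, 10_000):
    print(f"{window:>6} years: {impact_probability(500_000, window):.4%}")
```

Under this assumption, the chance of a roughly 1 km impact in any given century is about 0.02%, and over a full 500,000-year interval it is about 63% rather than certain.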

In April 2018, the B612 Foundation reported "It's a 100 per cent certain we'll be hit [by a devastating asteroid], but we're not 100 per cent sure when."[136] Also in 2018, physicist Stephen Hawking, in his final book Brief Answers to the Big Questions, considered an asteroid collision to be the biggest threat to the planet.[137][138][139] In June 2018, the US National Science and Technology Council warned that America is unprepared for an asteroid impact event, and has developed and released the "National Near-Earth Object Preparedness Strategy Action Plan" to better prepare.[140][141][142][143][144] According to expert testimony in the United States Congress in 2013, NASA would require at least five years of preparation before a mission to intercept an asteroid could be launched.[145]

Cosmic threats

A number of astronomical threats have been identified. Massive objects, such as a star, large planet or black hole, could be catastrophic if a close encounter occurred in the Solar System. In April 2008, it was announced that two simulations of long-term planetary movement, one at the Paris Observatory and the other at the University of California, Santa Cruz, indicate a 1% chance that Mercury's orbit could be made unstable by Jupiter's gravitational pull sometime during the lifespan of the Sun. Were this to happen, the simulations suggest a collision with Earth could be one of four possible outcomes (the others being Mercury colliding with the Sun, colliding with Venus, or being ejected from the Solar System altogether). If Mercury were to collide with Earth, all life on Earth could be obliterated: an asteroid 15 km wide is believed to have caused the extinction of the non-avian dinosaurs, whereas Mercury is 4,879 km in diameter.[146]
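The size comparison can be made concrete: for spheres, volume scales with the cube of the diameter. This minimal sketch uses only the two diameters given above and assumes both bodies are spheres. It compares volumes only, because the text gives no densities, so it is not a mass or impact-energy estimate.

```python
def volume_ratio(d_large_km: float, d_small_km: float) -> float:
    """Ratio of two sphere volumes; volume scales with diameter cubed."""
    return (d_large_km / d_small_km) ** 3

# Mercury (4,879 km) versus the ~15 km impactor mentioned in the text.
ratio = volume_ratio(4_879, 15)
print(f"Mercury has roughly {ratio:.2e} times the impactor's volume")
```

The ratio comes out at roughly 3.4 × 10⁷.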

If our universe lies within a false vacuum, a bubble of lower-energy vacuum could form, by chance or otherwise, and catalyze the conversion of our universe to a lower energy state in a volume expanding at nearly the speed of light, destroying all that we know without warning. Such an occurrence is called vacuum decay.[148][149]

Another cosmic threat is a gamma-ray burst, typically produced by a supernova when a star collapses inward on itself and then "bounces" outward in a massive explosion. Under certain circumstances, these events are thought to produce massive bursts of gamma radiation emanating outward from the axis of rotation of the star. If such an event were to occur oriented towards the Earth, the massive amounts of gamma radiation could significantly affect the Earth's atmosphere and pose an existential threat to all life. Such a gamma-ray burst may have been the cause of the Ordovician–Silurian extinction events. Neither this scenario nor the destabilization of Mercury's orbit is likely in the foreseeable future.[150]

A powerful solar flare or solar superstorm, or a drastic and unusual decrease or increase in the Sun's power output, could have severe consequences for life on Earth.[151][152]

Astrophysicists currently calculate that in a few billion years the Earth will probably be swallowed by the expansion of the Sun into a red giant star.[153][154]

Extraterrestrial invasion

Intelligent extraterrestrial life, if existent, could invade Earth either to exterminate and supplant human life, enslave it under a colonial system, steal the planet's resources, or destroy the planet altogether.[155]

Although alien life has never been detected, scientists such as Carl Sagan have postulated that the existence of extraterrestrial life is very likely. In 1969, the "Extra-Terrestrial Exposure Law" was added to the United States Code of Federal Regulations (Title 14, Section 1211) in response to the possibility of biological contamination resulting from the U.S. Apollo Space Program. It was removed in 1991.[156] Scientists consider such a scenario technically possible, but unlikely.[157]

An article in The New York Times discussed the possible threats to humanity of intentionally sending messages aimed at extraterrestrial life into the cosmos in the context of SETI efforts. Several renowned public figures, such as Stephen Hawking and Elon Musk, have argued against sending such messages on the grounds that extraterrestrial civilizations with technology are probably far more advanced than humanity and could pose an existential threat to humanity.[158]

Natural pandemic

There are numerous historical examples of pandemics[159] that have had a devastating effect on a large number of people. The present, unprecedented scale and speed of human movement make it more difficult than ever to contain an epidemic through local quarantines, and other sources of uncertainty and the evolving nature of the risk mean natural pandemics may pose a realistic threat to human civilization.[130]

There are several classes of argument about the likelihood of pandemics. One is historical: the limited size of past pandemics is taken as evidence that larger pandemics are unlikely. This argument has been disputed on grounds including the changing risk due to changing population and behavioral patterns among humans, the limited historical record, and the existence of an anthropic bias.[130]

Another argument is based on an evolutionary model that predicts that naturally evolving pathogens will ultimately develop an upper limit to their virulence.[160] This is because pathogens with high enough virulence quickly kill their hosts and reduce their chances of spreading the infection to new hosts or carriers.[161] This model has limits, however, because the fitness advantage of limited virulence is primarily a function of a limited number of hosts. Any pathogen with a high virulence, high transmission rate and long incubation time may have already caused a catastrophic pandemic before virulence is ultimately limited through natural selection. Additionally, a pathogen that infects humans as a secondary host and primarily infects another species (a zoonosis) has no constraints on its virulence in people, since the accidental secondary infections do not affect its evolution.[162] Lastly, in models where virulence level and rate of transmission are related, high levels of virulence can evolve.[163] Virulence is instead limited by the existence of complex populations of hosts with different susceptibilities to infection, or by some hosts being geographically isolated.[160] The size of the host population and competition between different strains of pathogens can also alter virulence.[164]

Neither of these arguments applies to bioengineered pathogens, which pose entirely different pandemic risks. Experts have concluded that "Developments in science and technology could significantly ease the development and use of high consequence biological weapons," and these "highly virulent and highly transmissible [bio-engineered pathogens] represent new potential pandemic threats."[165]

Natural climate change

Climate change refers to a lasting change in the Earth's climate. The climate has ranged from ice ages to warmer periods when palm trees grew in Antarctica. It has been hypothesized that there was also a period called "snowball Earth" when all the oceans were covered in a layer of ice. These global climatic changes occurred slowly, and the climate became more stable near the end of the last major ice age. However, abrupt climate change on the decade time scale has occurred regionally. A natural variation into a new climate regime (colder or hotter) could pose a threat to civilization.[166][167]

In the history of the Earth, many ice ages are known to have occurred. An ice age would have a serious impact on civilization because vast areas of land (mainly in North America, Europe, and Asia) could become uninhabitable. Currently, the world is in an interglacial period within a much older glacial event. The last glacial expansion ended about 10,000 years ago, and all civilizations evolved later than this. Scientists do not predict that a natural ice age will occur anytime soon.[citation needed] The amount of heat-trapping gases emitted into Earth's oceans and atmosphere will prevent the next ice age, which otherwise would begin in around 50,000 years, and likely more glacial cycles.[168][169]

Volcanism

A geological event such as massive flood basalt volcanism or the eruption of a supervolcano[170] could lead to a so-called volcanic winter, similar to a nuclear winter. One such event, the Toba eruption,[171] occurred in Indonesia about 71,500 years ago. According to the Toba catastrophe theory,[172] the event may have reduced human populations to only a few tens of thousands of individuals. Yellowstone Caldera is another such supervolcano, having undergone 142 or more caldera-forming eruptions in the past 17 million years.[173] A massive volcanic eruption would eject extraordinary volumes of volcanic dust and toxic and greenhouse gases into the atmosphere, with serious effects on global climate: either extreme global cooling (volcanic winter if short-term, an ice age if long-term) or global warming (if greenhouse gases were to prevail).

When the supervolcano at Yellowstone last erupted 640,000 years ago, the thinnest layers of the ash ejected from the caldera spread over most of the United States west of the Mississippi River and part of northeastern Mexico. The magma covered much of what is now Yellowstone National Park and extended beyond, covering much of the ground from the Yellowstone River in the east to Idaho Falls in the west, with some of the flows extending north beyond Mammoth Hot Springs.[174]

According to a recent study, if the Yellowstone caldera erupted again as a supervolcano, an ash layer one to three millimeters thick could be deposited as far away as New York, enough to "reduce traction on roads and runways, short out electrical transformers and cause respiratory problems". There would be centimeters of thickness over much of the U.S. Midwest, enough to disrupt crops and livestock, especially if it happened at a critical time in the growing season. The worst-affected city would likely be Billings, Montana, population 109,000, which the model predicted would be covered with ash estimated as 1.03 to 1.8 meters thick.[175]

The main long-term effect would be global climate change, reducing global temperatures by about 5–15 °C for a decade, together with the direct effects of ash deposits on crops. A large supervolcano like Toba would deposit one or two meters of ash over an area of several million square kilometers (1000 cubic kilometers is equivalent to a one-meter thickness of ash spread over a million square kilometers). If that happened in a densely populated agricultural area, such as India, it could destroy one or two seasons of crops for two billion people.[176]
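The parenthetical conversion above is unit arithmetic: the volume of a uniform layer is its thickness times its area, with metres converted to kilometres. This is a minimal sketch; the function names are illustrative, and a uniform, flat layer is assumed.

```python
def ash_volume_km3(thickness_m: float, area_km2: float) -> float:
    """Volume in km^3 of a uniform ash layer (metres -> km by dividing by 1000)."""
    return thickness_m * area_km2 / 1000.0

def ash_thickness_m(volume_km3: float, area_km2: float) -> float:
    """Average thickness in metres if `volume_km3` is spread evenly over `area_km2`."""
    return volume_km3 * 1000.0 / area_km2

# The article's equivalence: 1 m over one million km^2 is 1000 km^3.
print(ash_volume_km3(1.0, 1_000_000))
print(ash_thickness_m(1000.0, 1_000_000))
```

For example, 2 m of ash over a hypothetical 3 million km² would correspond to 6000 km³.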

However, Yellowstone shows no signs of a supereruption at present, and it is not certain that a future supereruption will occur there.[177][178]

Research published in 2011 found evidence that massive volcanic eruptions caused massive coal combustion, supporting models for the significant generation of greenhouse gases. Researchers have suggested that massive volcanic eruptions through coal beds in Siberia would generate significant greenhouse gases and cause a runaway greenhouse effect.[179] Massive eruptions can also throw enough pyroclastic debris and other material into the atmosphere to partially block out the sun and cause a volcanic winter, as happened on a smaller scale in 1816 following the eruption of Mount Tambora, the so-called Year Without a Summer. Such an eruption might cause the immediate deaths of millions of people several hundred miles from the eruption, and perhaps billions of deaths worldwide, due to the failure of the monsoons,[180] resulting in major crop failures causing starvation on a profound scale.[180]

A much more speculative concept is the verneshot: a hypothetical volcanic eruption caused by the buildup of gas deep underneath a craton. Such an event may be forceful enough to launch an extreme amount of material from the crust and mantle into a sub-orbital trajectory.

Proposed mitigation

Defense in depth is a useful framework for categorizing risk mitigation measures into three layers of defense:[181]

- Prevention: Reducing the probability of a catastrophe occurring in the first place. Example: Measures to prevent outbreaks of new highly infectious diseases.

- Response: Preventing the scaling of a catastrophe to the global level. Example: Measures to prevent escalation of a small-scale nuclear exchange into an all-out nuclear war.

- Resilience: Increasing humanity's resilience (against extinction) when faced with global catastrophes. Example: Measures to increase food security during a nuclear winter.

Human extinction is most likely when all three defenses are weak, that is, "by risks we are unlikely to prevent, unlikely to successfully respond to, and unlikely to be resilient against".[181]
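One way to read the three-layer framework is multiplicatively: a catastrophe leads to extinction only if it is not prevented, the response fails, and humanity is not resilient. This is a minimal sketch, assuming the three failure probabilities are independent (a simplification), with hypothetical input values; the function name is illustrative.

```python
from math import prod

def extinction_probability(p_not_prevented: float,
                           p_response_fails: float,
                           p_not_resilient: float) -> float:
    """Chance that a catastrophe passes all three defensive layers,
    assuming the layers fail independently of one another."""
    return prod((p_not_prevented, p_response_fails, p_not_resilient))

# Hypothetical failure probabilities of 10% for each layer.
print(extinction_probability(0.1, 0.1, 0.1))
# Strengthening only the prevention layer (10% -> 5%) halves the result.
print(extinction_probability(0.05, 0.1, 0.1))
```

Because the terms multiply, halving the failure probability of any one layer halves the overall figure, and the product is large only when all three layers are weak, which matches the quoted conclusion.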

The unprecedented nature of existential risks poses a special challenge in designing risk mitigation measures since humanity will not be able to learn from a track record of previous events.[18]

Planetary management and respecting planetary boundaries have been proposed as approaches to preventing ecological catastrophes. Within the scope of these approaches, the field of geoengineering encompasses the deliberate large-scale engineering and manipulation of the planetary environment to combat or counteract anthropogenic changes in atmospheric chemistry. Space colonization is a proposed alternative to improve the odds of surviving an extinction scenario.[182] Solutions of this scope may require megascale engineering. Global food storage has been proposed, but its monetary cost would be high. Furthermore, it would likely add to the millions of deaths per year that already result from malnutrition.[183]

Some survivalists stock survival retreats with multiple-year food supplies.

The Svalbard Global Seed Vault is buried 400 feet (120 m) inside a mountain on an island in the Arctic. It is designed to hold 2.5 billion seeds from more than 100 countries as a precaution to preserve the world's crops. The surrounding rock is −6 °C (21 °F) (as of 2015) but the vault is kept at −18 °C (0 °F) by refrigerators powered by locally sourced coal.[184][185]

More speculatively, if society continues to function and if the biosphere remains habitable, calorie needs for the present human population might in theory be met during an extended absence of sunlight, given sufficient advance planning. Conjectured solutions include growing mushrooms on the dead plant biomass left in the wake of the catastrophe, converting cellulose to sugar, or feeding natural gas to methane-digesting bacteria.[186][187]

Global catastrophic risks and global governance

Insufficient global governance creates risks in the social and political domain, but governance mechanisms develop more slowly than technological and social change. Governments, the private sector and the general public have raised concerns about the lack of governance mechanisms to efficiently deal with risks and to negotiate and adjudicate between diverse and conflicting interests. This is further underlined by an understanding of the interconnectedness of global systemic risks.[188] In the absence or anticipation of global governance, national governments can act individually to better understand, mitigate and prepare for global catastrophes.[189]

Climate emergency plans

In 2018, the Club of Rome called for greater climate change action and published its Climate Emergency Plan, which proposes ten action points to limit global average temperature increase to 1.5 degrees Celsius.[190] Further, in 2019, the Club published the more comprehensive Planetary Emergency Plan.[191]

Organizations

The Bulletin of the Atomic Scientists (est. 1945) is one of the oldest global risk organizations, founded after the public became alarmed by the potential of atomic warfare in the aftermath of WWII. It studies risks associated with nuclear war and energy and famously maintains the Doomsday Clock established in 1947. The Foresight Institute (est. 1986) examines the risks of nanotechnology and its benefits. It was one of the earliest organizations to study the unintended consequences of otherwise harmless technology gone haywire at a global scale. It was founded by K. Eric Drexler, who postulated "grey goo".[192][193]

Beginning after 2000, a growing number of scientists, philosophers and tech billionaires created organizations devoted to studying global risks both inside and outside of academia.[194]

Independent non-governmental organizations (NGOs) include the Machine Intelligence Research Institute (est. 2000), which aims to reduce the risk of a catastrophe caused by artificial intelligence,[195] with donors including Peter Thiel and Jed McCaleb.[196] The Nuclear Threat Initiative (est. 2001) seeks to reduce global nuclear, biological and chemical threats and to contain damage after an event.[8] It maintains a nuclear material security index.[197] The Lifeboat Foundation (est. 2009) funds research into preventing a technological catastrophe.[198] Most of the research money funds projects at universities.[199] The Global Catastrophic Risk Institute (est. 2011) is a think tank for catastrophic risk. It is funded by the NGO Social and Environmental Entrepreneurs. The Global Challenges Foundation (est. 2012), based in Stockholm and founded by Laszlo Szombatfalvy, releases a yearly report on the state of global risks.[35][200] The Future of Life Institute (est. 2014) aims to support research and initiatives for safeguarding life considering new technologies and challenges facing humanity.[7] Elon Musk is one of its biggest donors.[201] The Center on Long-Term Risk (est. 2016), formerly known as the Foundational Research Institute, is a British organization focused on reducing risks of astronomical suffering (s-risks) from emerging technologies.[202]

University-based organizations include the Future of Humanity Institute (est. 2005), which researches questions about humanity's long-term future, particularly existential risk.[5] It was founded by Nick Bostrom and is based at Oxford University.[5] The Centre for the Study of Existential Risk (est. 2012) is a Cambridge University-based organization which studies four major technological risks: artificial intelligence, biotechnology, global warming and warfare.[6] All are man-made risks. As Huw Price explained to the AFP news agency, "It seems a reasonable prediction that some time in this or the next century intelligence will escape from the constraints of biology". He added that when this happens "we're no longer the smartest things around," and that we will risk being at the mercy of "machines that are not malicious, but machines whose interests don't include us."[203] Stephen Hawking was an acting adviser. The Millennium Alliance for Humanity and the Biosphere is a Stanford University-based organization focusing on many issues related to global catastrophe by bringing together members of academia in the humanities.[204][205] It was founded by Paul Ehrlich, among others.[206] Stanford University also has the Center for International Security and Cooperation, focusing on political cooperation to reduce global catastrophic risk.[207] The Center for Security and Emerging Technology was established in January 2019 at Georgetown's Walsh School of Foreign Service to focus on policy research on emerging technologies, with an initial emphasis on artificial intelligence.[208] It received a grant of US$55 million from Good Ventures, as suggested by the Open Philanthropy Project.[208]

Other risk assessment groups are based in or are part of governmental organizations. The World Health Organization (WHO) includes a division called the Global Alert and Response (GAR), which monitors and responds to global epidemic crises.[209] GAR helps member states with training and coordination of response to epidemics.[210] The United States Agency for International Development (USAID) has its Emerging Pandemic Threats Program, which aims to prevent and contain naturally generated pandemics at their source.[211] The Lawrence Livermore National Laboratory has a division called the Global Security Principal Directorate, which researches issues such as bio-security and counter-terrorism on behalf of the government.[212]

History

Early history of thinking about human extinction

Before the 18th and 19th centuries, the possibility that humans or other organisms could go extinct was viewed with scepticism.[213] It contradicted the principle of plenitude, a doctrine that all possible things exist.[213] The principle traces back to Aristotle, and was an important tenet of Christian theology.[214]: 121 The doctrine was gradually undermined by evidence from the natural sciences, particularly the discovery of fossil evidence of species that appeared to no longer exist, and the development of theories of evolution.[214]: 121 In On the Origin of Species, Darwin discussed the extinction of species as a natural process and core component of natural selection.[215] Notably, Darwin was skeptical of the possibility of sudden extinctions, viewing extinction as a gradual process. He held that the abrupt disappearance of species from the fossil record was not evidence of catastrophic extinctions, but rather a function of unrecognised gaps in the record.[215]

As the possibility of extinction became more widely established in the sciences, so did the prospect of human extinction.[213] Beyond science, human extinction was explored in literature. The Romantic authors and poets were particularly interested in the topic.[213] Lord Byron wrote about the extinction of life on earth in his 1816 poem ‘Darkness’, and in 1824 envisaged humanity being threatened by a comet impact, and employing a missile system to defend against it.[216] Mary Shelley’s 1826 novel The Last Man is set in a world where humanity has been nearly destroyed by a mysterious plague.[216]

Atomic era

The invention of the atomic bomb prompted a wave of discussion about the risk of human extinction among scientists, intellectuals, and the public at large.[213] In a 1945 essay, Bertrand Russell wrote that "[T]he prospect for the human race is sombre beyond all precedent. Mankind are faced with a clear-cut alternative: either we shall all perish, or we shall have to acquire some slight degree of common sense."[217] A 1950 Gallup poll found that 19% of Americans believed that another world war would mean "an end to mankind".[218]

The discovery of 'nuclear winter' in the early 1980s, a specific mechanism by which nuclear war could result in human extinction, again raised the issue to prominence. Writing about these findings in 1983, Carl Sagan argued that measuring the badness of extinction solely in terms of those who die "conceals its full impact," and that nuclear war "imperils all of our descendants, for as long as there will be humans."[219]

Modern era

John Leslie's 1996 book The End of the World was an academic treatment of the science and ethics of human extinction. In it, Leslie considered a range of threats to humanity and what they have in common. In 2003, British Astronomer Royal Sir Martin Rees published Our Final Hour, in which he argues that advances in certain technologies create new threats for the survival of humankind, and that the 21st century may be a critical moment in history when humanity's fate is decided.[21] Global Catastrophic Risks, a collection of essays from 26 academics on various global catastrophic and existential risks, edited by Nick Bostrom and Milan M. Ćirković, was published in 2008.[220] Toby Ord's 2020 book The Precipice: Existential Risk and the Future of Humanity argues that preventing existential risks is one of the most important moral issues of our time. The book discusses, quantifies and compares different existential risks, concluding that the greatest risks are presented by unaligned artificial intelligence and biotechnology.[18]

See also

- Apocalyptic and post-apocalyptic fiction

- Artificial intelligence arms race

- Cataclysmic pole shift hypothesis

- Community resilience

- Degeneration

- Eschatology

- Extreme risk

- Failed state

- Fermi paradox

- Foresight (psychology)

- Future of Earth

- Future of the Solar System

- Global issue

- Global Risks Report

- Great Filter

- Holocene extinction

- Impact event

- List of global issues

- Nuclear proliferation

- Outside Context Problem

- Planetary boundaries

- Rare events

- The Sixth Extinction: An Unnatural History (nonfiction book)

- Societal collapse

- Speculative evolution: Studying hypothetical animals that could one day inhabit Earth after an existential catastrophe.

- Survivalism

- Tail risk

- Timeline of the far future

- Ultimate fate of the universe

- World Scientists' Warning to Humanity

Notes

- ^ Schulte, P.; et al. (5 March 2010). "The Chicxulub Asteroid Impact and Mass Extinction at the Cretaceous-Paleogene Boundary" (PDF). Science. 327 (5970): 1214–1218. Bibcode:2010Sci...327.1214S. doi:10.1126/science.1177265. PMID 20203042. S2CID 2659741.

- ^ Bostrom, Nick (2008). Global Catastrophic Risks (PDF). Oxford University Press. p. 1.

- ^ Ripple WJ, Wolf C, Newsome TM, Galetti M, Alamgir M, Crist E, Mahmoud MI, Laurance WF (13 November 2017). "World Scientists' Warning to Humanity: A Second Notice". BioScience. 67 (12): 1026–1028. doi:10.1093/biosci/bix125.

- ^ Bostrom, Nick (March 2002). "Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards". Journal of Evolution and Technology. 9.

- ^ "About FHI". Future of Humanity Institute. Retrieved 2021-08-12.

- ^ "About us". Centre for the Study of Existential Risk. Retrieved 2021-08-12.

- ^ "The Future of Life Institute". Future of Life Institute. Retrieved May 5, 2014.

- ^ "Nuclear Threat Initiative". Nuclear Threat Initiative. Retrieved June 5, 2015.

- ^ Bostrom, Nick (2013). "Existential Risk Prevention as Global Priority" (PDF). Global Policy. 4 (1): 15–31. doi:10.1111/1758-5899.12002 – via Existential Risk.

- ^ Bostrom, Nick; Cirkovic, Milan (2008). Global Catastrophic Risks. Oxford: Oxford University Press. p. 1. ISBN 978-0-19-857050-9.

- ^ Ziegler, Philip (2012). The Black Death. Faber and Faber. p. 397. ISBN 9780571287116.

- ^ Muehlhauser, Luke (15 March 2017). "How big a deal was the Industrial Revolution?". lukemuehlhauser.com. Retrieved 3 August 2020.

- ^ Taubenberger, Jeffery; Morens, David (2006). "1918 Influenza: the Mother of All Pandemics". Emerging Infectious Diseases. 12 (1): 15–22. PMC 3291398. PMID 16494711.

- ^ Posner, Richard A. (2006). Catastrophe: Risk and Response. Oxford: Oxford University Press. ISBN 978-0195306477. Introduction, "What is Catastrophe?"

- ^ Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. New York: Hachette. ISBN 9780316484916.

This is an equivalent, though crisper, statement of Nick Bostrom's definition: "An existential risk is one that threatens the premature extinction of Earth-originating intelligent life or the permanent and drastic destruction of its potential for desirable future development." Source: Bostrom, Nick (2013). "Existential Risk Prevention as Global Priority". Global Policy. 4 (1): 15–31.

- ^ Cotton-Barratt, Owen; Ord, Toby (2015), Existential risk and existential hope: Definitions (PDF), Future of Humanity Institute – Technical Report #2015-1, pp. 1–4

- ^ Bostrom, Nick (2003). "Astronomical Waste: The opportunity cost of delayed technological development". Utilitas. 15 (3): 308–314. CiteSeerX 10.1.1.429.2849. doi:10.1017/s0953820800004076. S2CID 15860897.

- ^ Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. New York: Hachette. ISBN 9780316484916.

- ^ Orwell, George (1949). Nineteen Eighty-Four. A novel. London: Secker & Warburg.

- ^ Caplan, Bryan (2008). "The totalitarian threat". In Bostrom, Nick; Cirkovic, Milan M. (eds.). Global Catastrophic Risks. Oxford University Press. pp. 504–519. ISBN 9780198570509.

- ^ Rees, Martin (2003). Our Final Hour: A Scientist's Warning: How Terror, Error, and Environmental Disaster Threaten Humankind's Future In This Century - On Earth and Beyond. Basic Books. ISBN 0-465-06863-4.

- ^ Bostrom, Nick; Sandberg, Anders (2008). "Global Catastrophic Risks Survey" (PDF). FHI Technical Report #2008-1. Future of Humanity Institute.

- ^ Snyder-Beattie, Andrew E.; Ord, Toby; Bonsall, Michael B. (2019-07-30). "An upper bound for the background rate of human extinction". Scientific Reports. 9 (1): 11054. doi:10.1038/s41598-019-47540-7. ISSN 2045-2322. PMC 6667434. PMID 31363134.

- ^ "Frequently Asked Questions". Existential Risk. Future of Humanity Institute. Retrieved 26 July 2013.

The great bulk of existential risk in the foreseeable future is anthropogenic; that is, arising from human activity.

- ^ Matheny, Jason Gaverick (2007). "Reducing the Risk of Human Extinction" (PDF). Risk Analysis. 27 (5): 1335–1344. doi:10.1111/j.1539-6924.2007.00960.x. PMID 18076500.

- ^ Asher, D.J.; Bailey, M.E.; Emel'yanenko, V.; Napier, W.M. (2005). "Earth in the cosmic shooting gallery" (PDF). The Observatory. 125: 319–322. Bibcode:2005Obs...125..319A.

- ^ Rampino, M.R.; Ambrose, S.H. (2002). "Super eruptions as a threat to civilizations on Earth-like planets" (PDF). Icarus. 156 (2): 562–569. Bibcode:2002Icar..156..562R. doi:10.1006/icar.2001.6808.

- ^ Yost, Chad L.; Jackson, Lily J.; Stone, Jeffery R.; Cohen, Andrew S. (2018-03-01). "Subdecadal phytolith and charcoal records from Lake Malawi, East Africa imply minimal effects on human evolution from the ∼74 ka Toba supereruption". Journal of Human Evolution. 116: 75–94. doi:10.1016/j.jhevol.2017.11.005. ISSN 0047-2484. PMID 29477183.

- ^ Sagan, Carl (1994). Pale Blue Dot. Random House. pp. 305–6. ISBN 0-679-43841-6.

Some planetary civilizations see their way through, place limits on what may and what must not be done, and safely pass through the time of perils. Others, not so lucky or so prudent, perish.

- ^ Parfit, Derek (2011). On What Matters Vol. 2. Oxford University Press. p. 616. ISBN 9780199681044.

We live during the hinge of history ... If we act wisely in the next few centuries, humanity will survive its most dangerous and decisive period.

- ^ Rowe, Thomas; Beard, Simon (2018). "Probabilities, methodologies and the evidence base in existential risk assessments" (PDF). Working Paper, Centre for the Study of Existential Risk. Retrieved 26 August 2018.

- ^ Leslie, John (1996). The End of the World: The Science and Ethics of Human Extinction. Routledge. p. 146.

- ^ Bostrom, Nick (2002). "Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards". Journal of Evolution and Technology. 9.

- ^ Rees, Martin (2004) [2003]. Our Final Century. Arrow Books. p. 9.

- ^ Meyer, Robinson (April 29, 2016). "Human Extinction Isn't That Unlikely". The Atlantic. Boston, Massachusetts: Emerson Collective. Retrieved April 30, 2016.

- ^ Grace, Katja; Salvatier, John; Dafoe, Allen; Zhang, Baobao; Evans, Owain (3 May 2018). "When Will AI Exceed Human Performance? Evidence from AI Experts". arXiv:1705.08807 [cs.AI].

- ^ Purtill, Corinne. "How Close Is Humanity to the Edge?". The New Yorker. Retrieved 2021-01-08.

- ^ "Will humans go extinct by 2100?". Metaculus. Retrieved 2021-08-12.

- ^ Sagan, Carl (Winter 1983). "Nuclear War and Climatic Catastrophe: Some Policy Implications". Foreign Affairs. Council on Foreign Relations. doi:10.2307/20041818. JSTOR 20041818. Retrieved 4 August 2020.

- ^ Jebari, Karim (2014). "Existential Risks: Exploring a Robust Risk Reduction Strategy" (PDF). Science and Engineering Ethics. 21 (3): 541–54. doi:10.1007/s11948-014-9559-3. PMID 24891130. S2CID 30387504. Retrieved 26 August 2018.

- ^ Cirkovic, Milan M.; Bostrom, Nick; Sandberg, Anders (2010). "Anthropic Shadow: Observation Selection Effects and Human Extinction Risks" (PDF). Risk Analysis. 30 (10): 1495–1506. doi:10.1111/j.1539-6924.2010.01460.x. PMID 20626690.

- ^ Kemp, Luke (February 2019). "Are we on the road to civilisation collapse?". BBC. Retrieved 2021-08-12.

- ^ Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. ISBN 9780316484893.

Europe survived losing 25 to 50 percent of its population in the Black Death, while keeping civilization firmly intact

- ^ Yudkowsky, Eliezer (2008). "Cognitive Biases Potentially Affecting Judgment of Global Risks" (PDF). Global Catastrophic Risks: 91–119. Bibcode:2008gcr..book...86Y.

- ^ Desvousges, W.H.; Johnson, F.R.; Dunford, R.W.; Boyle, K.J.; Hudson, S.P.; Wilson, N. (1993). "Measuring natural resource damages with contingent valuation: tests of validity and reliability". In Hausman, J.A. (ed.). Contingent Valuation: A Critical Assessment. Amsterdam: North Holland. pp. 91–159.

- ^ Parfit, Derek (1984). Reasons and Persons. Oxford University Press. pp. 453–454.

- ^ MacAskill, William; Yetter Chappell, Richard (2021). "Population Ethics | Practical Implications of Population Ethical Theories". Introduction to Utilitarianism. Retrieved 2021-08-12.

- ^ Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. New York: Hachette. ISBN 9780316484916.

- ^ Todd, Benjamin (2017). "The case for reducing existential risks". 80,000 Hours. Retrieved January 8, 2020.

- ^ Narveson, Jan (1973). "Moral Problems of Population". The Monist. 57 (1): 62–86. doi:10.5840/monist197357134. PMID 11661014.

- ^ Greaves, Hilary (2017). "Discounting for Public Policy: A Survey". Economics & Philosophy. 33 (3): 391–439. doi:10.1017/S0266267117000062. ISSN 0266-2671. S2CID 21730172.

- ^ Greaves, Hilary (2017). "Population axiology". Philosophy Compass. 12 (11): e12442. doi:10.1111/phc3.12442. ISSN 1747-9991.

- ^ Lewis, Gregory (23 May 2018). "The person-affecting value of existential risk reduction". www.gregoryjlewis.com. Retrieved 7 August 2020.

- ^ Sagan, Carl (Winter 1983). "Nuclear War and Climatic Catastrophe: Some Policy Implications". Foreign Affairs. Council on Foreign Relations. doi:10.2307/20041818. JSTOR 20041818. Retrieved 4 August 2020.

- ^ Burke, Edmund (1999) [1790]. "Reflections on the Revolution in France" (PDF). In Canavan, Francis (ed.). Select Works of Edmund Burke Volume 2. Liberty Fund. p. 192.

- ^ Weitzman, Martin (2009). "On modeling and interpreting the economics of catastrophic climate change" (PDF). The Review of Economics and Statistics. 91 (1): 1–19. doi:10.1162/rest.91.1.1. S2CID 216093786.

- ^ Posner, Richard (2004). Catastrophe: Risk and Response. Oxford University Press.

- ^ IPCC (11 November 2013): D. "Understanding the Climate System and its Recent Changes", in: Summary for Policymakers (finalized version) Archived 2017-03-09 at the Wayback Machine, in: IPCC AR5 WG1 2013, p. 13

- ^ "Global Catastrophic Risks: a summary". Centre for the Study of Existential Risk. 2019-08-11.

- ^ "Research Areas". Future of Humanity Institute. Retrieved 2021-08-12.

- ^ "Research". Centre for the Study of Existential Risk. Retrieved 2021-08-12.

- ^ Joy, Bill (April 2000). "Why the Future Doesn't Need Us". Wired.

- ^ Bostrom, Nick (2002). "Ethical Issues in Advanced Artificial Intelligence".

- ^ Bostrom, Nick (2014). Superintelligence: Paths, Dangers, Strategies. Oxford University Press.

- ^ Rawlinson, Kevin (2015-01-29). "Microsoft's Bill Gates insists AI is a threat". BBC News. Retrieved 30 January 2015.

- ^ Markoff, John (July 26, 2009). "Scientists Worry Machines May Outsmart Man". The New York Times.

- ^ Singer, P. W. (May 21, 2009). "Gaming the Robot Revolution: A military technology expert weighs in on Terminator: Salvation". Slate.

- ^ "Robot page". Engadget.

- ^ Grace, Katja (2017). "When Will AI Exceed Human Performance? Evidence from AI Experts". Journal of Artificial Intelligence Research. arXiv:1705.08807. Bibcode:2017arXiv170508807G.

- ^ Yudkowsky, Eliezer (2008). Artificial Intelligence as a Positive and Negative Factor in Global Risk. Bibcode:2008gcr..book..303Y. Retrieved 26 July 2013.

- ^ Nouri, Ali; Chyba, Christopher F. (2008). "Chapter 20: Biotechnology and biosecurity". In Bostrom, Nick; Cirkovic, Milan M. (eds.). Global Catastrophic Risks. Oxford University Press.

- ^ Sandberg, Anders. "The five biggest threats to human existence". theconversation.com. Retrieved 13 July 2014.

- ^ Jackson, Ronald J.; Ramsay, Alistair J.; Christensen, Carina D.; Beaton, Sandra; Hall, Diana F.; Ramshaw, Ian A. (2001). "Expression of Mouse Interleukin-4 by a Recombinant Ectromelia Virus Suppresses Cytolytic Lymphocyte Responses and Overcomes Genetic Resistance to Mousepox". Journal of Virology. 75 (3): 1205–1210. doi:10.1128/jvi.75.3.1205-1210.2001. PMC 114026. PMID 11152493.

- ^ UCLA Engineering (June 28, 2017). "Scholars assess threats to civilization, life on Earth". UCLA. Retrieved June 30, 2017.

- ^ Graham, Chris (July 11, 2017). "Earth undergoing sixth 'mass extinction' as humans spur 'biological annihilation' of wildlife". The Telegraph. Retrieved October 20, 2017.

- ^ Evans-Pritchard, Ambrose (6 February 2011). "Einstein was right - honey bee collapse threatens global food security". The Daily Telegraph. London.

- ^ Lovgren, Stefan. "Mystery Bee Disappearances Sweeping U.S." National Geographic News. Retrieved March 10, 2007.

- ^ Carrington, Damian (20 October 2017). "Global pollution kills 9m a year and threatens 'survival of human societies'". The Guardian. London, UK. Retrieved 20 October 2017.

- ^ Ahmed, Nafeez. "Theoretical Physicists Say 90% Chance of Societal Collapse Within Several Decades". Vice. Retrieved 2 August 2021.

- ^ Bologna, M.; Aquino, G. (2020). "Deforestation and world population sustainability: a quantitative analysis". Scientific Reports. 10: 7631. doi:10.1038/s41598-020-63657-6. PMC 7203172. PMID 32376879.

- ^ Bostrom 2002, section 4.8

- ^ Richard Hamming (1998). "Mathematics on a Distant Planet". The American Mathematical Monthly. 105 (7): 640–650. doi:10.1080/00029890.1998.12004938. JSTOR 2589247.

- ^ "Report LA-602, Ignition of the Atmosphere With Nuclear Bombs" (PDF). Retrieved 2011-10-19.

- ^ "A Black Hole Ate My Planet". New Scientist. 28 August 1999.

- ^ Konopinski, E. J; Marvin, C.; Teller, Edward (1946). "Ignition of the Atmosphere with Nuclear Bombs" (PDF) (Declassified February 1973) (LA–602). Los Alamos National Laboratory. Retrieved 23 November 2008.

- ^ "Statement by the Executive Committee of the DPF on the Safety of Collisions at the Large Hadron Collider." Archived 2009-10-24 at the Wayback Machine

- ^ "Safety at the LHC". Archived from the original on 2008-05-13. Retrieved 2008-06-18.

- ^ Blaizot, J.; et al. "Study of Potentially Dangerous Events During Heavy-Ion Collisions at the LHC" (PDF). CERN Yellow Reports. CERN.

- ^ Drexler, Eric (1986). Engines of Creation. ISBN 0-385-19973-2.

- ^ "Hint to Coal Consumers". The Selma Morning Times. Selma, Alabama, US. October 15, 1902. p. 4.

- ^ Georgescu-Roegen, Nicholas (1971). The Entropy Law and the Economic Process (Full book accessible in three parts at Scribd). Cambridge, Massachusetts: Harvard University Press. ISBN 978-0674257801.

- ^ Daly, Herman E., ed. (1980). Economics, Ecology, Ethics. Essays Towards a Steady-State Economy (PDF contains only the introductory chapter of the book) (2nd ed.). San Francisco: W.H. Freeman and Company. ISBN 978-0716711780.

- ^ Rifkin, Jeremy (1980). Entropy: A New World View (PDF). New York: The Viking Press. ISBN 978-0670297177. Archived from the original (PDF contains only the title and contents pages of the book) on 2016-10-18.

- ^ Boulding, Kenneth E. (1981). Evolutionary Economics. Beverly Hills: Sage Publications. ISBN 978-0803916487.

- ^ Martínez-Alier, Juan (1987). Ecological Economics: Energy, Environment and Society. Oxford: Basil Blackwell. ISBN 978-0631171461.

- ^ Gowdy, John M.; Mesner, Susan (1998). "The Evolution of Georgescu-Roegen's Bioeconomics" (PDF). Review of Social Economy. 56 (2): 136–156. doi:10.1080/00346769800000016.

- ^ Schmitz, John E.J. (2007). The Second Law of Life: Energy, Technology, and the Future of Earth As We Know It (Author's science blog, based on his textbook). Norwich: William Andrew Publishing. ISBN 978-0815515371.