Hypertext Transfer Protocol

| | |
|---|---|
| International standard | RFC 1945 HTTP/1.0 (1996); RFC 2068 HTTP/1.1 (1997) |
| Developed by | initially CERN; IETF, W3C |
| Introduced | 1991 |


The Hypertext Transfer Protocol (HTTP) is an application layer protocol in the Internet protocol suite model for distributed, collaborative, hypermedia information systems.[1] HTTP is the foundation of data communication for the World Wide Web, where hypertext documents include hyperlinks to other resources that the user can easily access, for example by a mouse click or by tapping the screen in a web browser.

Development of HTTP was initiated by Tim Berners-Lee at CERN in 1989. Development of early HTTP Requests for Comments (RFCs) was a coordinated effort by the Internet Engineering Task Force (IETF) and the World Wide Web Consortium (W3C), with work later moving to the IETF.

HTTP/1.0 was documented in RFC 1945 in 1996, and HTTP/1.1 was first documented in 1997.[2]

HTTP/2, published in 2015, is a more efficient expression of HTTP's semantics "on the wire". It is used by 45% of websites and is now supported by virtually all web browsers[3] and major web servers over Transport Layer Security (TLS), using the Application-Layer Protocol Negotiation (ALPN) extension,[4] for which TLS 1.2 or newer is required.[5][6]

HTTP/3 is the proposed successor to HTTP/2.[7][8] It uses QUIC instead of TCP as the underlying transport protocol. Two-thirds of web browser users (on both desktop and mobile) can already use HTTP/3 on the 20% of websites that support it. Like HTTP/2, it does not obsolete previous major versions of the protocol. Support for HTTP/3 was added to Cloudflare and Google Chrome first,[9][10] and is also enabled in Firefox.[11]

Technical overview

HTTP functions as a request–response protocol in the client–server computing model. A web browser, for example, may be the client and an application running on a computer hosting a website may be the server. The client submits an HTTP request message to the server. The server, which provides resources such as HTML files and other content, or performs other functions on behalf of the client, returns a response message to the client. The response contains completion status information about the request and may also contain requested content in its message body.

A web browser is an example of a user agent (UA). Other types of user agent include the indexing software used by search providers (web crawlers), voice browsers, mobile apps, and other software that accesses, consumes, or displays web content.

HTTP is designed to permit intermediate network elements to improve or enable communications between clients and servers. High-traffic websites often benefit from web cache servers that deliver content on behalf of upstream servers to improve response time. Web browsers cache previously accessed web resources and reuse them, when possible, to reduce network traffic. HTTP proxy servers at private network boundaries can facilitate communication for clients without a globally routable address, by relaying messages with external servers.

HTTP is an application layer protocol designed within the framework of the Internet protocol suite. Its definition presumes an underlying and reliable transport layer protocol,[12] and Transmission Control Protocol (TCP) is commonly used. However, HTTP can be adapted to use unreliable protocols such as the User Datagram Protocol (UDP), for example in HTTPU and Simple Service Discovery Protocol (SSDP).

HTTP resources are identified and located on the network by Uniform Resource Locators (URLs), using the Uniform Resource Identifier (URI) schemes http and https. As defined in RFC 3986, URIs are encoded as hyperlinks in HTML documents, so as to form interlinked hypertext documents.

HTTP/1.1 is a revision of the original HTTP (HTTP/1.0). In HTTP/1.0, a separate connection to the same server is made for every resource request. HTTP/1.1 can reuse a connection multiple times to download images, scripts, stylesheets, etc. after the page has been delivered. HTTP/1.1 communications therefore experience less latency, as the establishment of TCP connections presents considerable overhead.[13]
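As a rough illustration of how an http or https URL maps onto what a client needs to connect and send a request, the following sketch uses Python's standard `urllib.parse` module. The helper name `describe_url` is hypothetical, and the default port of 443 for https is a well-known convention that is not stated in the text above.

```python
from urllib.parse import urlsplit


def describe_url(url: str) -> dict:
    """Split an http/https URL into the parts an HTTP client needs (illustrative helper)."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"not an HTTP URL: {url!r}")
    # Use the explicit port if present, otherwise the scheme's default port.
    port = parts.port or (443 if parts.scheme == "https" else 80)
    # The request target is the path plus the optional query string.
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "port": port,
        "target": target,
    }
```

For example, `describe_url("http://www.example.com")` yields host `www.example.com`, port 80, and request target `/`.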

History

The term hypertext was coined by Ted Nelson in 1965 in the Xanadu Project, which was in turn inspired by Vannevar Bush's 1930s vision of the microfilm-based information retrieval and management "memex" system described in his 1945 essay "As We May Think". Tim Berners-Lee and his team at CERN are credited with inventing the original HTTP, along with HTML and the associated technology for a web server and a text-based web browser. Berners-Lee first proposed the "WorldWideWeb" project, now known as the World Wide Web, in 1989. The first web server went live in 1990.[14][15] The protocol used had only one method, namely GET, which would request a page from a server.[16] The response from the server was always an HTML page.[17]

The first documented version of HTTP was HTTP version 0.9 (1991). Dave Raggett led the HTTP Working Group (HTTP WG) in 1995 and wanted to expand the protocol with extended operations, extended negotiation, and richer meta-information, tied with a security protocol that became more efficient by adding additional methods and header fields.[18][19] RFC 1945 officially introduced and recognized HTTP version 1.0 in 1996.

The HTTP WG planned to publish new standards in December 1995,[20] and support for pre-standard HTTP/1.1, based on the then-developing RFC 2068 (called HTTP-NG), was rapidly adopted by the major browser developers in early 1996. End-user adoption of the new browsers was rapid. In March 1996, one web hosting company reported that over 40% of browsers in use on the Internet were HTTP/1.1 compliant. That same web hosting company reported that by June 1996, 65% of all browsers accessing their servers were HTTP/1.1 compliant.[21] The HTTP/1.1 standard as defined in RFC 2068 was officially released in January 1997. Improvements and updates to the HTTP/1.1 standard were released under RFC 2616 in June 1999.

In 2007, the HTTP Working Group was formed, in part, to revise and clarify the HTTP/1.1 specification. In June 2014, the WG released an updated six-part specification obsoleting RFC 2616:

- RFC 7230, HTTP/1.1: Message Syntax and Routing

- RFC 7231, HTTP/1.1: Semantics and Content

- RFC 7232, HTTP/1.1: Conditional Requests

- RFC 7233, HTTP/1.1: Range Requests

- RFC 7234, HTTP/1.1: Caching

- RFC 7235, HTTP/1.1: Authentication

HTTP/2 was published as RFC 7540 in May 2015.

| Year | Version |
|---|---|
| 1991 | HTTP/0.9 |
| 1996 | HTTP/1.0 |
| 1997 | HTTP/1.1 |
| 2015 | HTTP/2 |
| 2020 (draft) | HTTP/3 |

HTTP session

An HTTP session is a sequence of network request–response transactions. An HTTP client initiates a request by establishing a Transmission Control Protocol (TCP) connection to a particular port on a server (typically port 80, occasionally port 8080; see List of TCP and UDP port numbers). An HTTP server listening on that port waits for a client's request message. Upon receiving the request, the server sends back a status line, such as "HTTP/1.1 200 OK", and a message of its own. The body of this message is typically the requested resource, although an error message or other information may also be returned.[1]
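The exchange described above can be sketched with a plain TCP socket. This is a minimal illustration, not a full client: it starts a throwaway local server from Python's standard `http.server` module (the `HelloHandler` class and the `demo` function are hypothetical names introduced here), sends one request, and reads until the server closes the connection.

```python
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler that answers every GET with a short text body."""

    def do_GET(self):
        body = b"hello\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_raw(host: str, port: int, target: str = "/") -> bytes:
    """Open a TCP connection, send one request, and read until the server closes."""
    request = f"GET {target} HTTP/1.0\r\nHost: {host}\r\n\r\n".encode("ascii")
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(request)
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # empty read: the server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


def demo() -> tuple[str, bytes]:
    """Run one request against a local server; return (status line, body)."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        raw = fetch_raw("127.0.0.1", server.server_address[1])
    finally:
        server.shutdown()
        server.server_close()
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line = head.split(b"\r\n", 1)[0].decode("ascii")
    return status_line, body
```

The request here uses HTTP/1.0 so that the server closes the connection after the response, which keeps the read loop simple.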

Persistent connections

In HTTP/0.9 and 1.0, the connection is closed after a single request/response pair. In HTTP/1.1, a keep-alive mechanism was introduced, whereby a connection can be reused for more than one request. Such persistent connections reduce request latency perceptibly because the client does not need to repeat the TCP three-way handshake after the first request has been sent. Another positive side effect is that, in general, the connection becomes faster with time due to TCP's slow-start mechanism.

Version 1.1 of the protocol also made bandwidth optimization improvements to HTTP/1.0. For example, HTTP/1.1 introduced chunked transfer encoding to allow content on persistent connections to be streamed rather than buffered. HTTP pipelining further reduces lag time, allowing clients to send multiple requests before waiting for each response. Another addition to the protocol was byte serving, where a server transmits just the portion of a resource explicitly requested by a client.
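One way to observe connection reuse is to have a test server report the client's TCP source port: if several responses report the same port, they travelled over the same connection. The sketch below assumes Python's standard `http.client` and `http.server` modules; `PortEchoHandler` and `client_ports_seen` are hypothetical names used only for this illustration.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class PortEchoHandler(BaseHTTPRequestHandler):
    """Hypothetical handler that replies with the client's TCP source port."""

    protocol_version = "HTTP/1.1"  # lets http.server keep the connection open

    def do_GET(self):
        # client_address is (ip, port); the same port means the same TCP connection.
        body = str(self.client_address[1]).encode("ascii")
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def client_ports_seen(paths):
    """Request each path over one HTTPConnection; return the ports the server saw."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), PortEchoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
    try:
        ports = []
        for path in paths:
            conn.request("GET", path)
            response = conn.getresponse()
            # The body must be read fully before the connection can be reused.
            ports.append(response.read().decode("ascii"))
        return ports
    finally:
        conn.close()
        server.shutdown()
        server.server_close()
```

Calling `client_ports_seen(["/a", "/b", "/c"])` should return three identical port strings, since all three requests share one persistent connection.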

HTTP session state

HTTP is a stateless protocol. A stateless protocol does not require the HTTP server to retain information or status about each user for the duration of multiple requests. However, some web applications implement state or server-side sessions using, for instance, HTTP cookies or hidden variables within web forms.
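As a small sketch of how cookies can carry a session identifier across otherwise independent requests, the following uses Python's standard `http.cookies` module. The cookie name `session_id` and both helper functions are assumptions made for illustration, not part of any specification.

```python
from http.cookies import SimpleCookie


def make_set_cookie(session_id: str) -> str:
    """Build the value of a Set-Cookie response header for a hypothetical session cookie."""
    cookie = SimpleCookie()
    cookie["session_id"] = session_id
    cookie["session_id"]["path"] = "/"
    cookie["session_id"]["httponly"] = True
    return cookie["session_id"].OutputString()


def read_session_id(cookie_header: str):
    """Extract the session identifier from a Cookie request header, if present."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    morsel = cookie.get("session_id")
    return morsel.value if morsel is not None else None
```

The server would send the first value in a `Set-Cookie` header; the client then returns it in a `Cookie` header on later requests, letting the application look up per-user state on its side.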

HTTP authentication

HTTP provides multiple authentication schemes such as basic access authentication and digest access authentication which operate via a challenge–response mechanism whereby the server identifies and issues a challenge before serving the requested content.

HTTP provides a general framework for access control and authentication, via an extensible set of challenge–response authentication schemes, which can be used by a server to challenge a client request and by a client to provide authentication information.[22]

Authentication realms

The HTTP Authentication specification also provides an arbitrary, implementation-specific construct for further dividing resources common to a given root URI. The realm value string, if present, is combined with the canonical root URI to form the protection space component of the challenge. This in effect allows the server to define separate authentication scopes under one root URI.[22]
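To make the challenge–response exchange more concrete, here is a minimal sketch of the client side of basic access authentication: reading the scheme and realm from a `WWW-Authenticate` challenge and building the matching `Authorization` value. Both function names are hypothetical, and the challenge parser is deliberately simplified (one challenge, no commas inside quoted values).

```python
import base64


def parse_challenge(header_value: str) -> tuple[str, dict]:
    """Split a simple WWW-Authenticate value into (scheme, parameters).

    Simplification: handles a single challenge whose quoted values contain no commas.
    """
    scheme, _, rest = header_value.partition(" ")
    params = {}
    for item in rest.split(","):
        name, _, value = item.strip().partition("=")
        if name:
            params[name.lower()] = value.strip('"')
    return scheme, params


def basic_credentials(username: str, password: str) -> str:
    """Build the Authorization header value for basic access authentication."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"
```

Note that the base64 step is an encoding, not encryption, so basic credentials are only protected when the connection itself is encrypted.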

Request messages

Request syntax

A client sends request messages to the server, which consist of:[23]

- a request line, consisting of the case-sensitive request method, a space, the request target, another space, the protocol version, a carriage return, and a line feed (e.g. GET /images/logo.png HTTP/1.1);

- zero or more request header fields, each consisting of the case-insensitive field name, a colon, optional leading whitespace, the field value, and optional trailing whitespace (e.g. Accept-Language: en), and ending with a carriage return and a line feed;

- an empty line, consisting of a carriage return and a line feed;

- an optional message body.

In the HTTP/1.1 protocol, all header fields except Host are optional.

A request line containing only the path name is accepted by servers to maintain compatibility with HTTP clients before the HTTP/1.0 specification in RFC 1945.[24]
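The request layout listed above can be sketched as a pair of helpers that serialize and parse an HTTP/1.1 request message. The function names are illustrative, and the parser is a simplification: it assumes well-formed input and does not handle repeated header fields.

```python
def build_request(method: str, target: str, headers: dict, body: bytes = b"") -> bytes:
    """Serialize a request line, header fields, an empty line, and an optional body."""
    lines = [f"{method} {target} HTTP/1.1"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    head = "\r\n".join(lines) + "\r\n\r\n"  # the final CRLF pair is the empty line
    return head.encode("ascii") + body


def parse_request(raw: bytes):
    """Parse a well-formed request into (method, target, version, headers, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    request_line, *header_lines = head.decode("ascii").split("\r\n")
    method, target, version = request_line.split(" ")
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        # Field names are case-insensitive; surrounding whitespace in values is optional.
        headers[name.lower()] = value.strip()
    return method, target, version, headers, body
```

The method is kept exactly as written because method names are case-sensitive, while header field names are lowercased on parsing because they are not.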

Request methods

HTTP defines methods to indicate the desired action to be performed on the identified resource. Methods are sometimes referred to as verbs, although the specification never uses that term and OPTIONS and HEAD are not verbs. What this resource represents, whether pre-existing data or data that is generated dynamically, depends on the implementation of the server. Often, the resource corresponds to a file or the output of an executable residing on the server. The HTTP/1.0 specification[25] defined the GET, HEAD and POST methods, and the HTTP/1.1 specification[26] added five new methods: PUT, DELETE, CONNECT, OPTIONS, and TRACE. Because they are specified in these documents, their semantics are well known and can be depended on. Any client can use any method and the server can be configured to support any combination of methods. If a method is unknown to an intermediary, it will be treated as an unsafe and non-idempotent method. There is no limit to the number of methods that can be defined, which allows future methods to be specified without breaking existing infrastructure. For example, WebDAV defined seven new methods and RFC 5789 specified the PATCH method.

Method names are case sensitive.[27][28] This is in contrast to HTTP header field names which are case-insensitive.[29]

- GET

- The GET method requests that the target resource transfers a representation of its state. GET requests should only retrieve data and should have no other effect. (This is also true of some other HTTP methods.)[1] The W3C has published guidance principles on this distinction, saying, "Web application design should be informed by the above principles, but also by the relevant limitations."[30] See safe methods below.

- HEAD

- The HEAD method requests that the target resource transfers a representation of its state, like for a GET request, but without the representation data enclosed in the response body. This is useful for retrieving the representation metadata in the response header, without having to transfer the entire representation.

- POST

- The POST method requests that the target resource processes the representation enclosed in the request according to the semantics of the target resource. For example, it is used for posting a message to an Internet forum, subscribing to a mailing list, or completing an online shopping transaction.[31]

- PUT

- The PUT method requests that the target resource creates or updates its state with the state defined by the representation enclosed in the request.[32]

- DELETE

- The DELETE method requests that the target resource deletes its state.

- CONNECT

- The CONNECT method requests that the intermediary establish a TCP/IP tunnel to the origin server identified by the request target. It is often used to secure connections through one or more HTTP proxies with TLS.[33][34][35] See HTTP CONNECT method.

- OPTIONS

- The OPTIONS method requests that the target resource transfers the HTTP methods that it supports. This can be used to check the functionality of a web server by requesting '*' instead of a specific resource.

- TRACE

- The TRACE method requests that the target resource transfers the received request in the response body. That way a client can see what (if any) changes or additions have been made by intermediaries.

- PATCH

- The PATCH method requests that the target resource modifies its state according to the partial update defined in the representation enclosed in the request.[36]

All general-purpose HTTP servers are required to implement at least the GET and HEAD methods, and all other methods are considered optional by the specification.[37]

| Request method | RFC | Request has payload body | Response has payload body | Safe | Idempotent | Cacheable |
|---|---|---|---|---|---|---|
| GET | RFC 7231 | Optional | Yes | Yes | Yes | Yes |
| HEAD | RFC 7231 | Optional | No | Yes | Yes | Yes |
| POST | RFC 7231 | Yes | Yes | No | No | Yes |
| PUT | RFC 7231 | Yes | Yes | No | Yes | No |
| DELETE | RFC 7231 | Optional | Yes | No | Yes | No |
| CONNECT | RFC 7231 | Optional | Yes | No | No | No |
| OPTIONS | RFC 7231 | Optional | Yes | Yes | Yes | No |
| TRACE | RFC 7231 | No | Yes | Yes | Yes | No |
| PATCH | RFC 5789 | Yes | Yes | No | No | No |

Safe methods

A request method is safe if a request with that method has no intended effect on the server. The methods GET, HEAD, OPTIONS, and TRACE are defined as safe. In other words, safe methods are intended to be read-only. They do not exclude side effects, however, such as appending request information to a log file or charging an advertising account, because such effects are, by definition, not requested by the client.

In contrast, the methods POST, PUT, DELETE, CONNECT, and PATCH are not safe. They may modify the state of the server or have other effects such as sending an email. Such methods are therefore not usually used by conforming web robots or web crawlers; some that do not conform tend to make requests without regard to context or consequences.

Despite the prescribed safety of GET requests, in practice their handling by the server is not technically limited in any way. Therefore, careless or deliberate programming can cause non-trivial changes on the server. This is discouraged, because it can cause problems for web caching, search engines and other automated agents, which can make unintended changes on the server. For example, a website might allow deletion of a resource through a URL such as https://example.com/article/1234/delete, which, if arbitrarily fetched, even using GET, would simply delete the article.[38]

One example of this occurring in practice was during the short-lived Google Web Accelerator beta, which prefetched arbitrary URLs on the page a user was viewing, causing records to be automatically altered or deleted en masse. The beta was suspended only weeks after its first release, following widespread criticism.[39][38]

Idempotent methods

A request method is idempotent if multiple identical requests with that method have the same intended effect as a single such request. The methods PUT and DELETE, as well as the safe methods, are defined as idempotent.

In contrast, the methods POST, CONNECT, and PATCH are not necessarily idempotent, and therefore sending an identical POST request multiple times may further modify the state of the server or have further effects such as sending an email. In some cases this may be desirable, but in other cases this could be due to an accident, such as when a user does not realize that their action will result in sending another request, or they did not receive adequate feedback that their first request was successful. While web browsers may show alert dialog boxes to warn users in some cases where reloading a page may re-submit a POST request, it is generally up to the web application to handle cases where a POST request should not be submitted more than once.

Note that whether a method is idempotent is not enforced by the protocol or web server. It is perfectly possible to write a web application in which (for example) a database insert or other non-idempotent action is triggered by a GET or other request. Ignoring this recommendation, however, may result in undesirable consequences, if a user agent assumes that repeating the same request is safe when it is not.

Cacheable methods

A request method is cacheable if responses to requests with that method may be stored for future reuse. The methods GET, HEAD, and POST are defined as cacheable.

In contrast, the methods PUT, DELETE, CONNECT, OPTIONS, TRACE, and PATCH are not cacheable.
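The safe, idempotent, and cacheable classifications above can be captured in a few sets, which is roughly how a client or intermediary might decide whether a failed request can be repeated automatically. This is a sketch; the set names and the helper `may_retry_automatically` are hypothetical.

```python
# Method properties as described in the sections above.
SAFE = frozenset({"GET", "HEAD", "OPTIONS", "TRACE"})
IDEMPOTENT = SAFE | {"PUT", "DELETE"}  # every safe method is also idempotent
CACHEABLE = frozenset({"GET", "HEAD", "POST"})


def may_retry_automatically(method: str) -> bool:
    """Return True if repeating the request has the same intended effect as sending it once.

    Method names are case-sensitive, so "get" is not recognized. Unknown methods
    are treated as unsafe and non-idempotent.
    """
    return method in IDEMPOTENT
```

Under this sketch a dropped PUT can be resent without asking the user, whereas a dropped POST cannot, because a second POST may trigger the action twice.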

Request header fields

Request header fields allow the client to pass additional information beyond the request line, acting as request modifiers (similarly to the parameters of a procedure). They give information about the client, about the target resource, or about the expected handling of the request.

Response messages

Response syntax

A server sends response messages to the client, which consist of:[23]

- a status line, consisting of the protocol version, a space, the response status code, another space, a possibly empty reason phrase, a carriage return, and a line feed (e.g. HTTP/1.1 200 OK);

- zero or more response header fields, each consisting of the case-insensitive field name, a colon, optional leading whitespace, the field value, and optional trailing whitespace (e.g. Content-Type: text/html), and ending with a carriage return and a line feed;

- an empty line, consisting of a carriage return and a line feed;

- an optional message body.

Response status codes

In HTTP/1.0 and since, the first line of the HTTP response is called the status line and includes a numeric status code (such as "404") and a textual reason phrase (such as "Not Found"). The response status code is a three-digit integer code representing the result of the server's attempt to understand and satisfy the client's corresponding request. The way the client handles the response depends primarily on the status code, and secondarily on the other response header fields. Clients may not understand all registered status codes but they must understand their class (given by the first digit of the status code) and treat an unrecognized status code as being equivalent to the x00 status code of that class.

The standard reason phrases are only recommendations, and can be replaced with "local equivalents" at the web developer's discretion. If the status code indicated a problem, the user agent might display the reason phrase to the user to provide further information about the nature of the problem. The standard also allows the user agent to attempt to interpret the reason phrase, though this might be unwise since the standard explicitly specifies that status codes are machine-readable and reason phrases are human-readable.

The first digit of the status code defines its class:

- 1XX (informational): The request was received, continuing process.

- 2XX (successful): The request was successfully received, understood, and accepted.

- 3XX (redirection): Further action needs to be taken in order to complete the request.

- 4XX (client error): The request contains bad syntax or cannot be fulfilled.

- 5XX (server error): The server failed to fulfill an apparently valid request.
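The rule that the first digit defines the class, and that an unrecognized code is treated like the x00 code of its class, can be sketched as follows. The function names and the `known` parameter (the set of codes a particular client understands) are assumptions made for illustration.

```python
STATUS_CLASSES = {
    1: "informational",
    2: "successful",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def status_class(code: int) -> str:
    """Return the class name given by the first digit of a three-digit status code."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return STATUS_CLASSES[code // 100]


def effective_status(code: int, known: set) -> int:
    """Fall back to the x00 code of the class when the client does not recognize the code."""
    if code in known:
        return code
    status_class(code)  # validates the range before falling back
    return (code // 100) * 100
```

For instance, a client that only knows 200 and 404 would handle an unfamiliar 499 response as if it were 400.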

Response header fields

The response header fields allow the server to pass additional information beyond the status line, acting as response modifiers. They give information about the server or about further access to the target resource or related resources.

Each response header field has a defined meaning which can be further refined by the semantics of the request method or response status code.

Encrypted connections

The most popular way of establishing an encrypted HTTP connection is HTTPS.[40] Two other methods for establishing an encrypted HTTP connection also exist: Secure Hypertext Transfer Protocol, and using the HTTP/1.1 Upgrade header to specify an upgrade to TLS. Browser support for these two is, however, nearly non-existent.[41][42][43]

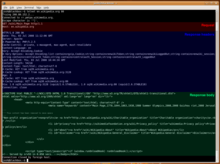

Example session

Below is a sample conversation between an HTTP client and an HTTP server running on www.example.com, port 80.

Client request

    GET / HTTP/1.1
    Host: www.example.com

A client request (consisting in this case of the request line and only one header field) is followed by a blank line, so that the request ends with a double newline, each in the form of a carriage return followed by a line feed. The "Host" field distinguishes between various DNS names sharing a single IP address, allowing name-based virtual hosting. While optional in HTTP/1.0, it is mandatory in HTTP/1.1. (A "/" (slash) will usually fetch a /index.html file if there is one.)

Server response

    HTTP/1.1 200 OK
    Date: Mon, 23 May 2005 22:38:34 GMT
    Content-Type: text/html; charset=UTF-8
    Content-Length: 155
    Last-Modified: Wed, 08 Jan 2003 23:11:55 GMT
    Server: Apache/1.3.3.7 (Unix) (Red-Hat/Linux)
    ETag: "3f80f-1b6-3e1cb03b"
    Accept-Ranges: bytes
    Connection: close

    <html>
    <head>
    <title>An Example Page</title>
    </head>
    <body>
    <p>Hello World, this is a very simple HTML document.</p>
    </body>
    </html>

The ETag (entity tag) header field is used to determine if a cached version of the requested resource is identical to the current version of the resource on the server. Content-Type specifies the Internet media type of the data conveyed by the HTTP message, while Content-Length indicates its length in bytes. The HTTP/1.1 web server publishes its ability to respond to requests for certain byte ranges of the document by setting the field Accept-Ranges: bytes. This is useful if the client needs only certain portions[44] of a resource sent by the server, which is called byte serving. When Connection: close is sent, it means that the web server will close the TCP connection immediately after the transfer of this response.

Most of the header lines are optional. When Content-Length is missing the length is determined in other ways. Chunked transfer encoding uses a chunk size of 0 to mark the end of the content. Identity encoding without Content-Length reads content until the socket is closed.
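To show how a zero-size chunk marks the end of the content, here is a minimal sketch of a decoder for a chunked body that has already been read into memory. The function name is hypothetical, and the sketch ignores chunk extensions and any trailer fields after the last chunk.

```python
def decode_chunked(data: bytes) -> bytes:
    """Decode a complete chunked message body held in memory (simplified sketch)."""
    body = bytearray()
    pos = 0
    while True:
        line_end = data.index(b"\r\n", pos)
        # The chunk size is hexadecimal; anything after ";" is a chunk extension.
        size_field = data[pos:line_end].split(b";", 1)[0]
        size = int(size_field.decode("ascii").strip(), 16)
        pos = line_end + 2
        if size == 0:
            break  # a chunk of size 0 marks the end of the content
        body += data[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF
    return bytes(body)
```

A real client would decode incrementally as data arrives on the socket rather than buffering the whole body first, which is the point of chunked transfer encoding.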

A Content-Encoding like gzip can be used to compress the transmitted data.
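As a brief illustration, the following sketch compresses a body with Python's standard `gzip` module and sets the header fields a server would send alongside it. The helper names are hypothetical, and header handling is reduced to a plain dictionary with exact-case keys.

```python
import gzip


def encode_body(body: bytes) -> tuple[bytes, dict]:
    """Compress a body and return it with the header fields describing it."""
    payload = gzip.compress(body)
    headers = {
        "Content-Encoding": "gzip",
        # Content-Length describes the bytes actually sent, i.e. the compressed payload.
        "Content-Length": str(len(payload)),
    }
    return payload, headers


def decode_body(payload: bytes, headers: dict) -> bytes:
    """Undo the content coding named in the headers, if any."""
    if headers.get("Content-Encoding") == "gzip":
        return gzip.decompress(payload)
    return payload
```

Compression pays off mainly for text formats such as HTML; very short bodies can grow slightly because of the gzip framing overhead.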

Similar protocols

- The Gopher protocol is a content delivery protocol that was displaced by HTTP in the early 1990s.

- The SPDY protocol is an alternative to HTTP developed at Google, superseded by HTTP/2.

- The Gemini protocol is a Gopher-inspired protocol which mandates privacy-related features.

See also


- Comparison of file transfer protocols

- Constrained Application Protocol – a protocol semantically similar to HTTP that uses UDP or UDP-like messages and is targeted at devices with limited processing capability; it re-uses HTTP and other Internet concepts such as Internet media type and web linking (RFC 5988)[45]

- Content negotiation

- Digest access authentication

- HTTP compression

- HTTP/2 – developed by the IETF's Hypertext Transfer Protocol (httpbis) working group[46]

- List of HTTP header fields

- List of HTTP status codes

- Representational state transfer (REST)

- Variant object

- Web cache

- WebSocket

References

1. Fielding, Roy T.; Gettys, James; Mogul, Jeffrey C.; Nielsen, Henrik Frystyk; Masinter, Larry; Leach, Paul J.; Berners-Lee, Tim (June 1999). Hypertext Transfer Protocol – HTTP/1.1. IETF. doi:10.17487/RFC2616. RFC 2616.

2. In RFC 2068. That specification was obsoleted by RFC 2616 in 1999, which was likewise replaced by RFC 7230 in 2014.

3. "Can I use... Support tables for HTML5, CSS3, etc". caniuse.com. Retrieved 2020-06-02.

4. "Transport Layer Security (TLS) Application-Layer Protocol Negotiation Extension". IETF. July 2014. RFC 7301.

5. Belshe, M.; Peon, R.; Thomson, M. "Hypertext Transfer Protocol Version 2, Use of TLS Features". Retrieved 2015-02-10.

6. Benjamin, David. "Using TLS 1.3 with HTTP/2". tools.ietf.org. Retrieved 2020-06-02. "This lowers the barrier for deploying TLS 1.3, a major security improvement over TLS 1.2."

7. Bishop, Mike (February 2, 2021). "Hypertext Transfer Protocol Version 3 (HTTP/3)". tools.ietf.org. Retrieved 2021-04-07.

8. Cimpanu, Catalin. "HTTP-over-QUIC to be renamed HTTP/3 | ZDNet". ZDNet. Retrieved 2018-11-19.

9. Cimpanu, Catalin (26 September 2019). "Cloudflare, Google Chrome, and Firefox add HTTP/3 support". ZDNet. Retrieved 27 September 2019.

10. "HTTP/3: the past, the present, and the future". The Cloudflare Blog. 2019-09-26. Retrieved 2019-10-30.

11. "Firefox Nightly supports HTTP 3 - General - Cloudflare Community". 2019-11-19. Retrieved 2020-01-23.

12. "Overall Operation". RFC 2616. p. 12. sec. 1.4. doi:10.17487/RFC2616. RFC 2616.

13. "Classic HTTP Documents". W3.org. 1998-05-14. Retrieved 2010-08-01.

14. "Invention Of The Web, Web History, Who Invented the Web, Tim Berners-Lee, Robert Cailliau, CERN, First Web Server". LivingInternet. Retrieved 2021-08-11.

15. Berners-Lee, Tim (1990-10-02). "daemon.c - TCP/IP based server for HyperText". www.w3.org. Retrieved 2021-08-11.

16. Berners-Lee, Tim. "HyperText Transfer Protocol". World Wide Web Consortium. Retrieved 31 August 2010.

17. Tim Berners-Lee. "The Original HTTP as defined in 1991". World Wide Web Consortium. Retrieved 24 July 2010.

18. Raggett, Dave. "Dave Raggett's Bio". World Wide Web Consortium. Retrieved 11 June 2010.

19. Raggett, Dave; Berners-Lee, Tim. "Hypertext Transfer Protocol Working Group". World Wide Web Consortium. Retrieved 29 September 2010.

20. Raggett, Dave. "HTTP WG Plans". World Wide Web Consortium. Retrieved 29 September 2010.

21. "HTTP/1.1". Webcom.com Glossary entry. Archived from the original on 2001-11-21. Retrieved 2009-05-29.

22. Fielding, Roy T.; Reschke, Julian F. (June 2014). Hypertext Transfer Protocol (HTTP/1.1): Authentication. IETF. doi:10.17487/RFC7235. RFC 7235.

23. "Message format". RFC 7230: HTTP/1.1 Message Syntax and Routing. p. 19. sec. 3. doi:10.17487/RFC7230. RFC 7230.

24. "Apache Week. HTTP/1.1". 090502 apacheweek.com

25. Berners-Lee, Tim; Fielding, Roy T.; Nielsen, Henrik Frystyk. "Method Definitions". Hypertext Transfer Protocol – HTTP/1.0. IETF. pp. 30–32. sec. 8. doi:10.17487/RFC1945. RFC 1945.

26. "Method Definitions". RFC 2616. pp. 51–57. sec. 9. doi:10.17487/RFC2616. RFC 2616.

27. "RFC 7230 section 3.1.1". Tools.ietf.org. Retrieved 2019-06-26.

28. "RFC 7231 section 4.1". Tools.ietf.org. Retrieved 2019-06-26.

29. "RFC 7230 section 3.2". Tools.ietf.org. Retrieved 2019-06-26.

30. Jacobs, Ian (2004). "URIs, Addressability, and the use of HTTP GET and POST". Technical Architecture Group finding. W3C. Retrieved 26 September 2010.

31. "POST". RFC 2616. p. 54. sec. 9.5. doi:10.17487/RFC2616. RFC 2616.

32. "PUT". RFC 2616. p. 55. sec. 9.6. doi:10.17487/RFC2616. RFC 2616.

33. "CONNECT". Hypertext Transfer Protocol – HTTP/1.1. IETF. June 1999. p. 57. sec. 9.9. doi:10.17487/RFC2616. RFC 2616. Retrieved 23 February 2014.

34. Khare, Rohit; Lawrence, Scott (May 2000). Upgrading to TLS Within HTTP/1.1. IETF. doi:10.17487/RFC2817. RFC 2817.

35. "Vulnerability Note VU#150227: HTTP proxy default configurations allow arbitrary TCP connections". US-CERT. 2002-05-17. Retrieved 2007-05-10.

36. Dusseault, Lisa; Snell, James M. (March 2010). PATCH Method for HTTP. IETF. doi:10.17487/RFC5789. RFC 5789.

37. "Method". RFC 2616. p. 36. sec. 5.1.1. doi:10.17487/RFC2616. RFC 2616.

38. Ediger, Brad (2007-12-21). Advanced Rails: Building Industrial-Strength Web Apps in Record Time. O'Reilly Media, Inc. p. 188. ISBN 978-0596519728. "A common mistake is to use GET for an action that updates a resource. [...] This problem came into the Rails public eye in 2005, when the Google Web Accelerator was released."

39. Cantrell, Christian (2005-06-01). "What Have We Learned From the Google Web Accelerator?". Adobe Blogs. Adobe. Archived from the original on 2017-08-19. Retrieved 2018-11-19.

40. Canavan, John (2001). Fundamentals of Networking Security. Norwood, MA: Artech House. pp. 82–83. ISBN 9781580531764.

41. Zalewski, Michal. "Browser Security Handbook". Retrieved 30 April 2015.

42. "Chromium Issue 4527: implement RFC 2817: Upgrading to TLS Within HTTP/1.1". Retrieved 30 April 2015.

43. "Mozilla Bug 276813 – [RFE] Support RFC 2817 / TLS Upgrade for HTTP 1.1". Retrieved 30 April 2015.

44. Luotonen, Ari; Franks, John (February 22, 1996). Byte Range Retrieval Extension to HTTP. IETF. I-D draft-ietf-http-range-retrieval-00.

45. Nottingham, Mark (October 2010). Web Linking. IETF. doi:10.17487/RFC5988. RFC 5988.

46. "Hypertext Transfer Protocol Bis (httpbis) – Charter". IETF. 2012.

External links


- "Change History for HTTP". W3.org. Retrieved 2010-08-01. A detailed technical history of HTTP.

- "Design Issues for HTTP". W3.org. Retrieved 2010-08-01. Design Issues by Berners-Lee when he was designing the protocol.

- HTTP 0.9 – As Implemented in 1991
