Digital Revolution

The Digital Revolution (also known as the Third Industrial Revolution) is the shift from mechanical and analogue electronic technology to digital electronics. It began in the latter half of the 20th century with the adoption and proliferation of digital computers and digital record-keeping, and continues to the present day.[1] Implicitly, the term also refers to the sweeping changes brought about by digital computing and communication technologies during this period. Analogous to the Agricultural Revolution and Industrial Revolution, the Digital Revolution marked the beginning of the Information Age.[2]

Central to this revolution is the mass production and widespread use of digital logic, MOSFETs (MOS transistors), integrated circuit (IC) chips, and their derived technologies, including computers, microprocessors, digital cellular phones, and the Internet.[3] These technological innovations have transformed traditional production and business techniques.[4]

History

Brief history

The underlying technology was invented in the 19th century, including Babbage's Analytical Engine and the telegraph. Digital communication became economical for widespread adoption after the invention of the personal computer. Claude Shannon, a Bell Labs mathematician, is credited with laying the foundations of digitalization in his pioneering 1948 article, A Mathematical Theory of Communication.[5] The digital revolution converted technology from analog format to digital format. This made it possible to make copies that were identical to the original. In digital communications, for example, repeating hardware was able to amplify the digital signal and pass it on with no loss of information in the signal. Of equal importance to the revolution was the ability to easily move digital information between media, and to access or distribute it remotely.
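The contrast between relaying an analog signal and regenerating a digital one can be illustrated with a small simulation. The Python sketch below is only an illustration under stated assumptions: the noise model (uniform noise of fixed amplitude added at every hop), the number of hops, and the function names `send_analog` and `send_digital` are hypothetical choices, not a description of any real repeater hardware.

```python
import random

def send_analog(levels, hops, noise, rng):
    """Pass values through `hops` links; each link adds noise that is never removed."""
    signal = list(levels)
    for _ in range(hops):
        signal = [v + rng.uniform(-noise, noise) for v in signal]
    return signal

def send_digital(bits, hops, noise, rng):
    """Pass bits through `hops` links; each repeater thresholds back to a clean 0 or 1."""
    signal = [float(b) for b in bits]
    for _ in range(hops):
        noisy = [v + rng.uniform(-noise, noise) for v in signal]
        # Regeneration step: decide 0 or 1, discarding the noise picked up on this hop.
        signal = [1.0 if v >= 0.5 else 0.0 for v in noisy]
    return [int(v) for v in signal]

rng = random.Random(0)  # fixed seed so the run is repeatable
bits = [rng.randint(0, 1) for _ in range(1000)]

# Assumed setup: 50 hops, per-hop noise amplitude 0.4 (below the 0.5 threshold).
received_bits = send_digital(bits, hops=50, noise=0.4, rng=rng)
received_analog = send_analog(bits, hops=50, noise=0.4, rng=rng)

bit_errors = sum(a != b for a, b in zip(bits, received_bits))
analog_error = max(abs(a - b) for a, b in zip(bits, received_analog))
print("digital bit errors:", bit_errors)
print("largest analog deviation:", round(analog_error, 3))
```

Because the per-hop noise in this sketch stays below the decision threshold, every repeater restores the exact bit pattern, while the analog chain carries the accumulated noise of all hops. With stronger noise the digital chain would also start to make errors, which is why real systems add error detection and correction.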

The turning point of the revolution was the change from analogue to digitally recorded music.[6] During the 1980s the digital format of optical compact discs gradually replaced analog formats, such as vinyl records and cassette tapes, as the popular medium of choice.[7]

1947–1969: Origins

In 1947, the first working transistor, the germanium-based point-contact transistor, was invented by John Bardeen and Walter Houser Brattain while working under William Shockley at Bell Labs.[8] This led the way to more advanced digital computers. From the late 1940s, universities, military, and businesses developed computer systems to digitally replicate and automate previously manually performed mathematical calculations, with the LEO being the first commercially available general-purpose computer.

Other important technological developments included the invention of the monolithic integrated circuit chip by Robert Noyce at Fairchild Semiconductor in 1959[9] (made possible by the planar process developed by Jean Hoerni),[10] the first successful metal-oxide-semiconductor field-effect transistor (MOSFET, or MOS transistor) by Mohamed Atalla and Dawon Kahng at Bell Labs in 1959,[11] and the development of the complementary MOS (CMOS) process by Frank Wanlass and Chih-Tang Sah at Fairchild in 1963.[12]

Following the development of MOS integrated circuit chips in the early 1960s, MOS chips reached higher transistor density and lower manufacturing costs than bipolar integrated circuits by 1964. MOS chips further increased in complexity at a rate predicted by Moore's law, leading to large-scale integration (LSI) with hundreds of transistors on a single MOS chip by the late 1960s. The application of MOS LSI chips to computing was the basis for the first microprocessors, as engineers began recognizing that a complete computer processor could be contained on a single MOS LSI chip.[13] In 1968, Fairchild engineer Federico Faggin improved MOS technology with his development of the silicon-gate MOS chip, which he later used to develop the Intel 4004, the first single-chip microprocessor.[14] It was released by Intel in 1971, and laid the foundations for the microcomputer revolution that began in the 1970s.

MOS technology also led to the development of semiconductor image sensors suitable for digital cameras.[15] The first such image sensor was the charge-coupled device, developed by Willard S. Boyle and George E. Smith at Bell Labs in 1969,[16] based on MOS capacitor technology.[15]

1969–1989: Invention of the Internet, rise of home computers

The public was first introduced to the concepts that led to the Internet when a message was sent over the ARPANET in 1969. Packet-switched networks such as ARPANET, Mark I, CYCLADES, Merit Network, Tymnet, and Telenet were developed in the late 1960s and early 1970s using a variety of protocols. The ARPANET in particular led to the development of protocols for internetworking, in which multiple separate networks could be joined together into a network of networks.

The Whole Earth movement of the 1960s advocated the use of new technology.[17]

The 1970s saw the introduction of the home computer,[18] time-sharing computers,[19] the video game console, and the first coin-op video games;[20][21] the golden age of arcade video games began with Space Invaders. As digital technology proliferated, and the switch from analog to digital record keeping became the new standard in business, a relatively new job description was popularized: the data entry clerk. Culled from the ranks of secretaries and typists from earlier decades, the data entry clerk's job was to convert analog data (customer records, invoices, etc.) into digital data.

In developed nations, computers achieved semi-ubiquity during the 1980s as they made their way into schools, homes, business, and industry. Automated teller machines, industrial robots, CGI in film and television, electronic music, bulletin board systems, and video games all fueled what became the zeitgeist of the 1980s. Millions of people purchased home computers, making household names of early personal computer manufacturers such as Apple, Commodore, and Tandy. To this day the Commodore 64 is often cited as the best selling computer of all time, having sold 17 million units (by some accounts)[22] between 1982 and 1994.

In 1984, the U.S. Census Bureau began collecting data on computer and Internet use in the United States; their first survey showed that 8.2% of all U.S. households owned a personal computer in 1984, and that households with children under the age of 18 were nearly twice as likely to own one at 15.3% (middle and upper middle class households were the most likely to own one, at 22.9%).[23] By 1989, 15% of all U.S. households owned a computer, and nearly 30% of households with children under the age of 18 owned one.[24] By the late 1980s, many businesses were dependent on computers and digital technology.

Motorola created the first mobile phone, the Motorola DynaTAC, in 1983. However, this device used analog communication; digital cell phones were not sold commercially until 1991, when the 2G network started to be opened in Finland to accommodate the unexpected demand for cell phones that was becoming apparent in the late 1980s.

Compute! magazine predicted that CD-ROM would be the centerpiece of the revolution, with multiple household devices reading the discs.[25]

The first true digital camera was created in 1988, and the first models were marketed in December 1989 in Japan and in 1990 in the United States.[26] By the mid-2000s, digital cameras had eclipsed traditional film in popularity.

Digital ink was also invented in the late 1980s. Disney's CAPS system (created 1988) was used for a scene in 1989's The Little Mermaid and for all their animation films between 1990's The Rescuers Down Under and 2004's Home on the Range.

1989–2005: Invention of the World Wide Web, mainstreaming of the Internet, Web 1.0

Tim Berners-Lee invented the World Wide Web in 1989.

The first public digital HDTV broadcast was of the 1990 World Cup that June; it was played in 10 theaters in Spain and Italy. However, HDTV did not become a standard until the mid-2000s outside Japan.

The World Wide Web, which had previously been available only to government and universities, became publicly accessible in 1991.[27] In 1993 Marc Andreessen and Eric Bina introduced Mosaic, the first web browser capable of displaying inline images[28] and the basis for later browsers such as Netscape Navigator and Internet Explorer. Stanford Federal Credit Union was the first financial institution to offer online internet banking services to all of its members in October 1994.[29] In 1996 OP Financial Group, also a cooperative bank, became the second online bank in the world and the first in Europe.[30] The Internet expanded quickly, and by 1996, it was part of mass culture and many businesses listed websites in their ads. By 1999, almost every country had a connection, and nearly half of Americans and people in several other countries used the Internet on a regular basis. However, throughout the 1990s, "getting online" entailed complicated configuration, and dial-up was the only connection type affordable by individual users; the present-day mass Internet culture was not yet possible.

In 1989, about 15% of all households in the United States owned a personal computer.[31] For households with children, nearly 30% owned a computer in 1989, and in 2000, 65% owned one.

Cell phones became as ubiquitous as computers by the early 2000s, with movie theaters beginning to show ads telling people to silence their phones. They also became much more advanced than phones of the 1990s, most of which only took calls or at most allowed for the playing of simple games.

Text messaging existed in the 1990s but was not widely used until the early 2000s, when it became a cultural phenomenon.

The digital revolution became truly global in this time as well: after revolutionizing society in the developed world in the 1990s, it spread to the masses in the developing world in the 2000s.

By 2000, a majority of U.S. households had at least one personal computer, and by the following year a majority had internet access.[32] In 2002, a majority of U.S. survey respondents reported having a mobile phone.[33]

2005–present: Web 2.0, social media, smartphones, digital TV

In late 2005 the number of Internet users reached 1 billion,[34] and 3 billion people worldwide used cell phones by the end of the decade. HDTV became the standard television broadcasting format in many countries by the end of the decade. In September and December 2006 respectively, Luxembourg and the Netherlands became the first countries to completely transition from analog to digital television. In September 2007, a majority of U.S. survey respondents reported having broadband internet at home.[35] According to estimates from Nielsen Media Research, approximately 45.7 million U.S. households in 2006 (approximately 40 percent of about 114.4 million) owned a dedicated home video game console,[36][37] and by 2015, 51 percent of U.S. households owned a dedicated home video game console according to an Entertainment Software Association annual industry report.[38][39] By 2012, over 2 billion people used the Internet, twice the number using it in 2007. Cloud computing had entered the mainstream by the early 2010s. In January 2013, a majority of U.S. survey respondents reported owning a smartphone.[40] By 2016, half of the world's population was connected[41] and as of 2020, that number has risen to 67%.[42]

Rise in the use of digital technology and computers, 1980–2020

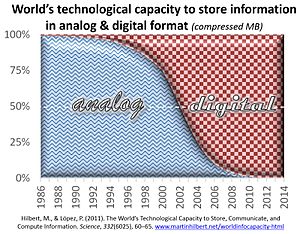

In the late 1980s, less than 1% of the world's technologically stored information was in digital format, while it was 94% in 2007, with more than 99% by 2014.[43]

It is estimated that the world's capacity to store information has increased from 2.6 (optimally compressed) exabytes in 1986, to some 5,000 exabytes in 2014 (5 zettabytes).[43][44]
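The two storage estimates above imply an average growth rate, which can be worked out with a short calculation. The Python sketch below assumes smooth compound growth between 1986 and 2014, which is a simplification made here and not a claim from the cited sources; the function name `annual_growth_rate` is likewise only illustrative.

```python
import math

def annual_growth_rate(start, end, years):
    """Compound annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1

# Figures from the text: 2.6 exabytes in 1986, about 5,000 exabytes in 2014.
rate = annual_growth_rate(start=2.6, end=5000.0, years=2014 - 1986)

# Time needed for capacity to double at that constant rate (assumed smooth growth).
doubling_years = math.log(2) / math.log(1 + rate)

print(f"implied growth per year: {rate:.1%}")
print(f"implied doubling time: {doubling_years:.1f} years")
```

Under that assumption the figures correspond to growth of roughly 31% per year, or a doubling of storage capacity about every two and a half years.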

1990

- Cell phone subscribers: 12.5 million (0.25% of world population in 1990)[45]

- Internet users: 2.8 million (0.05% of world population in 1990)[46]

2000

- Cell phone subscribers: 1.5 billion (19% of world population in 2002)[46]

- Internet users: 631 million (11% of world population in 2002)[46]

2010

- Cell phone subscribers: 4 billion (68% of world population in 2010)[47]

- Internet users: 1.8 billion (26.6% of world population in 2010)[41]

2020

- Cell phone subscribers: 4.78 billion (62% of world population in 2020)[48]

- Internet users: 4.54 billion (59% of world population in 2020)[49]

Converted technologies

Conversion of the following analog technologies to digital. (The decade indicated is the period when digital became the dominant form.)

- Analog computer to digital computer (1950s)

- Telex to fax (1980s)

- Phonograph cylinder, gramophone record and compact cassette to compact disc (1980s and 1990s, although sales of vinyl records have increased again in the 2010s among antique collectors)

- VHS to DVD (2000s)

- Analog photography (photographic plate and photographic film) to digital photography (2000s)

- Analog cinematography (film stock) to digital cinematography (2010s)

- Analog television to digital television (2010s)

- Analog radio to digital radio (2020s (expected))

- Analog mobile phone (1G) to digital mobile phone (2G) (1990s)

- Analog watch and clock to digital watch and clock (not yet predictable)

- Analog thermometer to digital thermometer (2010s)

- Offset printing to digital printing (2020s (expected))

Decline or disappearance of the following analog technologies:

- Mail (parcel to continue, others to be discontinued) (2020s (expected))[citation needed]

- Telegram (2010s)

- Typewriter (2010s)

Disappearance of other technologies also attributed to the digital revolution. (Analog–digital classification doesn't apply to these.)

- CRT (2010s)

- Plasma display (2010s)

- CCFL backlit LCDs (2010s)

Improvements in digital technologies.

- Desktop computer to laptop to tablet computer

- DVD to Blu-ray Disc to 4K Blu-ray Disc

- 2G to 3G to 4G to 5G

- Mobile phone to smartphone (2010s)

- Digital watch to smartwatch

- Analog weighing scale to digital weighing scale

Technological basis

The basic building block of the Digital Revolution is the metal-oxide-semiconductor field-effect transistor (MOSFET, or MOS transistor),[50] which is the most widely manufactured device in history.[51] It is the basis of every microprocessor, memory chip and telecommunication circuit in commercial use.[52] MOSFET scaling (rapid miniaturization of MOS transistors) has been largely responsible for enabling Moore's law, which predicted that transistor counts would increase at an exponential pace.[53][54][55]
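The exponential pace described by Moore's law can be made concrete with a small projection. The Python sketch below is an idealized illustration, not a fit to real chip data: it assumes a fixed two-year doubling period (the commonly quoted form of Moore's law) and takes as its starting point the roughly 2,300 transistors of the Intel 4004 in 1971. Both values are assumptions introduced here, and the function name `projected_transistors` is hypothetical.

```python
def projected_transistors(year, base_year=1971, base_count=2300, doubling_years=2.0):
    """Idealized exponential growth: the count doubles every `doubling_years`.

    The defaults (Intel 4004, about 2,300 transistors, two-year doubling)
    are illustrative assumptions, not values taken from the article.
    """
    return base_count * 2 ** ((year - base_year) / doubling_years)

for year in (1971, 1981, 1991, 2001, 2011):
    print(year, f"{projected_transistors(year):,.0f}")
```

In this model every decade contains five doublings, so the projected count grows by a factor of 32 every ten years. Real transistor counts only loosely follow such a curve.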

Following the development of the digital personal computer, MOS microprocessors and memory chips, with their steadily increasing performance and storage, have enabled computer technology to be embedded into a huge range of objects from cameras to personal music players. Also important was the development of transmission technologies including computer networking, the Internet and digital broadcasting. 3G phones, whose social penetration grew exponentially in the 2000s, also played a very large role in the digital revolution as they simultaneously provide ubiquitous entertainment, communications, and online connectivity.

Socio-economic impact

Positive aspects include greater interconnectedness, easier communication, and the exposure of information that in the past could have more easily been suppressed by totalitarian regimes. Michio Kaku wrote in his book Physics of the Future that the failure of the Soviet coup of 1991 was due largely to the existence of technology such as the fax machine and computers that exposed classified information.

The Revolutions of 2011 were enabled by social networking and smartphone technology; in hindsight, however, these revolutions largely failed to reach their goals, as hardcore Islamist governments, and in Syria a civil war, emerged in the absence of the dictatorships that were toppled.

The economic impact of the digital revolution has been wide-ranging. Without the World Wide Web (WWW), for example, globalization and outsourcing would not be nearly as feasible as they are today. The digital revolution radically changed the way individuals and companies interact. Small regional companies were suddenly given access to much larger markets. Concepts such as on-demand software services and manufacturing and rapidly dropping technology costs made possible innovations in all aspects of industry and everyday life.

After initial concerns of an IT productivity paradox, evidence is mounting that digital technologies have significantly increased the productivity and performance of businesses.[56]

Digital transformation allowed technology to adapt continuously, which boosted the economy by increasing productivity. As technical advances have accumulated, the digital revolution has created a demand for new job skills. Economically, retailers, trucking companies and banks have transitioned to digital formats. In addition, the introduction of cryptocurrencies such as Bitcoin enables faster and more secure transactions.[57]

Negative effects include information overload, Internet predators, forms of social isolation, and media saturation. In a poll of prominent members of the national news media, 65 percent said the Internet is hurting journalism more than it is helping[58] by allowing anyone, no matter how amateur and unskilled, to become a journalist, which muddies information and fuels the rise of conspiracy theories in a way that did not exist in the past.

In some cases, company employees' pervasive use of portable digital devices and work-related computers for personal purposes (email, instant messaging, computer games) was often found to, or perceived to, reduce those companies' productivity. Personal computing and other non-work-related digital activities in the workplace thus helped lead to stronger forms of privacy invasion, such as keystroke recording and information filtering applications (spyware and content-control software).

Information sharing and privacy

Privacy in general became a concern during the digital revolution. The ability to store and utilize such large amounts of diverse information opened possibilities for tracking of individual activities and interests. Libertarians and privacy rights advocates feared the possibility of an Orwellian future where centralized power structures control the populace via automatic surveillance and monitoring of personal information in such programs as DARPA's Information Awareness Office.[59] Consumer and labor advocates opposed the ability to market directly to individuals, discriminate in hiring and lending decisions, invasively monitor employee behavior and communications, and generally profit from involuntarily shared personal information.

The Internet, especially the WWW in the 1990s, opened whole new avenues for communication and information sharing. The ability to easily and rapidly share information on a global scale brought with it a whole new level of freedom of speech. Individuals and organizations were suddenly given the ability to publish on any topic, to a global audience, at a negligible cost, particularly in comparison to any previous communication technology.

Large cooperative projects could be undertaken (e.g. open-source software projects, SETI@home). Communities of like-minded individuals were formed (e.g. MySpace, Tribe.net). Small regional companies were suddenly given access to a larger marketplace.

In other cases, special interest groups as well as social and religious institutions found much of the content objectionable, even dangerous. Many parents and religious organizations, especially in the United States, became alarmed by pornography being more readily available to minors. In other circumstances the proliferation of information on such topics as child pornography, building bombs, committing acts of terrorism, and other violent activities was alarming to many different groups of people. Such concerns contributed to arguments for censorship and regulation on the WWW.

Copyright and trademark issues

Copyright and trademark issues also found new life in the digital revolution. The widespread ability of consumers to produce and distribute exact reproductions of protected works dramatically changed the intellectual property landscape, especially in the music, film, and television industries.

The digital revolution, especially regarding privacy, copyright, censorship and information sharing, remains a controversial topic. As the digital revolution progresses it remains unclear to what extent society has been impacted and will be altered in the future.

With the advancement of digital technology, copyright infringements will become difficult to detect. They will occur more frequently and will be difficult to prove, and the public will continue to find loopholes around the law. Digital recorders, for example, can be used personally and privately, making the distribution of copyrighted material discreet.[60]

Concerns

While there have been huge benefits to society from the digital revolution, especially in terms of the accessibility of information, there are a number of concerns. Expanded powers of communication and information sharing, increased capabilities for existing technologies, and the advent of new technology brought with them many potential opportunities for exploitation. The digital revolution helped usher in a new age of mass surveillance, generating a range of new civil and human rights issues. Reliability of data became an issue as information could easily be replicated, but not easily verified. For example, the introduction of cryptocurrency opens up possibilities for illegal trade, such as the sale of drugs and guns and other black-market transactions.[57] The digital revolution made it possible to store and track facts, articles, statistics, as well as minutiae hitherto unfeasible.

From the perspective of the historian, a large part of human history is known through physical objects from the past that have been found or preserved, particularly in written documents. Digital records are easy to create but also easy to delete and modify. Changes in storage formats can make recovery of data difficult or near impossible, as can the storage of information on obsolete media for which reproduction equipment is unavailable, and even identifying what such data is and whether it is of interest can be near impossible if it is no longer easily readable, or if there is a large number of such files to identify. Information passed off as authentic research or study must be scrutinized and verified.[citation needed]

These problems are further compounded by the use of digital rights management and other copy prevention technologies which, being designed to only allow the data to be read on specific machines, may well make future data recovery impossible. The Voyager Golden Record, which is intended to be read by an intelligent extraterrestrial (perhaps a suitable parallel to a human from the distant future), is recorded in analog rather than digital format specifically for easy interpretation and analysis.

See also

- Revolution

- Neolithic Revolution

- Agricultural Revolution

- Scientific Revolution

- Industrial Revolution

- Second Industrial Revolution

- Environmental revolution

- Information revolution

- Microcomputer revolution

- Nanotechnology

- Technological revolution

- The Triple Revolution

- Dot-com company

- Digital native

- Digital omnivore

- Digital addict

- Digital phobic

- Electronic document

- Fourth Industrial Revolution

- Great Regression

- Indigo Era

- Japanese economic miracle, a period of rapid growth and innovation in Japan which roughly coincided with the Third Industrial Revolution

- Paperless office

- Post Cold War era

- Telework

- Timeline of electrical and electronic engineering

References

- ^ E. Schoenherr, Steven (5 May 2004). "The Digital Revolution". Archived from the original on 7 October 2008.

- ^ "Information Age".

- ^ Debjani, Roy (2014). "Cinema in the Age of Digital Revolution" (PDF).

- ^ Bojanova, Irena (2014). "The Digital Revolution: What's on the Horizon?". IT Professional (Jan.-Feb. 2014). 16 (1): 8–12. doi:10.1109/MITP.2014.11. S2CID 28110209.

- ^ Shannon, Claude E.; Weaver, Warren (1963). The mathematical theory of communication (4. print. ed.). Urbana: University of Illinois Press. p. 144. ISBN 0252725484.

- ^ "Museum Of Applied Arts And Sciences - About". Museum of Applied Arts and Sciences. Retrieved 22 August 2017.

- ^ "The Digital Revolution Ahead for the Audio Industry," Business Week. New York, 16 March 1981, p. 40D.

- ^ Phil Ament (17 April 2015). "Transistor History - Invention of the Transistor". Archived from the original on 13 August 2011. Retrieved 17 April 2015.

- ^ Saxena, Arjun (2009). Invention of Integrated Circuits: Untold Important Facts. pp. x–xi.

- ^ Saxena, Arjun (2009). Invention of Integrated Circuits: Untold Important Facts. pp. 102–103.

- ^ "1960: Metal Oxide Semiconductor (MOS) Transistor Demonstrated".

- ^ "1963: Complementary MOS Circuit Configuration is Invented".

- ^ Shirriff, Ken (30 August 2016). "The Surprising Story of the First Microprocessors". IEEE Spectrum. Institute of Electrical and Electronics Engineers. 53 (9): 48–54. doi:10.1109/MSPEC.2016.7551353. S2CID 32003640. Retrieved 13 October 2019.

- ^ "1971: Microprocessor Integrates CPU Function onto a Single Chip". Computer History Museum.

- ^ Williams, J. B. (2017). The Electronics Revolution: Inventing the Future. Springer. pp. 245–8. ISBN 9783319490885.

- ^ James R. Janesick (2001). Scientific charge-coupled devices. SPIE Press. pp. 3–4. ISBN 978-0-8194-3698-6.

- ^ "History of Whole Earth Catalog". Retrieved 17 April 2015.

- ^ "Personal Computer Milestones". Retrieved 17 April 2015.

- ^ Criss, Fillur (14 August 2014). "2,076 IT jobs from 492 companies". ICTerGezocht.nl (in Dutch). Retrieved 19 August 2017.

- ^ "Atari - Arcade/Coin-op". Archived from the original on 2 November 2014. Retrieved 17 April 2015.

- ^ Vincze Miklós. "Forgotten arcade games let you shoot space men and catch live lobsters". io9. Retrieved 17 April 2015.

- ^ "How many Commodore 64 computers were really sold?". pagetable.com. Archived from the original on 6 March 2016. Retrieved 17 April 2015.

- ^ "Archived copy" (PDF). Archived from the original (PDF) on 2 April 2013. Retrieved 20 December 2017.

- ^ https://www.census.gov/hhes/computer/files/1989/p23-171.pdf

- ^ "COMPUTE! magazine issue 93 Feb 1988". February 1988. "If the wheels behind the CD-ROM industry have their way, this product will help open the door to a brave, new multimedia world for microcomputers, where the computer is intimately linked with the other household electronics, and every gadget in the house reads tons of video, audio, and text data from CD-ROM disks."

- ^ "1988". Retrieved 17 April 2015.

- ^ Martin Bryant (6 August 2011). "20 years ago today, the World Wide Web was born - TNW Insider". The Next Web. Retrieved 17 April 2015.

- ^ "The World Wide Web". Retrieved 17 April 2015.

- ^ "Stanford Federal Credit Union Pioneers Online Financial Services" (Press release). 21 June 1995.

- ^ "History - About us - OP Group".

- ^ https://www.census.gov/prod/2005pubs/p23-208.pdf

- ^ File, Thom (May 2013). Computer and Internet Use in the United States (PDF) (Report). Current Population Survey Reports. Washington, D.C.: U.S. Census Bureau. Retrieved 11 February 2020.

- ^ Tuckel, Peter; O'Neill, Harry (2005). Ownership and Usage Patterns of Cell Phones: 2000-2005 (PDF) (Report). JSM Proceedings, Survey Research Methods Section. Alexandria, VA: American Statistical Association. p. 4002. Retrieved 25 September 2020.

- ^ "One Billion People Online!". Archived from the original on 22 October 2008. Retrieved 17 April 2015.

- ^ "Demographics of Internet and Home Broadband Usage in the United States". Pew Research Center. 7 April 2021. Retrieved 19 May 2021.

- ^ Arendt, Susan (5 March 2007). "Game Consoles in 41% of Homes". WIRED. Condé Nast. Retrieved 29 June 2021.

- ^ Statistical Abstract of the United States: 2008 (PDF) (Report). Statistical Abstract of the United States (127 ed.). U.S. Census Bureau. 30 December 2007. p. 52. Retrieved 29 June 2021.

- ^ North, Dale (14 April 2015). "155M Americans play video games, and 80% of households own a gaming device". VentureBeat. Retrieved 29 June 2021.

- ^ 2015 Essential Facts About the Computer and Video Game Industry (Report). Essential Facts About the Computer and Video Game Industry. 2015. Entertainment Software Association. Retrieved 29 June 2021.

- ^ "Demographics of Mobile Device Ownership and Adoption in the United States". Pew Research Center. 7 April 2021. Retrieved 19 May 2021.

- ^ "World Internet Users Statistics and 2014 World Population Stats". Retrieved 17 April 2015.

- ^ Clement. "Worldwide digital population as of April 2020". Statista. Retrieved 21 May 2020.

- ^ "The World’s Technological Capacity to Store, Communicate, and Compute Information", especially Supporting online material, Martin Hilbert and Priscila López (2011), Science, 332(6025), 60-65; free access to the article: http://www.martinhilbert.net/worldinfocapacity-html/

- ^ Information in the Biosphere: Biological and Digital Worlds, doi:10.1016/j.tree.2015.12.013, Gillings, M. R., Hilbert, M., & Kemp, D. J. (2016), Trends in Ecology & Evolution, 31(3), 180–189

- ^ "Worldmapper: The world as you've never seen it before - Cellular Subscribers 1990". Retrieved 17 April 2015.

- ^ "Worldmapper: The world as you've never seen it before - Communication Maps". Retrieved 17 April 2015.

- ^ Arms, Michael (2013). "Cell Phone Dangers - Protecting Our Homes From Cell Phone Radiation". Computer User. Archived from the original on 29 March 2014.

- ^ "Number of mobile phone users worldwide 2015-2020". Statista. Retrieved 19 February 2020.

- ^ "Global digital population 2020". Statista. Retrieved 19 February 2020.

- ^ Wong, Kit Po (2009). Electrical Engineering - Volume II. EOLSS Publications. p. 7. ISBN 9781905839780.

- ^ "13 Sextillion & Counting: The Long & Winding Road to the Most Frequently Manufactured Human Artifact in History". Computer History Museum. 2 April 2018.

- ^ Colinge, Jean-Pierre; Greer, James C. (2016). Nanowire Transistors: Physics of Devices and Materials in One Dimension. Cambridge University Press. p. 2. ISBN 9781107052406.

- ^ Motoyoshi, M. (2009). "Through-Silicon Via (TSV)". Proceedings of the IEEE. 97 (1): 43–48. doi:10.1109/JPROC.2008.2007462. ISSN 0018-9219. S2CID 29105721.

- ^ "Tortoise of Transistors Wins the Race - CHM Revolution". Computer History Museum. Retrieved 22 July 2019.

- ^ "Transistors Keep Moore's Law Alive". EETimes. 12 December 2018. Retrieved 18 July 2019.

- ^ Hitt, Lorin M.; Brynjolfsson, Erik (June 2003). "Computing Productivity: Firm-Level Evidence". SSRN 290325.

- ^ Hodson, Richard (28 November 2018). "Digital revolution". Nature. 563 (7733): S131. Bibcode:2018Natur.563S.131H. doi:10.1038/d41586-018-07500-z. PMID 30487631.

- ^ Cyra Master (10 April 2009). "Media Insiders Say Internet Hurts Journalism". The Atlantic. Retrieved 17 April 2015.

- ^ John Markoff (22 November 2002). "Pentagon Plans a Computer System That Would Peek at Personal Data of Americans". The New York Times.

- ^ Fleischmann, Eric (1987). "The Impact of Digital Technology on Copyright Law, 8 Computer L.J. 1". The John Marshall Journal of Information Technology & Privacy Law. 8.
