U-Net


U-Net is a convolutional neural network that was developed for biomedical image segmentation at the Computer Science Department of the University of Freiburg.[1] The network is based on the fully convolutional network (FCN),[2] whose architecture was modified and extended to work with fewer training images and to yield more precise segmentations. Segmentation of a 512 × 512 image takes less than a second on a modern GPU.

Description

The U-Net architecture stems from the so-called “fully convolutional network” first proposed by Long, Shelhamer, and Darrell.[2]

The main idea is to supplement a usual contracting network with successive layers in which pooling operations are replaced by upsampling operators. These layers therefore increase the resolution of the output. To localize, high-resolution features from the contracting path are combined with the upsampled output, and a subsequent convolutional layer can then learn to assemble a more precise output based on this information.[1]
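The contracting and expanding steps can be illustrated on a toy single-channel image. This is a minimal sketch, not U-Net itself: the helper names `max_pool_2x2` and `upsample_2x` are hypothetical, and simple nearest-neighbour upsampling stands in for the learned up-convolution that U-Net actually uses.

```python
def max_pool_2x2(image):
    """2 x 2 max pooling with stride 2: halves height and width (rounding down)."""
    h, w = len(image), len(image[0])
    return [
        [max(image[i][j], image[i][j + 1], image[i + 1][j], image[i + 1][j + 1])
         for j in range(0, w - 1, 2)]
        for i in range(0, h - 1, 2)
    ]


def upsample_2x(image):
    """Nearest-neighbour upsampling: doubles height and width.
    (A stand-in for U-Net's learned 2 x 2 up-convolution.)"""
    out = []
    for row in image:
        wide = [v for v in row for _ in range(2)]  # repeat each pixel horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat the row vertically
    return out


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]

pooled = max_pool_2x2(image)     # contracting step: 4 x 4 -> 2 x 2
restored = upsample_2x(pooled)   # expanding step: 2 x 2 -> 4 x 4
print(pooled)
print(restored)
```

The upsampled map has the original resolution again, but each value is repeated over a 2 × 2 block: the detail discarded by pooling does not come back on its own. This is why the expanding layers are combined with high-resolution features copied from the contracting path.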

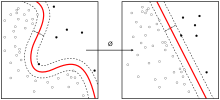

One important modification in U-Net is that there is a large number of feature channels in the upsampling part, which allows the network to propagate context information to higher-resolution layers. As a consequence, the expansive path is more or less symmetric to the contracting path and yields a U-shaped architecture. The network uses only the valid part of each convolution (that is, no padding) and has no fully connected layers.[2] The output segmentation map therefore contains only the pixels for which the full context is available in the input image, which allows large images to be segmented seamlessly tile by tile (an overlap-tile strategy). To predict the pixels in the border region of the image, the missing context is extrapolated by mirroring the input image. This tiling strategy is important for applying the network to large images, since otherwise the resolution would be limited by GPU memory.
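The effect of valid convolutions and mirroring at the border can be shown on a 3 × 3 image. In this minimal sketch (the function names are hypothetical, and the reflection mode, which does not repeat the edge pixel, is an assumption; the paper says only that the input is mirrored), a valid 3 × 3 convolution alone keeps just the single pixel with full context, while mirroring the image by one pixel first supplies the missing context and yields an output for every input pixel.

```python
def mirror_pad(image, p):
    """Extend a 2-D image by p pixels on every side by mirroring it at its
    borders (edge pixels are not repeated, like NumPy's 'reflect' mode)."""
    h, w = len(image), len(image[0])
    if p >= min(h, w):
        raise ValueError("padding must be smaller than the image size")

    def reflect(idx, n):
        # Mirror an out-of-range index back into 0..n-1 (valid while p < n).
        if idx < 0:
            return -idx
        if idx >= n:
            return 2 * (n - 1) - idx
        return idx

    return [[image[reflect(i, h)][reflect(j, w)] for j in range(-p, w + p)]
            for i in range(-p, h + p)]


def valid_conv3x3(image, kernel):
    """Unpadded ('valid') 3 x 3 convolution, written as cross-correlation as in
    CNN libraries: the output is 2 pixels smaller in each dimension."""
    h, w = len(image), len(image[0])
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(3) for b in range(3))
             for j in range(w - 2)]
            for i in range(h - 2)]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
ones = [[1, 1, 1] for _ in range(3)]  # toy kernel: sums each 3 x 3 neighbourhood

print(valid_conv3x3(image, ones))                 # only the centre pixel survives
print(valid_conv3x3(mirror_pad(image, 1), ones))  # mirrored context: full 3 x 3 output
```

In a real U-Net the mirrored margin is much wider, because many valid convolutions are stacked, but the principle is the same.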

History

U-Net was created by Olaf Ronneberger, Philipp Fischer, and Thomas Brox, who introduced it in 2015 in the paper “U-Net: Convolutional Networks for Biomedical Image Segmentation”.[1] It is an improvement and development of the FCN of Evan Shelhamer, Jonathan Long, and Trevor Darrell, described in “Fully Convolutional Networks for Semantic Segmentation” (2014).[2]

Network architecture

The network consists of a contracting path and an expansive path, which give it its U-shaped architecture. The contracting path is a typical convolutional network that consists of repeated application of convolutions, each followed by a rectified linear unit (ReLU), with a max pooling operation after each stage; in the original design, each stage has two 3 × 3 convolutions followed by a 2 × 2 max pooling with stride 2.[1] During the contraction, the spatial information is reduced while feature information is increased. The expansive path combines the feature and spatial information through a sequence of up-convolutions and concatenations with high-resolution features from the contracting path.[3]
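Because every convolution is unpadded, the feature maps shrink as they pass through the network, and the high-resolution features must be cropped before they are concatenated in the expansive path. The following sketch traces the spatial size of a square tile through the U. The function `unet_shapes` and its parameters are hypothetical; the default layout (four pooling steps, two valid 3 × 3 convolutions per level, 2 × 2 pooling and 2 × 2 up-convolutions) is assumed to follow the original paper.

```python
def unet_shapes(input_size, depth=4, convs_per_level=2, kernel=3):
    """Trace the spatial size of a square tile through a U-Net that uses only
    unpadded ('valid') convolutions.

    Assumed layout: at every level, `convs_per_level` valid k x k convolutions,
    each removing k - 1 pixels; 2 x 2 max pooling between levels of the
    contracting path; a 2 x 2 up-convolution doubling the size at each level
    of the expansive path.
    Returns the output size and, for every skip connection, a pair
    (encoder feature size, decoder feature size): the encoder features must be
    center-cropped to the decoder size before concatenation.
    """
    shrink = convs_per_level * (kernel - 1)
    size = input_size
    skip_sizes = []
    for _ in range(depth):                 # contracting path
        size -= shrink                     # valid convolutions
        if size <= 0 or size % 2:
            raise ValueError(f"size {size} cannot be max-pooled by 2 x 2")
        skip_sizes.append(size)            # copied across to the expansive path
        size //= 2                         # 2 x 2 max pooling, stride 2
    size -= shrink                         # convolutions at the bottom of the "U"
    if size <= 0:
        raise ValueError("input tile too small for this depth")
    crops = []
    for skip in reversed(skip_sizes):      # expansive path
        size *= 2                          # 2 x 2 up-convolution
        crops.append((skip, size))         # crop skip features to `size`
        size -= shrink                     # valid convolutions after concatenation
        if size <= 0:
            raise ValueError("input tile too small for this depth")
    return size, crops


out_size, crops = unet_shapes(572)
print(out_size)                 # spatial size of the segmentation map
print(crops)                    # (encoder, decoder) sizes at each skip connection
print((572 - out_size) // 2)    # context pixels consumed on each side of the tile
```

For a 572 × 572 input tile this arithmetic gives a 388 × 388 output, with crops from 568 to 392, 280 to 200, 136 to 104 and 64 to 56 at the skip connections. The 92 pixels lost on each side are the context that neighbouring tiles, or mirroring at the image border, must supply.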

Applications

U-Net has many applications in biomedical image segmentation, such as brain image segmentation (BraTS[4]) and liver image segmentation (SLIVER07[5]). It has also been used for protein binding site prediction,[6] and variations of U-Net have been applied to medical image reconstruction.[7] Variants and applications of U-Net include:

- Pixel-wise regression using U-Net and its application on pansharpening[8]

- 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation[9]

- TernausNet: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation[10]

- Image-to-image translation to estimate fluorescent stains[11]

- Binding site prediction in protein structures[6]

Implementations

- jakeret (2017): "Tensorflow Unet"[12]

- U-Net source code from the Pattern Recognition and Image Processing group at the Computer Science Department of the University of Freiburg, Germany.[13]

The basic articles on the system[1][2][9][10] have been cited 3693, 7049, 442 and 22 times respectively on Google Scholar as of December 24, 2018.[14]

References

- [1] Ronneberger O, Fischer P, Brox T (2015). "U-Net: Convolutional Networks for Biomedical Image Segmentation". arXiv:1505.04597 [cs.CV].

- [2] Shelhamer E, Long J, Darrell T (April 2017). "Fully Convolutional Networks for Semantic Segmentation". IEEE Transactions on Pattern Analysis and Machine Intelligence. 39 (4): 640–651. arXiv:1411.4038. doi:10.1109/TPAMI.2016.2572683. PMID 27244717. S2CID 1629541.

- [3] "U-Net code".

- [4] "MICCAI BraTS 2017: Scope | Section for Biomedical Image Analysis (SBIA) | Perelman School of Medicine at the University of Pennsylvania". www.med.upenn.edu. Retrieved 2018-12-24.

- [5] "SLIVER07 : Home". www.sliver07.org. Retrieved 2018-12-24.

- [6] Nazem F, Ghasemi F, Fassihi A, Dehnavi AM (April 2021). "3D U-Net: A voxel-based method in binding site prediction of protein structure". Journal of Bioinformatics and Computational Biology. 19 (2): 2150006. doi:10.1142/S0219720021500062. PMID 33866960.

- [7] Andersson J, Ahlström H, Kullberg J (September 2019). "Separation of water and fat signal in whole-body gradient echo scans using convolutional neural networks". Magnetic Resonance in Medicine. 82 (3): 1177–1186. doi:10.1002/mrm.27786. PMC 6618066. PMID 31033022.

- [8] Yao W, Zeng Z, Lian C, Tang H (2018-10-27). "Pixel-wise regression using U-Net and its application on pansharpening". Neurocomputing. 312: 364–371. doi:10.1016/j.neucom.2018.05.103. ISSN 0925-2312. S2CID 207119255.

- [9] Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O (2016). "3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation". arXiv:1606.06650 [cs.CV].

- [10] Iglovikov V, Shvets A (2018). "TernausNet: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation". arXiv:1801.05746 [cs.CV].

- [11] Kandel ME, He YR, Lee YJ, Chen TH, Sullivan KM, Aydin O, et al. (December 2020). "Phase imaging with computational specificity (PICS) for measuring dry mass changes in sub-cellular compartments". Nature Communications. 11 (1): 6256. arXiv:2002.08361. doi:10.1038/s41467-020-20062-x. PMC 7721808. PMID 33288761.

- [12] Akeret J (2018-12-24). "Generic U-Net Tensorflow implementation for image segmentation: jakeret/tf_unet". Retrieved 2018-12-24.

- [13] "U-Net: Convolutional Networks for Biomedical Image Segmentation". lmb.informatik.uni-freiburg.de. Retrieved 2018-12-24.

- [14] "U-Net Google Scholar citation data".
