Anomaly detection

In data analysis, anomaly detection (also called outlier detection)[1] is the identification of rare items, events or observations which raise suspicions by differing significantly from the majority of the data.[1] Typically, the anomalous items indicate some kind of problem, such as bank fraud, a structural defect, a medical problem or errors in a text. Anomalies are also referred to as outliers, novelties, noise, deviations and exceptions.[2]

In particular, in the context of abuse and network intrusion detection, the interesting objects are often not rare objects but unexpected bursts in activity. This pattern does not adhere to the common statistical definition of an outlier as a rare object, and many outlier detection methods (in particular unsupervised methods) will fail on such data unless the data have been aggregated appropriately. Instead, a cluster analysis algorithm may be able to detect the micro-clusters formed by these patterns.[3]
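The effect of aggregation can be illustrated with a minimal sketch. It assumes events are given as timestamps in seconds, counts them per fixed-size window, and flags windows whose count lies more than `k` standard deviations above the mean count. The function name `burst_windows` and the parameters `window` and `k` are hypothetical choices for illustration, not taken from the cited work.

```python
from collections import Counter
from statistics import mean, pstdev


def burst_windows(timestamps, window=60, k=2.0):
    """Return start times of windows whose event count is unusually high.

    Assumption: a simple z-score on per-window counts stands in for
    "appropriate aggregation"; real systems may aggregate differently.
    """
    if not timestamps:
        return []
    # Aggregate individual events into counts per fixed-size window.
    counts = Counter(int(t // window) for t in timestamps)
    first, last = min(counts), max(counts)
    # Include empty windows so that quiet periods contribute to the baseline.
    series = [counts.get(w, 0) for w in range(first, last + 1)]
    mu, sigma = mean(series), pstdev(series)
    if sigma == 0:
        return []  # perfectly uniform activity: nothing stands out
    return [
        (first + i) * window
        for i, count in enumerate(series)
        if (count - mu) / sigma > k
    ]


# One event per minute for ten minutes, plus 19 extra events in minute five.
events = [w * 60 + 1 for w in range(10)] + [300 + i for i in range(2, 21)]
bursts = burst_windows(events, window=60, k=2.0)
```

None of the individual events in the burst is rare by itself; only the aggregated count for that window deviates from the rest.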

Three broad categories of anomaly detection techniques exist.[4] Unsupervised anomaly detection techniques detect anomalies in an unlabeled test data set, under the assumption that the majority of the instances in the data set are normal, by looking for instances that seem to fit the remainder of the data set least. Supervised anomaly detection techniques require a data set that has been labeled as "normal" and "abnormal" and involve training a classifier (the key difference from many other statistical classification problems is the inherently unbalanced nature of outlier detection). Semi-supervised anomaly detection techniques construct a model representing normal behavior from a given normal training data set, and then test the likelihood that a test instance was generated by the learned model.
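The semi-supervised setting can be sketched as follows. This is a minimal illustration, assuming one-dimensional data and a Gaussian model of normal behavior; the class name `GaussianNormalModel` and the choice of threshold (the lowest log-likelihood seen in the training data) are hypothetical and only one of many possible choices.

```python
import math
from statistics import mean, stdev


class GaussianNormalModel:
    """Model of normal behavior fitted only on data assumed to be normal."""

    def fit(self, normal_values):
        self.mu = mean(normal_values)
        self.sigma = stdev(normal_values)
        if self.sigma == 0:
            raise ValueError("training data must contain some variation")
        # Assumed threshold: the least likely value seen during training.
        self.threshold = min(self.log_likelihood(x) for x in normal_values)
        return self

    def log_likelihood(self, x):
        # Log-density of x under the fitted normal distribution.
        z = (x - self.mu) / self.sigma
        return -0.5 * z * z - math.log(self.sigma * math.sqrt(2 * math.pi))

    def is_anomaly(self, x):
        # A test instance is flagged if the model finds it less likely
        # than anything it saw in the normal training set.
        return self.log_likelihood(x) < self.threshold


model = GaussianNormalModel().fit([9.8, 10.1, 10.0, 9.9, 10.2])
flags = [model.is_anomaly(10.05), model.is_anomaly(12.0)]
```

No "abnormal" labels are needed for training, which is what distinguishes this setting from the supervised one.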

Applications

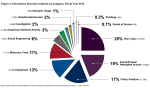

Anomaly detection is applicable in a variety of domains, such as intrusion detection, fraud detection, fault detection, system health monitoring, event detection in sensor networks, detecting ecosystem disturbances, and defect detection in images using machine vision.[5] It is often used in preprocessing to remove anomalous data from the dataset. In supervised learning, removing the anomalous data from the dataset often results in a statistically significant increase in accuracy.[6][7]

Popular techniques

Several anomaly detection techniques have been proposed in the literature.[8] Some of the popular techniques are:

- Density-based techniques (k-nearest neighbor,[9][10][11] local outlier factor,[12] isolation forests,[13][14] and many more variations of this concept[15]).

- Subspace-,[16] correlation-based[17] and tensor-based[18] outlier detection for high-dimensional data.[19]

- One-class support vector machines.[20]

- Replicator neural networks,[21] autoencoders, variational autoencoders,[22] and long short-term memory neural networks.[23]

- Bayesian networks.[21]

- Hidden Markov models (HMMs).[21]

- Cluster analysis-based outlier detection.[24][25]

- Deviations from association rules and frequent itemsets.

- Fuzzy logic-based outlier detection.

- Ensemble techniques, using feature bagging,[26][27] score normalization[28][29] and different sources of diversity.[30][31]
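As an illustration of the first family in the list above, the following minimal sketch scores each point by the distance to its k-th nearest neighbor, one common formulation of k-nearest-neighbor outlier detection. The function name `knn_outlier_scores` is hypothetical, the search is brute force, and the sketch assumes Euclidean distance and more than `k` points.

```python
import math


def knn_outlier_scores(points, k=2):
    """Score each point by the distance to its k-th nearest neighbor.

    Larger scores mean the point lies in a sparser region. Brute-force
    O(n^2) search; toolkits typically use index structures instead.
    """
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, nearest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores


points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10)]
scores = knn_outlier_scores(points, k=2)
most_anomalous = points[scores.index(max(scores))]
```

The isolated point far from the unit square receives the largest score, while the four clustered points receive small ones.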

The performance of different methods depends heavily on the data set and parameters, and no method shows much systematic advantage over the others when compared across many data sets and parameters.[32][33]

Application to data security

Anomaly detection was proposed for intrusion detection systems (IDS) by Dorothy Denning in 1986.[34] Anomaly detection for IDS is normally accomplished with thresholds and statistics, but can also be done with soft computing and inductive learning.[35] Types of statistics proposed by 1999 included profiles of users, workstations, networks, remote hosts, groups of users, and programs, based on frequencies, means, variances, covariances, and standard deviations.[36] The counterpart of anomaly detection in intrusion detection is misuse detection.
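A threshold-and-statistics approach of this kind might look like the following minimal sketch. It assumes each user's profile is the mean and standard deviation of a single measure (here, past daily login counts) and flags an observation that lies more than `k` standard deviations from the mean. The names `build_profiles` and `deviates`, the sample users, and the value of `k` are hypothetical and not taken from the cited systems.

```python
from statistics import mean, stdev


def build_profiles(history):
    """Map each user to the (mean, standard deviation) of past activity."""
    return {user: (mean(counts), stdev(counts)) for user, counts in history.items()}


def deviates(profiles, user, observed, k=3.0):
    """Return True if the observation departs from the user's profile."""
    mu, sigma = profiles[user]
    if sigma == 0:
        # No past variation: any change at all is a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > k


# Hypothetical daily login counts per user.
history = {
    "alice": [4, 5, 6, 5, 5],
    "bob": [20, 22, 19, 21, 18],
}
profiles = build_profiles(history)
alerts = [deviates(profiles, "alice", 40), deviates(profiles, "bob", 21)]
```

Because each profile is built per user, a value that is routine for one user can still be flagged for another.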

In data pre-processing

In supervised learning, anomaly detection is often an important step in data pre-processing, providing the learning algorithm with a proper dataset to learn from. This is also known as data cleansing. Anomalous samples are typically removed once they have been detected; at times, however, corrupted data can still provide useful samples for learning. A common way to find appropriate samples to use is to identify noisy data. One approach to finding noisy values is to create a probabilistic model from the data, using models of uncorrupted data and corrupted data.[37]

Below is an example of the Iris flower data set with an anomaly added. With an anomaly included, a classification algorithm may have difficulty finding patterns properly, or may run into errors.

| Dataset order | Sepal length | Sepal width | Petal length | Petal width | Species |
|---|---|---|---|---|---|
| 1 | 5.1 | 3.5 | 1.4 | 0.2 | I. setosa |
| 2 | 4.9 | 3.0 | 1.4 | 0.2 | I. setosa |
| 3 | 4.7 | 3.2 | 1.3 | 0.2 | I. setosa |
| 4 | 4.6 | 3.1 | 1.5 | 0.2 | I. setosa |
| 5 | 5.0 | NULL | 1.4 | NULL | I. setosa |

Once the anomaly is removed, the training algorithm can find patterns in the classifications more easily.
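For the table above, this cleaning step can be sketched in a few lines. The sketch assumes the NULL entries are represented as `None` and that any row containing a missing value is dropped; the function name `drop_incomplete` is hypothetical.

```python
# Rows of the example table: (order, sepal length, sepal width,
# petal length, petal width, species). NULL is represented as None.
rows = [
    (1, 5.1, 3.5, 1.4, 0.2, "I. setosa"),
    (2, 4.9, 3.0, 1.4, 0.2, "I. setosa"),
    (3, 4.7, 3.2, 1.3, 0.2, "I. setosa"),
    (4, 4.6, 3.1, 1.5, 0.2, "I. setosa"),
    (5, 5.0, None, 1.4, None, "I. setosa"),
]


def drop_incomplete(rows):
    """Keep only rows in which every field has a value."""
    return [row for row in rows if all(value is not None for value in row)]


clean = drop_incomplete(rows)
kept_orders = [row[0] for row in clean]
```

Dropping the row is the simplest option; as noted earlier, corrupted samples can sometimes still be useful, so other strategies may keep the row and handle only the missing fields.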

In data mining, high-dimensional data also pose considerable computational challenges when the data sets are very large. Removing the many samples that are irrelevant to a classifier or detection algorithm can significantly reduce runtime, even on the largest data sets.

Software

- ELKI is an open-source Java data mining toolkit that contains several anomaly detection algorithms, as well as index acceleration for them.

- Scikit-learn is an open-source Python library with built-in functionality for unsupervised anomaly detection.

Datasets

- Anomaly detection benchmark data repository of the Ludwig-Maximilians-Universität München; Mirror at University of São Paulo.

- ODDS: a large collection of publicly available outlier detection datasets with ground truth in different domains.

- Unsupervised Anomaly Detection Benchmark at Harvard Dataverse: Datasets for Unsupervised Anomaly Detection with ground truth.

- KMASH Data Repository at Research Data Australia, with more than 12,000 anomaly detection datasets with ground truth.

See also

References

- ^ Zimek, Arthur; Schubert, Erich (2017), "Outlier Detection", Encyclopedia of Database Systems, Springer New York, pp. 1–5, doi:10.1007/978-1-4899-7993-3_80719-1, ISBN 9781489979933

- ^ Hodge, V. J.; Austin, J. (2004). "A Survey of Outlier Detection Methodologies" (PDF). Artificial Intelligence Review. 22 (2): 85–126. CiteSeerX 10.1.1.318.4023. doi:10.1007/s10462-004-4304-y. S2CID 3330313.

- ^ Dokas, Paul; Ertoz, Levent; Kumar, Vipin; Lazarevic, Aleksandar; Srivastava, Jaideep; Tan, Pang-Ning (2002). "Data mining for network intrusion detection" (PDF). Proceedings NSF Workshop on Next Generation Data Mining.

- ^ Chandola, V.; Banerjee, A.; Kumar, V. (2009). "Anomaly detection: A survey". ACM Computing Surveys. 41 (3): 1–58. doi:10.1145/1541880.1541882. S2CID 207172599.

- ^ Bergmann, Paul; Kilian Batzner; Michael Fauser; David Sattlegger; Carsten Steger (2021). "The MVTec Anomaly Detection Dataset: A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection". International Journal of Computer Vision. 129 (4): 1038–1059. doi:10.1007/s11263-020-01400-4.

- ^ Tomek, Ivan (1976). "An Experiment with the Edited Nearest-Neighbor Rule". IEEE Transactions on Systems, Man, and Cybernetics. 6 (6): 448–452. doi:10.1109/TSMC.1976.4309523.

- ^ Smith, M. R.; Martinez, T. (2011). "Improving classification accuracy by identifying and removing instances that should be misclassified" (PDF). The 2011 International Joint Conference on Neural Networks. p. 2690. CiteSeerX 10.1.1.221.1371. doi:10.1109/IJCNN.2011.6033571. ISBN 978-1-4244-9635-8. S2CID 5809822.

- ^ Zimek, Arthur; Filzmoser, Peter (2018). "There and back again: Outlier detection between statistical reasoning and data mining algorithms" (PDF). Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery. 8 (6): e1280. doi:10.1002/widm.1280. ISSN 1942-4787.

- ^ Knorr, E. M.; Ng, R. T.; Tucakov, V. (2000). "Distance-based outliers: Algorithms and applications". The VLDB Journal the International Journal on Very Large Data Bases. 8 (3–4): 237–253. CiteSeerX 10.1.1.43.1842. doi:10.1007/s007780050006. S2CID 11707259.

- ^ Ramaswamy, S.; Rastogi, R.; Shim, K. (2000). Efficient algorithms for mining outliers from large data sets. Proceedings of the 2000 ACM SIGMOD international conference on Management of data – SIGMOD '00. p. 427. doi:10.1145/342009.335437. ISBN 1-58113-217-4.

- ^ Angiulli, F.; Pizzuti, C. (2002). Fast Outlier Detection in High Dimensional Spaces. Principles of Data Mining and Knowledge Discovery. Lecture Notes in Computer Science. 2431. p. 15. doi:10.1007/3-540-45681-3_2. ISBN 978-3-540-44037-6.

- ^ Breunig, M. M.; Kriegel, H.-P.; Ng, R. T.; Sander, J. (2000). LOF: Identifying Density-based Local Outliers (PDF). Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data. SIGMOD. pp. 93–104. doi:10.1145/335191.335388. ISBN 1-58113-217-4.

- ^ Liu, Fei Tony; Ting, Kai Ming; Zhou, Zhi-Hua (December 2008). Isolation Forest. 2008 Eighth IEEE International Conference on Data Mining. pp. 413–422. doi:10.1109/ICDM.2008.17. ISBN 9780769535029. S2CID 6505449.

- ^ Liu, Fei Tony; Ting, Kai Ming; Zhou, Zhi-Hua (March 2012). "Isolation-Based Anomaly Detection". ACM Transactions on Knowledge Discovery from Data. 6 (1): 1–39. doi:10.1145/2133360.2133363. S2CID 207193045.

- ^ Schubert, E.; Zimek, A.; Kriegel, H. -P. (2012). "Local outlier detection reconsidered: A generalized view on locality with applications to spatial, video, and network outlier detection". Data Mining and Knowledge Discovery. 28: 190–237. doi:10.1007/s10618-012-0300-z. S2CID 19036098.

- ^ Kriegel, H. P.; Kröger, P.; Schubert, E.; Zimek, A. (2009). Outlier Detection in Axis-Parallel Subspaces of High Dimensional Data. Advances in Knowledge Discovery and Data Mining. Lecture Notes in Computer Science. 5476. p. 831. doi:10.1007/978-3-642-01307-2_86. ISBN 978-3-642-01306-5.

- ^ Kriegel, H. P.; Kroger, P.; Schubert, E.; Zimek, A. (2012). Outlier Detection in Arbitrarily Oriented Subspaces. 2012 IEEE 12th International Conference on Data Mining. p. 379. doi:10.1109/ICDM.2012.21. ISBN 978-1-4673-4649-8.

- ^ Fanaee-T, H.; Gama, J. (2016). "Tensor-based anomaly detection: An interdisciplinary survey". Knowledge-Based Systems. 98: 130–147. doi:10.1016/j.knosys.2016.01.027.

- ^ Zimek, A.; Schubert, E.; Kriegel, H.-P. (2012). "A survey on unsupervised outlier detection in high-dimensional numerical data". Statistical Analysis and Data Mining. 5 (5): 363–387. doi:10.1002/sam.11161.

- ^ Schölkopf, B.; Platt, J. C.; Shawe-Taylor, J.; Smola, A. J.; Williamson, R. C. (2001). "Estimating the Support of a High-Dimensional Distribution". Neural Computation. 13 (7): 1443–71. CiteSeerX 10.1.1.4.4106. doi:10.1162/089976601750264965. PMID 11440593. S2CID 2110475.

- ^ Hawkins, Simon; He, Hongxing; Williams, Graham; Baxter, Rohan (2002). "Outlier Detection Using Replicator Neural Networks". Data Warehousing and Knowledge Discovery. Lecture Notes in Computer Science. 2454. pp. 170–180. CiteSeerX 10.1.1.12.3366. doi:10.1007/3-540-46145-0_17. ISBN 978-3-540-44123-6.

- ^ An, J.; Cho, S. (2015). "Variational autoencoder based anomaly detection using reconstruction probability".

- ^ Malhotra, Pankaj; Vig, Lovekesh; Shroff, Gautam; Agarwal, Puneet (22–24 April 2015). Long Short Term Memory Networks for Anomaly Detection in Time Series. European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning. Bruges (Belgium).

- ^ He, Z.; Xu, X.; Deng, S. (2003). "Discovering cluster-based local outliers". Pattern Recognition Letters. 24 (9–10): 1641–1650. CiteSeerX 10.1.1.20.4242. doi:10.1016/S0167-8655(03)00003-5.

- ^ Campello, R. J. G. B.; Moulavi, D.; Zimek, A.; Sander, J. (2015). "Hierarchical Density Estimates for Data Clustering, Visualization, and Outlier Detection". ACM Transactions on Knowledge Discovery from Data. 10 (1): 5:1–51. doi:10.1145/2733381. S2CID 2887636.

- ^ Lazarevic, A.; Kumar, V. (2005). Feature bagging for outlier detection. Proc. 11th ACM SIGKDD International Conference on Knowledge Discovery in Data Mining. pp. 157–166. CiteSeerX 10.1.1.399.425. doi:10.1145/1081870.1081891. ISBN 978-1-59593-135-1. S2CID 2054204.

- ^ Nguyen, H. V.; Ang, H. H.; Gopalkrishnan, V. (2010). Mining Outliers with Ensemble of Heterogeneous Detectors on Random Subspaces. Database Systems for Advanced Applications. Lecture Notes in Computer Science. 5981. p. 368. doi:10.1007/978-3-642-12026-8_29. ISBN 978-3-642-12025-1.

- ^ Kriegel, H. P.; Kröger, P.; Schubert, E.; Zimek, A. (2011). Interpreting and Unifying Outlier Scores. Proceedings of the 2011 SIAM International Conference on Data Mining. pp. 13–24. CiteSeerX 10.1.1.232.2719. doi:10.1137/1.9781611972818.2. ISBN 978-0-89871-992-5.

- ^ Schubert, E.; Wojdanowski, R.; Zimek, A.; Kriegel, H. P. (2012). On Evaluation of Outlier Rankings and Outlier Scores. Proceedings of the 2012 SIAM International Conference on Data Mining. pp. 1047–1058. doi:10.1137/1.9781611972825.90. ISBN 978-1-61197-232-0.

- ^ Zimek, A.; Campello, R. J. G. B.; Sander, J. R. (2014). "Ensembles for unsupervised outlier detection". ACM SIGKDD Explorations Newsletter. 15: 11–22. doi:10.1145/2594473.2594476. S2CID 8065347.

- ^ Zimek, A.; Campello, R. J. G. B.; Sander, J. R. (2014). Data perturbation for outlier detection ensembles. Proceedings of the 26th International Conference on Scientific and Statistical Database Management – SSDBM '14. p. 1. doi:10.1145/2618243.2618257. ISBN 978-1-4503-2722-0.

- ^ Campos, Guilherme O.; Zimek, Arthur; Sander, Jörg; Campello, Ricardo J. G. B.; Micenková, Barbora; Schubert, Erich; Assent, Ira; Houle, Michael E. (2016). "On the evaluation of unsupervised outlier detection: measures, datasets, and an empirical study". Data Mining and Knowledge Discovery. 30 (4): 891. doi:10.1007/s10618-015-0444-8. ISSN 1384-5810. S2CID 1952214.

- ^ Anomaly detection benchmark data repository of the Ludwig-Maximilians-Universität München; Mirror at University of São Paulo.

- ^ Denning, D. E. (1987). "An Intrusion-Detection Model" (PDF). IEEE Transactions on Software Engineering. SE-13 (2): 222–232. CiteSeerX 10.1.1.102.5127. doi:10.1109/TSE.1987.232894. S2CID 10028835.

- ^ Teng, H. S.; Chen, K.; Lu, S. C. (1990). Adaptive real-time anomaly detection using inductively generated sequential patterns (PDF). Proceedings of the IEEE Computer Society Symposium on Research in Security and Privacy. pp. 278–284. doi:10.1109/RISP.1990.63857. ISBN 978-0-8186-2060-7. S2CID 35632142.

- ^ Jones, Anita K.; Sielken, Robert S. (1999). "Computer System Intrusion Detection: A Survey". Technical Report, Department of Computer Science, University of Virginia, Charlottesville, VA. CiteSeerX 10.1.1.24.7802.

- ^ Kubica, J.; Moore, A. (2003). "Probabilistic noise identification and data cleaning". Third IEEE International Conference on Data Mining. IEEE Comput. Soc: 131–138. doi:10.1109/icdm.2003.1250912. ISBN 0-7695-1978-4.

- Data mining

- Machine learning

- Data security

- Statistical outliers