Flow-based generative model

| Part of a series on |

| Machine learning and data mining |

|---|

|

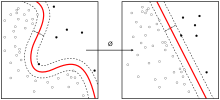

A flow-based generative model is a generative model used in machine learning that explicitly models a probability distribution by leveraging normalizing flows,[1] a statistical method that uses the change-of-variables formula for probability densities to transform a simple distribution into a complex one.

The direct modeling of likelihood provides many advantages. For example, the negative log-likelihood can be directly computed and minimized as the loss function. Additionally, novel samples can be generated by sampling from the initial distribution, and applying the flow transformation.

In contrast, many alternative generative modeling methods, such as variational autoencoders (VAEs) and generative adversarial networks (GANs), do not explicitly represent the likelihood function.

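Method

Let $z_0$ be a (possibly multivariate) random variable with distribution $p_0(z_0)$.

For $i = 1, \ldots, K$, let $z_i = f_i(z_{i-1})$ be a sequence of random variables transformed from $z_0$. The functions $f_1, \ldots, f_K$ should be invertible, i.e. the inverse function $f_i^{-1}$ exists. The final output $z_K$ models the target distribution.

The log likelihood of $z_K$ is (see derivation):

$$\log p_K(z_K) = \log p_0(z_0) - \sum_{i=1}^{K} \log \left| \det \frac{d f_i(z_{i-1})}{d z_{i-1}} \right|$$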

To compute the log likelihood efficiently, each transformation should (1) be easy to invert and (2) have a Jacobian determinant that is easy to compute. In practice, the transformations are modeled using deep neural networks and are trained to minimize the negative log-likelihood of data samples from the target distribution. These architectures are usually designed such that only the forward pass of the neural network is required in both the inverse and the Jacobian determinant calculations. Examples of such architectures include NICE,[2] RealNVP,[3] and Glow.[4]
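As an illustration of a layer whose inverse and Jacobian determinant need only forward evaluations, the following is a minimal sketch of a two-dimensional affine coupling layer in the spirit of RealNVP. It is not taken from any of the cited papers: the functions `scale` and `shift` are hypothetical stand-ins for neural networks, and the base distribution is assumed to be a standard normal.

```python
import math

# Hypothetical scale and shift functions. In a real model these would be
# neural networks; they do not need to be invertible themselves.
def scale(u):
    return math.tanh(u)

def shift(u):
    return 0.5 * u * u

def coupling_forward(u, v):
    """Map a base point (u, v) to a data point and return log|det J|."""
    s = scale(u)
    u_out = u                        # first coordinate passes through unchanged
    v_out = v * math.exp(s) + shift(u)
    # The Jacobian is triangular with diagonal (1, exp(s)), so log|det J| = s.
    return u_out, v_out, s

def coupling_inverse(u_out, v_out):
    """Invert the layer using only forward evaluations of scale and shift."""
    u = u_out
    v = (v_out - shift(u)) * math.exp(-scale(u))
    return u, v

def standard_normal_logpdf(z):
    return -0.5 * (z * z + math.log(2.0 * math.pi))

def log_likelihood(u_out, v_out):
    """log p(data) = log p0(base) - log|det J|, with base the preimage of data."""
    u, v = coupling_inverse(u_out, v_out)
    _, _, log_det = coupling_forward(u, v)
    base = standard_normal_logpdf(u) + standard_normal_logpdf(v)
    return base - log_det
```

Sampling under these assumptions amounts to drawing `u` and `v` from a standard normal and calling `coupling_forward`; training would minimize the negative of `log_likelihood` over data points.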

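Derivation of log likelihood

Consider $z_1$ and $z_0$. Note that $z_0 = f_1^{-1}(z_1)$.

By the change of variable formula, the distribution of $z_1$ is:

$$p_1(z_1) = p_0(z_0) \left| \det \frac{d f_1^{-1}(z_1)}{d z_1} \right|$$

Where $\det \frac{d f_1^{-1}(z_1)}{d z_1}$ is the determinant of the Jacobian matrix of $f_1^{-1}$.

By the inverse function theorem:

$$p_1(z_1) = p_0(z_0) \left| \det \left( \frac{d f_1(z_0)}{d z_0} \right)^{-1} \right|$$

By the identity $\det(A^{-1}) = \det(A)^{-1}$ (where $A$ is an invertible matrix), we have:

$$p_1(z_1) = p_0(z_0) \left| \det \frac{d f_1(z_0)}{d z_0} \right|^{-1}$$

The log likelihood is thus:

$$\log p_1(z_1) = \log p_0(z_0) - \log \left| \det \frac{d f_1(z_0)}{d z_0} \right|$$

In general, the above applies to any $z_i$ and $z_{i-1}$. Since $\log p_i(z_i)$ is equal to $\log p_{i-1}(z_{i-1})$ subtracted by a non-recursive term, we can infer by induction that:

$$\log p_K(z_K) = \log p_0(z_0) - \sum_{i=1}^{K} \log \left| \det \frac{d f_i(z_{i-1})}{d z_{i-1}} \right|$$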
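The inductive result, that the output log-density equals the base log-density minus the sum of the layers' log absolute Jacobian determinants, can be checked numerically in a case with a known answer. The sketch below assumes a standard normal base distribution and scalar affine layers with hypothetical parameters in `LAYERS`; a composition of affine maps of a Gaussian is again Gaussian, which gives a closed-form reference.

```python
import math

def standard_normal_logpdf(z):
    return -0.5 * (z * z + math.log(2.0 * math.pi))

# Hypothetical layer parameters (a_i, b_i) for f_i(z) = a_i * z + b_i, a_i != 0.
LAYERS = [(2.0, 1.0), (-0.5, 3.0), (1.5, -2.0)]

def flow_log_likelihood(z_k, layers=LAYERS):
    """Return log p_K(z_K) by inverting the layers from last to first."""
    z = z_k
    sum_log_det = 0.0
    for a, b in reversed(layers):
        z = (z - b) / a                  # z_{i-1} = f_i^{-1}(z_i)
        sum_log_det += math.log(abs(a))  # log|det d f_i / d z_{i-1}| = log|a_i|
    return standard_normal_logpdf(z) - sum_log_det

def gaussian_reference(z_k, layers=LAYERS):
    """Closed form: pushing N(0, 1) through affine maps gives N(mu, sigma^2)."""
    mu, sigma = 0.0, 1.0
    for a, b in layers:
        mu, sigma = a * mu + b, abs(a) * sigma
    standardized = (z_k - mu) / sigma
    return standard_normal_logpdf(standardized) - math.log(sigma)
```

For any input, `flow_log_likelihood` and `gaussian_reference` should agree up to floating-point error, since the summed `log(abs(a))` terms equal the log of the final standard deviation.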

Variants

Continuous Normalizing Flow (CNF)

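Instead of constructing a flow by function composition, another approach is to formulate the flow as continuous-time dynamics.[5] Let $z_0$ be the latent variable with distribution $p(z_0)$. Map this latent variable to data space with the following flow function:

$$x = F(z_0) = z_T = z_0 + \int_0^T f(z_t, t) \, dt$$

Where $f$ is an arbitrary function and can be modeled with e.g. neural networks.

The inverse function is then naturally:[5]

$$z_0 = F^{-1}(x) = z_T + \int_T^0 f(z_t, t) \, dt = z_T - \int_0^T f(z_t, t) \, dt$$

And the log-likelihood of $x$ can be found as:[5]

$$\log(p(x)) = \log(p(z_0)) - \int_0^T \text{Tr}\left[ \frac{\partial f}{\partial z_t} \right] dt$$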

Because the flow and its log-likelihood are defined through integrals, numerical techniques such as neural ODEs[6] may be needed in practice.
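To make the role of numerical integration concrete, here is a minimal one-dimensional sketch. It is not the FFJORD method: it substitutes a fixed-step Euler scheme for an adaptive ODE solver and uses hypothetical linear dynamics `f(z, t) = A * z`, chosen only because the exact answer is known. The end time `T`, the coefficient `A`, and the standard normal base distribution are all assumptions.

```python
import math

T = 1.0   # hypothetical end time of the flow
A = 0.5   # hypothetical coefficient of the linear dynamics

def f(z, t):
    """Dynamics dz/dt; a neural network would take this place in practice."""
    return A * z

def trace_df_dz(z, t):
    """Trace of the Jacobian of f; in one dimension this is just df/dz."""
    return A

def standard_normal_logpdf(z):
    return -0.5 * (z * z + math.log(2.0 * math.pi))

def cnf_log_likelihood(x, steps=10000):
    """Integrate backward from t = T to t = 0 with Euler steps to recover z_0,
    accumulating the trace integral along the way."""
    dt = T / steps
    z = x
    trace_integral = 0.0
    for k in range(steps):
        t = T - k * dt
        trace_integral += trace_df_dz(z, t) * dt
        z = z - f(z, t) * dt   # Euler step backward in time
    return standard_normal_logpdf(z) - trace_integral

def exact_log_likelihood(x):
    """Closed form for the linear dynamics: z_0 = x * exp(-A * T)."""
    return standard_normal_logpdf(x * math.exp(-A * T)) - A * T
```

With a small enough step size, `cnf_log_likelihood` should approach `exact_log_likelihood`. For higher-dimensional or nonlinear dynamics no such closed form is generally available, which is where dedicated ODE solvers come in.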

Applications

Flow-based generative models have been applied to a variety of modeling tasks, including:

- Audio generation[7]

- Image generation[4]

- Molecular graph generation[8]

- Point-cloud modeling[9]

- Video generation[10]

References

- [1] Rezende, Danilo Jimenez; Mohamed, Shakir (2015). "Variational Inference with Normalizing Flows". arXiv:1505.05770 [stat.ML].

- [2] Dinh, Laurent; Krueger, David; Bengio, Yoshua (2014). "NICE: Non-linear Independent Components Estimation". arXiv:1410.8516 [cs.LG].

- [3] Dinh, Laurent; Sohl-Dickstein, Jascha; Bengio, Samy (2016). "Density estimation using Real NVP". arXiv:1605.08803 [cs.LG].

- [4] Kingma, Diederik P.; Dhariwal, Prafulla (2018). "Glow: Generative Flow with Invertible 1x1 Convolutions". arXiv:1807.03039 [stat.ML].

- [5] Grathwohl, Will; Chen, Ricky T. Q.; Bettencourt, Jesse; Sutskever, Ilya; Duvenaud, David (2018). "FFJORD: Free-form Continuous Dynamics for Scalable Reversible Generative Models". arXiv:1810.01367 [cs.LG].

- [6] Chen, Ricky T. Q.; Rubanova, Yulia; Bettencourt, Jesse; Duvenaud, David (2018). "Neural Ordinary Differential Equations". arXiv:1806.07366 [cs.LG].

- [7] Ping, Wei; Peng, Kainan; Zhao, Kexin; Song, Zhao (2019). "WaveFlow: A Compact Flow-based Model for Raw Audio". arXiv:1912.01219 [cs.SD].

- [8] Shi, Chence; Xu, Minkai; Zhu, Zhaocheng; Zhang, Weinan; Zhang, Ming; Tang, Jian (2020). "GraphAF: A Flow-based Autoregressive Model for Molecular Graph Generation". arXiv:2001.09382 [cs.LG].

- [9] Yang, Guandao; Huang, Xun; Hao, Zekun; Liu, Ming-Yu; Belongie, Serge; Hariharan, Bharath (2019). "PointFlow: 3D Point Cloud Generation with Continuous Normalizing Flows". arXiv:1906.12320 [cs.CV].

- [10] Kumar, Manoj; Babaeizadeh, Mohammad; Erhan, Dumitru; Finn, Chelsea; Levine, Sergey; Dinh, Laurent; Kingma, Durk (2019). "VideoFlow: A Conditional Flow-Based Model for Stochastic Video Generation". arXiv:1903.01434 [cs.CV].
