DALL-E

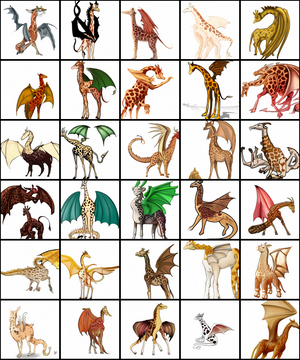

Images produced by DALL-E when given the text prompt "a professional high quality illustration of a giraffe dragon chimera. a giraffe imitating a dragon. a giraffe made of dragon."

| Original author(s) | OpenAI |
|---|---|
| Initial release | 5 January 2021 |
| Type | Transformer language model |
| Website | www |


DALL-E (stylized DALL·E) is an artificial intelligence program that creates images from textual descriptions, revealed by OpenAI on January 5, 2021.[1] It uses a 12-billion parameter[2] version of the GPT-3 Transformer model to interpret natural language inputs (such as "a green leather purse shaped like a pentagon" or "an isometric view of a sad capybara") and generate corresponding images.[3] It can create images of realistic objects ("a stained glass window with an image of a blue strawberry") as well as objects that do not exist in reality ("a cube with the texture of a porcupine").[4][5][6] Its name is a portmanteau of WALL-E and Salvador Dalí.[3][2]

Many neural nets from the 2000s onward have been able to generate realistic images.[3] DALL-E, however, is able to generate them from natural language prompts, which it "understands [...] and rarely fails in any serious way".[3]

DALL-E was developed and announced to the public in conjunction with CLIP (Contrastive Language-Image Pre-training),[1] a separate model whose role is to "understand and rank" its output.[3] The images that DALL-E generates are curated by CLIP, which presents the highest-quality images for any given prompt.[1] OpenAI has refused to release source code for either model; a "controlled demo" of DALL-E is available on OpenAI's website, where output from a limited selection of sample prompts can be viewed.[2] Open-source alternatives trained on smaller amounts of data, such as DALL-E Mini, have been released by community developers.[7]

According to MIT Technology Review, one of OpenAI's objectives was to "give language models a better grasp of the everyday concepts that humans use to make sense of things".[1]

Architecture

The Generative Pre-trained Transformer (GPT) model was initially developed by OpenAI in 2018,[8] using the Transformer architecture. The first iteration, GPT, was scaled up to produce GPT-2 in 2019;[9] in 2020 it was scaled up again to produce GPT-3.[10][2][11]

DALL-E's model is a multimodal implementation of GPT-3[12] with 12 billion parameters[2] (scaled down from GPT-3's 175 billion)[10] which "swaps text for pixels", trained on text-image pairs from the Internet.[1] It uses zero-shot learning to generate output from a description and cue without further training.[13]

DALL-E generates large numbers of images in response to prompts. Another OpenAI model, CLIP, was developed in conjunction with DALL-E (and announced simultaneously) to "understand and rank" this output.[3] CLIP is an image recognition system[14] trained on over 400 million pairs of images and text.[2] Unlike most classifier models, CLIP was not trained on curated datasets of labeled images (such as ImageNet), but on images and descriptions scraped from the Internet.[1] Rather than learning from a single label, CLIP associates images with entire captions.[1] It was trained to predict which caption (out of a "random selection" of 32,768 possible captions) was most appropriate for an image, allowing it to subsequently identify objects in a wide variety of images outside its training set.[1]
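The "understand and rank" role described above can be illustrated with a minimal sketch. It assumes that captions and images have already been mapped to vectors in a shared embedding space; the hand-written three-dimensional vectors, the function names, and the temperature value below are illustrative assumptions, not OpenAI's code or CLIP's actual interface. The sketch scores each pair by cosine similarity, turns the scores into caption probabilities with a softmax (the "which caption fits this image" task), and reorders candidate images for a prompt.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def caption_probabilities(image_vec, caption_vecs, temperature=0.07):
    """Probability that each candidate caption matches the image.

    The temperature is an assumed illustrative value; it only controls
    how sharply the best match is preferred.
    """
    scaled = [cosine_similarity(image_vec, c) / temperature for c in caption_vecs]
    return softmax(scaled)


def rank_images(caption_vec, image_vecs, top_k=2):
    """Indices of the top_k images most similar to the caption, best first."""
    order = sorted(
        range(len(image_vecs)),
        key=lambda i: cosine_similarity(caption_vec, image_vecs[i]),
        reverse=True,
    )
    return order[:top_k]


# Toy embeddings (hypothetical): one prompt and three candidate images.
caption = [1.0, 0.0, 0.0]
images = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.5, 0.5, 0.0]]

best = rank_images(caption, images)
print(best)  # [0, 2]
```

In a real system the vectors would come from trained text and image encoders, and the candidate pool would be far larger than three images; only the scoring and sorting step is shown here.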

Performance

DALL-E is capable of generating imagery in a variety of styles, from photorealistic imagery[2] to paintings and emoji. It can also "manipulate and rearrange" objects in its images.[2] One ability noted by its creators was the correct placement of design elements in novel compositions without explicit instruction: "For example, when asked to draw a daikon radish blowing its nose, sipping a latte, or riding a unicycle, DALL·E often draws the kerchief, hands, and feet in plausible locations."[15]

While DALL-E exhibited a wide variety of skills and abilities, on the release of its public demo, most coverage focused on a small subset of "surreal"[1] or "quirky"[16] output images. Specifically, DALL-E's output for "an illustration of a baby daikon radish in a tutu walking a dog" was mentioned in pieces from Input,[17] NBC,[18] Nature,[19] VentureBeat,[2] Wired,[20] CNN,[21] New Scientist[22] and the BBC;[23] its output for "an armchair in the shape of an avocado" was reported on by Wired,[20] VentureBeat,[2] New Scientist,[22] NBC,[18] MIT Technology Review,[1] CNBC,[16] CNN[21] and the BBC.[23] In contrast, DALL-E's unintentional development of visual reasoning skills sufficient to solve Raven's Matrices (visual tests often administered to humans to measure intelligence) was reported on by machine learning engineer Dale Markowitz in a piece for TheNextWeb.[24]

Nature introduced DALL-E as "an artificial-intelligence program that can draw pretty much anything you ask for".[19] TheNextWeb's Thomas Macaulay called its images "striking" and "seriously impressive", remarking on its ability to "create entirely new pictures by exploring the structure of a prompt — including fantastical objects combining unrelated ideas that it was never fed in training".[25] ExtremeTech said that "sometimes the renderings are little better than fingerpainting, but other times they’re startlingly accurate portrayals";[26] TechCrunch noted that, while DALL-E was "fabulously interesting and powerful work", it occasionally produced bizarre or incomprehensible output, and "many images it generates are more than a little… off":[3]

> Saying “a green leather purse shaped like a pentagon” may produce what’s expected but “a blue suede purse shaped like a pentagon” might produce nightmare fuel. Why? It’s hard to say, given the black-box nature of these systems.[3]

Despite this, DALL-E was described as "remarkably robust to such changes" and reliable in producing images for a wide variety of arbitrary descriptions.[3] Sam Shead, reporting for CNBC, called its images "quirky" and quoted Neil Lawrence, a professor of machine learning at the University of Cambridge, who described it as an "inspirational demonstration of the capacity of these models to store information about our world and generalize in ways that humans find very natural". Shead also quoted Mark Riedl, an associate professor at the Georgia Tech School of Interactive Computing, as saying that DALL-E's demonstration results showed that it was able to "coherently blend concepts", a key element of human creativity, and that "the DALL-E demo is remarkable for producing illustrations that are much more coherent than other Text2Image systems I’ve seen in the past few years."[16] Riedl was also quoted by the BBC as saying that he was "impressed by what the system could do".[23]

DALL-E's ability to "fill in the blanks" and infer appropriate details without specific prompts was remarked on as well. ExtremeTech noted that a prompt to draw a penguin wearing a Christmas sweater produced images of penguins wearing not only sweaters, but also thematically related Santa hats,[26] and Engadget noted that appropriately placed shadows appeared in output for the prompt "a painting of a fox sitting in a field during winter".[13] Furthermore, DALL-E exhibits broad understanding of visual and design trends; ExtremeTech said that "you can ask DALL-E for a picture of a phone or vacuum cleaner from a specified period of time, and it understands how those objects have changed".[26] Engadget also noted its unusual ability of "understanding how telephones and other objects change over time".[13] DALL-E has been described, along with other "narrow AI" like AlphaGo, AlphaFold and GPT-3, as "[generating] interest in whether and how artificial general intelligence may be achieved".[27]

Implications

OpenAI has refused to release the source code for DALL-E, or allow any use of it outside a small number of sample prompts;[2] OpenAI claimed that it planned to "analyze the societal impacts"[25] and "potential for bias" in models like DALL-E.[16] Despite the lack of access, at least one potential implication of DALL-E has been discussed: several journalists and content writers predicted that it could affect the fields of journalism and content writing. Sam Shead's CNBC piece noted that some had concerns about the lack, at the time, of a published paper describing the system, and that DALL-E had not been "opened sourced" [sic].[16]

While TechCrunch said "don’t write stock photography and illustration’s obituaries just yet",[3] Engadget said that "if developed further, DALL-E has vast potential to disrupt fields like stock photography and illustration, with all the good and bad that entails".[13]

In a Forbes opinion piece, venture capitalist Rob Toews said that DALL-E "presaged the dawn of a new AI paradigm known as multimodal AI", in which systems would be capable of "interpreting, synthesizing and translating between multiple informational modalities"; he went on to say DALL-E demonstrated that "it is becoming harder and harder to deny that artificial intelligence is capable of creativity". Based on the sample prompts (which included clothed mannequins and items of furniture), he predicted that DALL-E might be used by fashion designers and furniture designers, but that "the technology is going to continue to improve rapidly".[28]

References

- Heaven, Will Douglas (5 January 2021). "This avocado armchair could be the future of AI". MIT Technology Review. Retrieved 5 January 2021.

- Johnson, Khari (5 January 2021). "OpenAI debuts DALL-E for generating images from text". VentureBeat. Archived from the original on 5 January 2021. Retrieved 5 January 2021.

- Coldewey, Devin (5 January 2021). "OpenAI's DALL-E creates plausible images of literally anything you ask it to". TechCrunch. Archived from the original on 6 January 2021. Retrieved 5 January 2021.

- Grossman, Gary (16 January 2021). "OpenAI's text-to-image engine, DALL-E, is a powerful visual idea generator". VentureBeat. Archived from the original on 26 February 2021. Retrieved 2 March 2021.

- Andrei, Mihai (8 January 2021). "This AI module can create stunning images out of any text input". ZME Science. Archived from the original on 29 January 2021. Retrieved 2 March 2021.

- Walsh, Bryan (5 January 2021). "A new AI model draws images from text". Axios. Retrieved 2 March 2021.

- Dayma, Boris; Patil, Suraj; Cuenca, Pedro; Saifullah, Khalid; Abraham, Tanishq; Lê Khắc, Phúc; Melas, Luke; Ghosh, Ritobrata. DALL·E Mini. Retrieved 29 November 2021.

- Radford, Alec; Narasimhan, Karthik; Salimans, Tim; Sutskever, Ilya (11 June 2018). "Improving Language Understanding by Generative Pre-Training" (PDF). OpenAI. p. 12. Archived (PDF) from the original on 26 January 2021. Retrieved 23 January 2021.

- Radford, Alec; Wu, Jeffrey; Child, Rewon; Luan, David; Amodei, Dario; Sutskever, Ilya (14 February 2019). "Language models are unsupervised multitask learners" (PDF). 1 (8). Archived (PDF) from the original on 6 February 2021. Retrieved 19 December 2020.

- Brown, Tom B.; Mann, Benjamin; Ryder, Nick; Subbiah, Melanie; Kaplan, Jared; Dhariwal, Prafulla; Neelakantan, Arvind; Shyam, Pranav; Sastry, Girish; Askell, Amanda; Agarwal, Sandhini; Herbert-Voss, Ariel; Krueger, Gretchen; Henighan, Tom; Child, Rewon; Ramesh, Aditya; Ziegler, Daniel M.; Wu, Jeffrey; Winter, Clemens; Hesse, Christopher; Chen, Mark; Sigler, Eric; Litwin, Mateusz; Gray, Scott; Chess, Benjamin; Clark, Jack; Berner, Christopher; McCandlish, Sam; Radford, Alec; Sutskever, Ilya; Amodei, Dario (22 July 2020). "Language Models are Few-Shot Learners". arXiv:2005.14165 [cs.CL].

- Ramesh, Aditya; Pavlov, Mikhail; Goh, Gabriel; Gray, Scott; Voss, Chelsea; Radford, Alec; Chen, Mark; Sutskever, Ilya (24 February 2021). "Zero-Shot Text-to-Image Generation". arXiv:2101.12092 [cs.LG].

- Tamkin, Alex; Brundage, Miles; Clark, Jack; Ganguli, Deep (2021). "Understanding the Capabilities, Limitations, and Societal Impact of Large Language Models". arXiv:2102.02503 [cs.CL].

- Dent, Steve (6 January 2021). "OpenAI's DALL-E app generates images from just a description". Engadget. Archived from the original on 27 January 2021. Retrieved 2 March 2021.

- "For Its Latest Trick, OpenAI's GPT-3 Generates Images From Text Captions". Synced. 5 January 2021. Archived from the original on 6 January 2021. Retrieved 2 March 2021.

- Dunn, Thom (10 February 2021). "This AI neural network transforms text captions into art, like a jellyfish Pikachu". BoingBoing. Archived from the original on 22 February 2021. Retrieved 2 March 2021.

- Shead, Sam (8 January 2021). "Why everyone is talking about an image generator released by an Elon Musk-backed A.I. lab". CNBC. Retrieved 2 March 2021.

- Kasana, Mehreen (7 January 2021). "This AI turns text into surreal, suggestion-driven art". Input. Archived from the original on 29 January 2021. Retrieved 2 March 2021.

- Ehrenkranz, Melanie (27 January 2021). "Here's DALL-E: An algorithm learned to draw anything you tell it". NBC News. Archived from the original on 20 February 2021. Retrieved 2 March 2021.

- Stove, Emma (5 February 2021). "Tardigrade circus and a tree of life — January's best science images". Nature. Archived from the original on 8 March 2021. Retrieved 2 March 2021.

- Knight, Will (26 January 2021). "This AI Could Go From 'Art' to Steering a Self-Driving Car". Wired. Archived from the original on 21 February 2021. Retrieved 2 March 2021.

- Metz, Rachel (2 February 2021). "A radish in a tutu walking a dog? This AI can draw it really well". CNN. Retrieved 2 March 2021.

- Stokel-Walker, Chris (5 January 2021). "AI illustrator draws imaginative pictures to go with text captions". New Scientist. Archived from the original on 28 January 2021. Retrieved 4 March 2021.

- Wakefield, Jane (6 January 2021). "AI draws dog-walking baby radish in a tutu". British Broadcasting Corporation. Archived from the original on 2 March 2021. Retrieved 3 March 2021.

- Markowitz, Dale (10 January 2021). "Here's how OpenAI's magical DALL-E image generator works". TheNextWeb. Archived from the original on 23 February 2021. Retrieved 2 March 2021.

- Macaulay, Thomas (6 January 2021). "Say hello to OpenAI's DALL-E, a GPT-3-powered bot that creates weird images from text". TheNextWeb. Archived from the original on 28 January 2021. Retrieved 2 March 2021.

- Whitwam, Ryan (6 January 2021). "OpenAI's 'DALL-E' Generates Images From Text Descriptions". ExtremeTech. Archived from the original on 28 January 2021. Retrieved 2 March 2021.

- Nichele, Stefano (2021). "Tim Taylor and Alan Dorin: Rise of the self-replicators—early visions of machines, AI and robots that can reproduce and evolve". Genetic Programming and Evolvable Machines. 22: 141–145. doi:10.1007/s10710-021-09398-5. S2CID 231930573.

- Toews, Rob (18 January 2021). "AI And Creativity: Why OpenAI's Latest Model Matters". Forbes. Archived from the original on 12 February 2021. Retrieved 2 March 2021.
