Layer (deep learning)

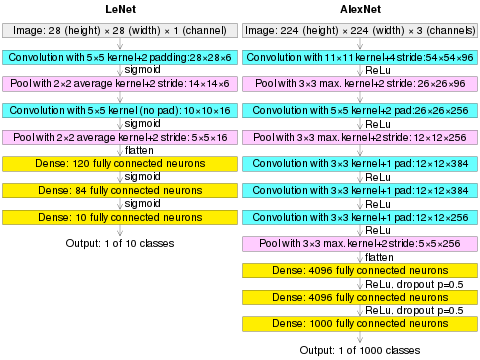

A layer in a deep learning model is a structure or network topology in the model's architecture that takes information from the previous layer and passes information to the next layer. Well-known layer types include the convolutional layer[1] and the max-pooling layer[2][3] in convolutional neural networks, the fully connected layer and the ReLU layer in vanilla neural networks, the RNN layer in recurrent neural network (RNN) models,[4][5][6] and the deconvolutional layer in autoencoders.
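To make the idea of layers passing information forward concrete, here is a minimal pure-Python sketch. It assumes each layer is modeled as a plain function from a list of numbers to a list of numbers. It implements a ReLU layer and a one-dimensional max-pooling layer (window and stride of 2, an illustrative choice) and chains them with a hypothetical `sequential` helper. These names are for illustration only and are not taken from any particular library.

```python
from typing import Callable, List, Sequence

# Illustrative assumption: a "layer" is a function mapping a list of floats to a list of floats.
Layer = Callable[[Sequence[float]], List[float]]


def relu(x: Sequence[float]) -> List[float]:
    """ReLU layer: replaces every negative activation with 0."""
    return [max(0.0, v) for v in x]


def max_pool_1d(x: Sequence[float], size: int = 2) -> List[float]:
    """Max-pooling layer over non-overlapping windows of length `size` (stride = size).

    Trailing values that do not fill a complete window are dropped.
    """
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


def sequential(layers: Sequence[Layer]) -> Layer:
    """Hypothetical helper: chain layers so each receives the previous layer's output."""
    def forward(x: Sequence[float]) -> List[float]:
        out = list(x)
        for layer in layers:
            out = layer(out)  # output of this layer becomes input of the next
        return out
    return forward


model = sequential([relu, max_pool_1d])
print(model([-1.0, 2.0, 3.0, -4.0, 0.5, -0.5]))  # [2.0, 3.0, 0.5]
```

Frameworks typically represent layers as objects holding learnable parameters and operate on multi-dimensional tensors; the function-based chaining here only illustrates how data flows from one layer to the next.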

Differences with layers of the neocortex

There is an intrinsic difference between layering in deep learning and layering in the neocortex: deep learning layering depends on network topology, while neocortical layering depends on intra-layer homogeneity.

Dense layer

A dense layer, also called a fully connected layer, is a layer in which each neuron is connected to every neuron in the preceding layer.[7][8][9][10]
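One of the cited sources describes a fully connected layer as one that multiplies the input by a weight matrix and then adds a bias vector, that is, y = Wx + b. Below is a minimal pure-Python sketch of that computation, assuming plain lists for the input, the weights (one row per output neuron, one column per input neuron), and the bias. The function name `dense` and the example values are illustrative only, and no activation function is applied.

```python
from typing import List, Sequence


def dense(x: Sequence[float],
          weights: Sequence[Sequence[float]],
          bias: Sequence[float]) -> List[float]:
    """Illustrative fully connected layer computing y = W x + b.

    Each row of `weights` belongs to one output neuron and has one entry per
    input, so every output neuron depends on every input neuron.
    """
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each weight row must have one entry per input")
    if len(bias) != len(weights):
        raise ValueError("bias must have one entry per output neuron")
    # Dot product of each weight row with the input, plus that neuron's bias.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


W = [[1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5]]
b = [0.0, 1.0]
print(dense([1.0, 2.0, 3.0], W, b))  # [-2.0, 4.0]
```

Because every input connects to every output, a layer with n inputs and m outputs has m × n weights plus m biases; for the example above that is 2 × 3 + 2 = 8 parameters.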

See also

- Deep learning

- Neocortex § Layers

References

- ^ Habibi Aghdam, Hamed; Heravi, Elnaz Jahani (2017-05-30). Guide to convolutional neural networks: a practical application to traffic-sign detection and classification. Cham, Switzerland. ISBN 9783319575490. OCLC 987790957.

- ^ Yamaguchi, Kouichi; Sakamoto, Kenji; Akabane, Toshio; Fujimoto, Yoshiji (November 1990). A Neural Network for Speaker-Independent Isolated Word Recognition. First International Conference on Spoken Language Processing (ICSLP 90). Kobe, Japan.

- ^ Ciresan, Dan; Meier, Ueli; Schmidhuber, Jürgen (June 2012). Multi-column deep neural networks for image classification. 2012 IEEE Conference on Computer Vision and Pattern Recognition. New York, NY: Institute of Electrical and Electronics Engineers (IEEE). pp. 3642–3649. arXiv:1202.2745. CiteSeerX 10.1.1.300.3283. doi:10.1109/CVPR.2012.6248110. ISBN 978-1-4673-1226-4. OCLC 812295155. S2CID 2161592.

- ^ Dupond, Samuel (2019). "A thorough review on the current advance of neural network structures". Annual Reviews in Control. 14: 200–230.

- ^ Abiodun, Oludare Isaac; Jantan, Aman; Omolara, Abiodun Esther; Dada, Kemi Victoria; Mohamed, Nachaat Abdelatif; Arshad, Humaira (2018-11-01). "State-of-the-art in artificial neural network applications: A survey". Heliyon. 4 (11): e00938. doi:10.1016/j.heliyon.2018.e00938. ISSN 2405-8440. PMC 6260436. PMID 30519653.

- ^ Tealab, Ahmed (2018-12-01). "Time series forecasting using artificial neural networks methodologies: A systematic review". Future Computing and Informatics Journal. 3 (2): 334–340. doi:10.1016/j.fcij.2018.10.003. ISSN 2314-7288.

- ^ "CS231n Convolutional Neural Networks for Visual Recognition". CS231n Convolutional Neural Networks for Visual Recognition. 10 May 2016. Retrieved 27 Apr 2021. "Fully-connected layer: Neurons in a fully connected layer have full connections to all activations in the previous layer, as seen in regular Neural Networks."

- ^ "Convolutional Neural Network. In this article, we will see what are… - by Arc". Medium. 26 Dec 2018. Retrieved 27 Apr 2021. "Fully Connected Layer is simply, feed forward neural networks."

- ^ "Fully connected layer". MATLAB. 1 Mar 2021. Retrieved 27 Apr 2021. "A fully connected layer multiplies the input by a weight matrix and then adds a bias vector."

- ^ Géron, Aurélien (2019). Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow: concepts, tools, and techniques to build intelligent systems. Sebastopol, CA: O'Reilly Media, Inc. pp. 322–323. ISBN 978-1-4920-3264-9. OCLC 1124925244.
